mirror of https://github.com/openappsec/openappsec.git

synced 2025-11-15 17:02:15 +03:00

Compare commits

173 Commits

orianelou- ... main

| SHA1 |
|---|
| 78d1bcf7c4 |
| c90862d74c |
| b7923dfd8c |
| ed4e20b010 |
| 14159402e2 |
| b74957d9d4 |
| 0c0da6d91b |
| ef887dd1c7 |
| 6bbc89712a |
| dd19bf6158 |
| 60facef890 |
| a3ac05642c |
| 682b91684d |
| ff8c5701fe |
| 796c6cf935 |
| 31ff6f2c72 |
| eac686216b |
| 938cae1270 |
| 87cdeef42f |
| d04ea7d3e2 |
| 6d649cf5d5 |
| 5f71946590 |
| c75f1e88b7 |
| c4975497eb |
| 782dfeada6 |
| bc1eac9d39 |
| 4dacd7d009 |
| 3a34984def |
| 5aaf787cfa |
| 2c7b5818e8 |
| c8743d4d4b |
| d703f16e35 |
| 692c430e8a |
| 72c5594b10 |
| 2c6b6baa3b |
| 37d0f1c45f |
| 2678db9d2f |
| 52c93ad574 |
| bd3a53041e |
| 44f40fbd1b |
| 0691f9b9cd |
| 0891dcd251 |
| 7669f0c89c |
| 39d7884bed |
| b8783c3065 |
| 37dc9f14b4 |
| 9a1f1b5966 |
| b0bfd3077c |
| 0469f5aa1f |
| 3578797214 |
| 16a72fdf3e |
| 87d257f268 |
| 36d8006c26 |
| 8d47795d4d |
| f3656712b0 |
| b1781234fd |
| f71dca2bfa |
| bd333818ad |
| 95e776d7a4 |
| 51c2912434 |
| 0246b73bbd |
| 919921f6d3 |
| e9098e2845 |
| 97d042589b |
| df7be864e2 |
| ba8ec26344 |
| 97add465e8 |
| 38cb1f2c3b |
| 1dd9371840 |
| f23d22a723 |
| b51cf09190 |
| ceb6469a7e |
| b0ae283eed |
| 5fcb9bdc4a |
| fb5698360b |
| 147626bc7f |
| 448991ef75 |
| 2b1ee84280 |
| 77dd288eee |
| 3cb4def82e |
| a0dd7dd614 |
| 88eed946ec |
| 3e1ad8b0f7 |
| bd35c421c6 |
| 9d6e883724 |
| cd020a7ddd |
| bb35eaf657 |
| 648f9ae2b1 |
| 47e47d706a |
| b852809d1a |
| a77732f84c |
| a1a8e28019 |
| a99c2ec4a3 |
| f1303c1703 |
| bd8174ead3 |
| 4ddcd2462a |
| 81433bac25 |
| 8d03b49176 |
| 84f9624c00 |
| 3ecda7b979 |
| 8f05508e02 |
| f5b9c93fbe |
| 62b74c9a10 |
| e3163cd4fa |
| 1e98fc8c66 |
| 6fbe272378 |
| 7b3320ce10 |
| 25cc2d66e7 |
| 66e2112afb |
| ba7c9afd52 |
| 2aa0993d7e |
| 0cdfc9df90 |
| 010814d656 |
| 3779dd360d |
| 0e7dc2133d |
| c9095acbef |
| e47e29321d |
| 25a66e77df |
| 6eea40f165 |
| cee6ed511a |
| 4f145fd74f |
| 3fe5c5b36f |
| 7542a85ddb |
| fae4534e5c |
| 923a8a804b |
| b1731237d1 |
| 3d3d6e73b9 |
| 3f80127ec5 |
| abdee954bb |
| 9a516899e8 |
| 4fd2aa6c6b |
| 0db666ac4f |
| 493d9a6627 |
| 6db87fc7fe |
| d2b9bc8c9c |
| 886a5befe1 |
| 1f2502f9e4 |
| 9e4c5014ce |
| 024423cce9 |
| dc4b546bd1 |
| a86aca13b4 |
| 87b34590d4 |
| e0198a1a95 |
| d024ad5845 |
| 46d42c8fa3 |
| f6c36f3363 |
| 63541a4c3c |
| d14fa7a468 |
| ae0de5bf14 |
| d39919f348 |
| 4f215e1409 |
| f05b5f8cee |
| 949b656b13 |
| bbe293d215 |
| 35b2df729f |
| 7600b6218f |
| 108abdb35e |
| 64ebf013eb |
| 2c91793f08 |
| 72a263d25a |
| 4e14ff9a58 |
| 1fb28e14d6 |
| e38bb9525c |
| 63b8bb22c2 |
| 11c97330f5 |
| e56fb0bc1a |
| 4571d563f4 |
| 02c1db01f6 |
| c557affd9b |
| 8889c3c054 |
| f67eff87bc |
| fa6a2e4233 |
| b7e2efbf7e |

36 .github/ISSUE_TEMPLATE/bug_report.md (vendored, Normal file)

@@ -0,0 +1,36 @@

---

name: "Bug Report"

about: "Report a bug with open-appsec"

labels: [bug]

---

**Checklist**

- Have you checked the open-appsec troubleshooting guides - https://docs.openappsec.io/troubleshooting/troubleshooting

- Yes / No

- Have you checked the existing issues and discussions on GitHub for the same issue

- Yes / No

- Have you checked the known limitations for the same issue - https://docs.openappsec.io/release-notes#limitations

- Yes / No

**Describe the bug**

A clear and concise description of what the bug is.

**To Reproduce**

Steps to reproduce the behavior:

1. Go to '...'

2. Run '...'

3. See error '...'

**Expected behavior**

A clear and concise description of what you expected to happen.

**Screenshots or Logs**

If applicable, add screenshots or logs to help explain the issue.

**Environment (please complete the following information):**

- open-appsec version:

- Deployment type (Docker, Kubernetes, etc.):

- OS:

**Additional context**

Add any other context about the problem here.

8 .github/ISSUE_TEMPLATE/config.yml (vendored, Normal file)

@@ -0,0 +1,8 @@

blank_issues_enabled: false

contact_links:

- name: "Documentation & Troubleshooting"

url: "https://docs.openappsec.io/"

about: "Check the documentation before submitting an issue."

- name: "Feature Requests & Discussions"

url: "https://github.com/openappsec/openappsec/discussions"

about: "Please open a discussion for feature requests."

17 .github/ISSUE_TEMPLATE/nginx_version_support.md (vendored, Normal file)

@@ -0,0 +1,17 @@

---

name: "Nginx Version Support Request"

about: "Request for a specific Nginx version to be supported"

---

**Nginx & OS Version:**

Which Nginx and OS version are you using?

**Output of nginx -V**

Share the output of nginx -V

**Expected Behavior:**

What do you expect to happen with this version?

**Checklist**

- Have you considered a Docker-based deployment? Find more information here: https://docs.openappsec.io/getting-started/start-with-docker

- Yes / No

22 README.md

@@ -6,7 +6,7 @@

[](https://bestpractices.coreinfrastructure.org/projects/6629)

# About

[open-appsec](https://www.openappsec.io) (openappsec.io) builds on machine learning to provide preemptive web app & API threat protection against OWASP-Top-10 and zero-day attacks. It can be deployed as an add-on to Kubernetes Ingress, NGINX, Envoy (soon), and API Gateways.

[open-appsec](https://www.openappsec.io) (openappsec.io) builds on machine learning to provide preemptive web app & API threat protection against OWASP-Top-10 and zero-day attacks. It can be deployed as an add-on to Linux, Docker or K8s deployments, on NGINX, Kong, APISIX, or Envoy.

The open-appsec engine learns how users normally interact with your web application. It then uses this information to automatically detect requests that fall outside of normal operations, and conducts further analysis to decide whether the request is malicious or not.

@@ -39,13 +39,13 @@ open-appsec can be managed using multiple methods:

* [Using SaaS Web Management](https://docs.openappsec.io/getting-started/using-the-web-ui-saas)

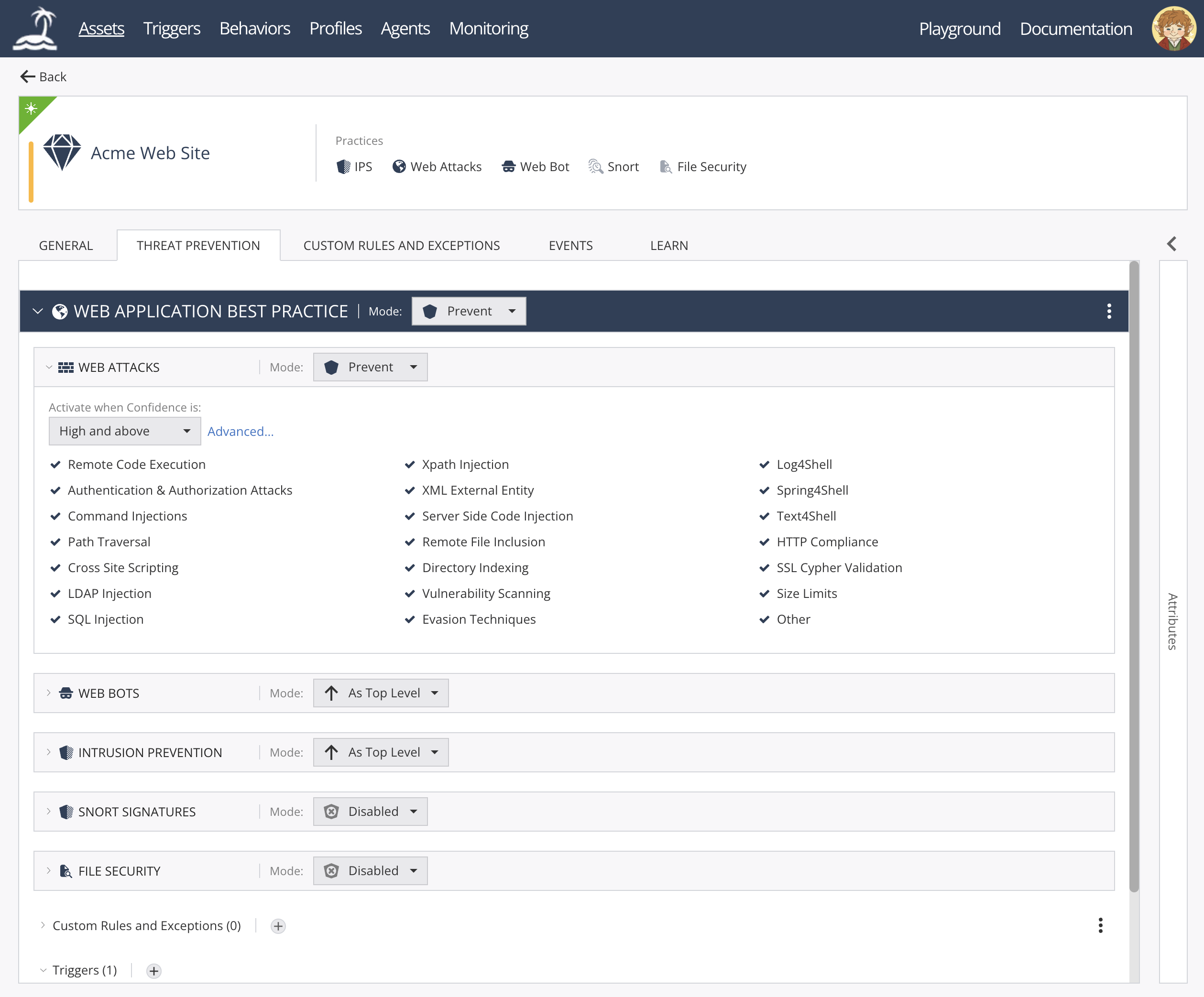

open-appsec Web UI:

<img width="1854" height="775" alt="image" src="https://github.com/user-attachments/assets/4c6f7b0a-14f3-4f02-9ab0-ddadc9979b8d" />

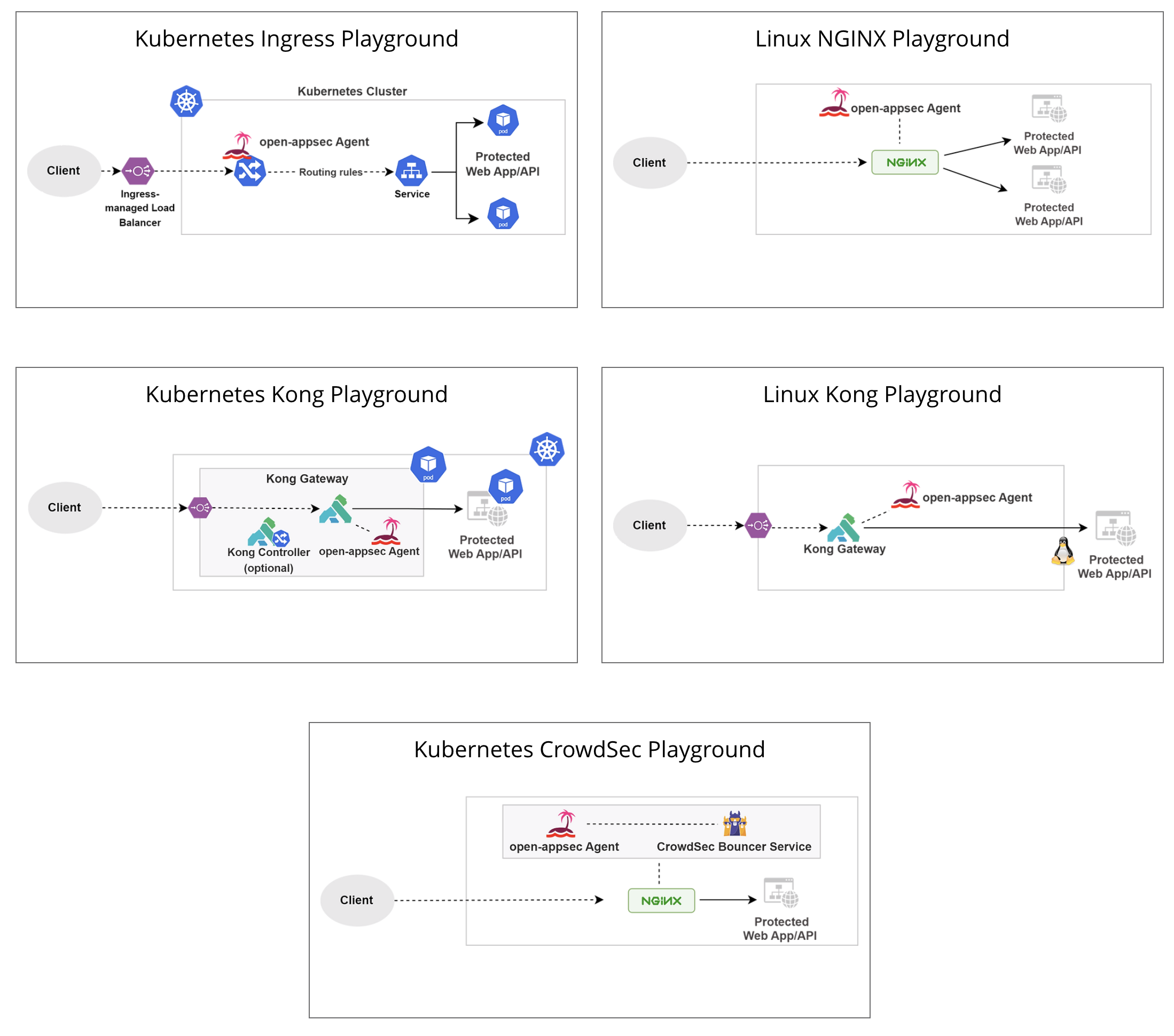

## Deployment Playgrounds (Virtual labs)

You can experiment with open-appsec using [Playgrounds](https://www.openappsec.io/playground)

<img width="781" height="878" alt="image" src="https://github.com/user-attachments/assets/0ddee216-5cdf-4288-8c41-cc28cfbf3297" />

# Resources

* [Project Website](https://openappsec.io)

@@ -54,21 +54,15 @@ You can experiment with open-appsec using [Playgrounds](https://www.openappsec.i

# Installation

For Kubernetes (NGINX Ingress) using the installer:

For Kubernetes (NGINX / Kong / APISIX / Istio) using Helm: follow [documentation](https://docs.openappsec.io/getting-started/start-with-kubernetes)

```bash
$ wget https://downloads.openappsec.io/open-appsec-k8s-install && chmod +x open-appsec-k8s-install
$ ./open-appsec-k8s-install
```

For Kubernetes (NGINX or Kong) using Helm: follow [documentation](https://docs.openappsec.io/getting-started/start-with-kubernetes/install-using-helm-ingress-nginx-and-kong) – use this method if you’ve built your own containers.

For Linux (NGINX or Kong) using the installer (list of supported/pre-compiled NGINX attachments is available [here](https://downloads.openappsec.io/packages/supported-nginx.txt)):

For Linux (NGINX / Kong / APISIX) using the installer (list of supported/pre-compiled NGINX attachments is available [here](https://downloads.openappsec.io/packages/supported-nginx.txt)):

```bash
$ wget https://downloads.openappsec.io/open-appsec-install && chmod +x open-appsec-install
$ ./open-appsec-install --auto
```

For the Kong Lua-based plugin, follow [documentation](https://docs.openappsec.io/getting-started/start-with-linux)

For Linux, if you’ve built your own package, use the following commands:

@@ -177,7 +171,7 @@ open-appsec code was audited by an independent third party in September-October

See the [full report](https://github.com/openappsec/openappsec/blob/main/LEXFO-CHP20221014-Report-Code_audit-OPEN-APPSEC-v1.2.pdf).

### Reporting security vulnerabilities

If you've found a vulnerability or a potential vulnerability in open-appsec, please let us know at securityalert@openappsec.io. We'll send a confirmation email to acknowledge your report within 24 hours, and we'll send an additional email when we've identified the issue positively or negatively.

If you've found a vulnerability or a potential vulnerability in open-appsec, please let us know at security-alert@openappsec.io. We'll send a confirmation email to acknowledge your report within 24 hours, and we'll send an additional email when we've identified the issue positively or negatively.

# License

@@ -95,6 +95,18 @@ getFailOpenHoldTimeout()

return conf_data.getNumericalValue("fail_open_hold_timeout");

}

unsigned int

getHoldVerdictPollingTime()

{

return conf_data.getNumericalValue("hold_verdict_polling_time");

}

unsigned int

getHoldVerdictRetries()

{

return conf_data.getNumericalValue("hold_verdict_retries");

}

unsigned int

getMaxSessionsPerMinute()

{

@@ -173,6 +185,12 @@ getReqBodySizeTrigger()

return conf_data.getNumericalValue("body_size_trigger");

}

unsigned int

getRemoveResServerHeader()

{

return conf_data.getNumericalValue("remove_server_header");

}

int

isIPAddress(c_str ip_str)

{

@@ -66,7 +66,10 @@ TEST_F(HttpAttachmentUtilTest, GetValidAttachmentConfiguration)

"\"static_resources_path\": \"" + static_resources_path + "\",\n"

"\"min_retries_for_verdict\": 1,\n"

"\"max_retries_for_verdict\": 3,\n"

"\"body_size_trigger\": 777\n"

"\"hold_verdict_retries\": 3,\n"

"\"hold_verdict_polling_time\": 1,\n"

"\"body_size_trigger\": 777,\n"

"\"remove_server_header\": 1\n"

"}\n";

ofstream valid_configuration_file(attachment_configuration_file_name);

valid_configuration_file << valid_configuration;

@@ -95,6 +98,9 @@ TEST_F(HttpAttachmentUtilTest, GetValidAttachmentConfiguration)

EXPECT_EQ(getReqBodySizeTrigger(), 777u);

EXPECT_EQ(getWaitingForVerdictThreadTimeout(), 75u);

EXPECT_EQ(getInspectionMode(), ngx_http_inspection_mode::BLOCKING_THREAD);

EXPECT_EQ(getRemoveResServerHeader(), 1u);

EXPECT_EQ(getHoldVerdictRetries(), 3u);

EXPECT_EQ(getHoldVerdictPollingTime(), 1u);

EXPECT_EQ(isDebugContext("1.2.3.4", "5.6.7.8", 80, "GET", "test", "/abc"), 1);

EXPECT_EQ(isDebugContext("1.2.3.9", "5.6.7.8", 80, "GET", "test", "/abc"), 0);
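The test pins `hold_verdict_retries` to 3 and `hold_verdict_polling_time` to 1, the same defaults passed to `getAttachmentConf<uint>()` in the configuration hunk. The diff does not show the code that consumes these two values, so the following is only a minimal sketch, assuming the retry count bounds how many times a held verdict is polled and the polling time is the pause between polls. `waitForHoldVerdict` and its callback are hypothetical names, not open-appsec APIs.

```cpp
#include <chrono>
#include <functional>
#include <thread>

// Hypothetical helper (not part of open-appsec): polls `is_verdict_ready`
// up to `retries` times, sleeping `polling_interval` between attempts.
// Assumption: hold_verdict_retries caps the number of polls and
// hold_verdict_polling_time is the pause between them.
// Returns the 1-based attempt on which a verdict arrived, or 0 if none did.
unsigned int
waitForHoldVerdict(
    const std::function<bool()> &is_verdict_ready,
    unsigned int retries,
    std::chrono::milliseconds polling_interval)
{
    for (unsigned int attempt = 1; attempt <= retries; ++attempt) {
        if (is_verdict_ready()) return attempt;
        // No need to sleep after the final unsuccessful poll.
        if (attempt < retries) std::this_thread::sleep_for(polling_interval);
    }
    return 0;
}
```

Taking the interval as `std::chrono::milliseconds` keeps the unit explicit; a caller holding the configured seconds could pass `std::chrono::seconds(getHoldVerdictPollingTime())`, which converts implicitly.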

@@ -1,4 +1,4 @@

install(FILES Dockerfile entry.sh install-cp-agent-intelligence-service.sh install-cp-crowdsec-aux.sh DESTINATION .)

install(FILES Dockerfile entry.sh install-cp-agent-intelligence-service.sh install-cp-crowdsec-aux.sh self_managed_openappsec_manifest.json DESTINATION .)

add_custom_command(

OUTPUT ${CMAKE_INSTALL_PREFIX}/agent-docker.img

@@ -1,5 +1,7 @@

FROM alpine

ENV OPENAPPSEC_NANO_AGENT=TRUE

RUN apk add --no-cache -u busybox

RUN apk add --no-cache -u zlib

RUN apk add --no-cache bash

@@ -11,8 +13,12 @@ RUN apk add --no-cache libunwind

RUN apk add --no-cache gdb

RUN apk add --no-cache libxml2

RUN apk add --no-cache pcre2

RUN apk add --no-cache ca-certificates

RUN apk add --update coreutils

COPY self_managed_openappsec_manifest.json /tmp/self_managed_openappsec_manifest.json

COPY install*.sh /nano-service-installers/

COPY entry.sh /entry.sh

@@ -6,6 +6,8 @@ HTTP_TRANSACTION_HANDLER_SERVICE="install-cp-nano-service-http-transaction-handl

ATTACHMENT_REGISTRATION_SERVICE="install-cp-nano-attachment-registration-manager.sh"

ORCHESTRATION_INSTALLATION_SCRIPT="install-cp-nano-agent.sh"

CACHE_INSTALLATION_SCRIPT="install-cp-nano-agent-cache.sh"

PROMETHEUS_INSTALLATION_SCRIPT="install-cp-nano-service-prometheus.sh"

NGINX_CENTRAL_MANAGER_INSTALLATION_SCRIPT="install-cp-nano-central-nginx-manager.sh"

var_fog_address=

var_proxy=

@@ -13,6 +15,21 @@ var_mode=

var_token=

var_ignore=

init=

active_watchdog_pid=

cleanup() {

local signal="$1"

echo "[$(date '+%Y-%m-%d %H:%M:%S')] Signal ${signal} was received, exiting gracefully..." >&2

if [ -n "${active_watchdog_pid}" ] && ps -p ${active_watchdog_pid} > /dev/null 2>&1; then

kill -TERM ${active_watchdog_pid} 2>/dev/null || true

wait ${active_watchdog_pid} 2>/dev/null || true

fi

echo "Cleanup completed. Exiting now." >&2

exit 0

}

trap 'cleanup SIGTERM' SIGTERM

trap 'cleanup SIGINT' SIGINT

if [ ! -f /nano-service-installers/$ORCHESTRATION_INSTALLATION_SCRIPT ]; then

echo "Error: agent installation package doesn't exist."

@@ -81,6 +98,14 @@ fi

/nano-service-installers/$CACHE_INSTALLATION_SCRIPT --install

/nano-service-installers/$HTTP_TRANSACTION_HANDLER_SERVICE --install

if [ "$PROMETHEUS" == "true" ]; then

/nano-service-installers/$PROMETHEUS_INSTALLATION_SCRIPT --install

fi

if [ "$CENTRAL_NGINX_MANAGER" == "true" ]; then

/nano-service-installers/$NGINX_CENTRAL_MANAGER_INSTALLATION_SCRIPT --install

fi

if [ "$CROWDSEC_ENABLED" == "true" ]; then

/nano-service-installers/$INTELLIGENCE_INSTALLATION_SCRIPT --install

/nano-service-installers/$CROWDSEC_INSTALLATION_SCRIPT --install

@@ -93,25 +118,16 @@ if [ -f "$FILE" ]; then

fi

touch /etc/cp/watchdog/wd.startup

/etc/cp/watchdog/cp-nano-watchdog >/dev/null 2>&1 &

active_watchdog_pid=$!

while true; do

if [ -z "$init" ]; then

init=true

/etc/cp/watchdog/cp-nano-watchdog >/dev/null 2>&1 &

sleep 5

active_watchdog_pid=$(pgrep -f -x -o "/bin/bash /etc/cp/watchdog/cp-nano-watchdog")

fi

current_watchdog_pid=$(pgrep -f -x -o "/bin/bash /etc/cp/watchdog/cp-nano-watchdog")

if [ ! -f /tmp/restart_watchdog ] && [ "$current_watchdog_pid" != "$active_watchdog_pid" ]; then

echo "Error: Watchdog exited abnormally"

exit 1

elif [ -f /tmp/restart_watchdog ]; then

if [ -f /tmp/restart_watchdog ]; then

rm -f /tmp/restart_watchdog

kill -9 "$(pgrep -f -x -o "/bin/bash /etc/cp/watchdog/cp-nano-watchdog")"

/etc/cp/watchdog/cp-nano-watchdog >/dev/null 2>&1 &

sleep 5

active_watchdog_pid=$(pgrep -f -x -o "/bin/bash /etc/cp/watchdog/cp-nano-watchdog")

kill -9 ${active_watchdog_pid}

fi

if [ ! "$(ps -f | grep cp-nano-watchdog | grep ${active_watchdog_pid})" ]; then

/etc/cp/watchdog/cp-nano-watchdog >/dev/null 2>&1 &

active_watchdog_pid=$!

fi

sleep 5

done

@@ -7,3 +7,4 @@ add_subdirectory(pending_key)

add_subdirectory(utils)

add_subdirectory(attachment-intakers)

add_subdirectory(security_apps)

add_subdirectory(nginx_message_reader)

@@ -31,10 +31,12 @@

#include <stdarg.h>

#include <boost/range/iterator_range.hpp>

#include <boost/algorithm/string.hpp>

#include <boost/regex.hpp>

#include "nginx_attachment_config.h"

#include "nginx_attachment_opaque.h"

#include "generic_rulebase/evaluators/trigger_eval.h"

#include "nginx_parser.h"

#include "i_instance_awareness.h"

#include "common.h"

@@ -129,6 +131,7 @@ class NginxAttachment::Impl

Singleton::Provide<I_StaticResourcesHandler>::From<NginxAttachment>

{

static constexpr auto INSPECT = ngx_http_cp_verdict_e::TRAFFIC_VERDICT_INSPECT;

static constexpr auto LIMIT_RESPONSE_HEADERS = ngx_http_cp_verdict_e::LIMIT_RESPONSE_HEADERS;

static constexpr auto ACCEPT = ngx_http_cp_verdict_e::TRAFFIC_VERDICT_ACCEPT;

static constexpr auto DROP = ngx_http_cp_verdict_e::TRAFFIC_VERDICT_DROP;

static constexpr auto INJECT = ngx_http_cp_verdict_e::TRAFFIC_VERDICT_INJECT;

@@ -260,6 +263,22 @@ public:

);

}

const char* ignored_headers_env = getenv("SAAS_IGNORED_UPSTREAM_HEADERS");

if (ignored_headers_env) {

string ignored_headers_str = ignored_headers_env;

ignored_headers_str = NGEN::Strings::trim(ignored_headers_str);

if (!ignored_headers_str.empty()) {

dbgInfo(D_HTTP_MANAGER)

<< "Ignoring SAAS_IGNORED_UPSTREAM_HEADERS environment variable: "

<< ignored_headers_str;

vector<string> ignored_headers_vec;

boost::split(ignored_headers_vec, ignored_headers_str, boost::is_any_of(";"));

for (const string &header : ignored_headers_vec) ignored_headers.insert(header);

}

}

dbgInfo(D_NGINX_ATTACHMENT) << "Successfully initialized NGINX Attachment";

}
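`boost::split` with `boost::is_any_of(";")` keeps every token as-is, and the code above trims only the whole variable, so a value such as `X-A; X-B;` would store ` X-B` (with a leading space) and an empty string in `ignored_headers`. A standard-library sketch that trims each token and skips empty ones might look like this; `parseIgnoredHeaders` and `trimCopy` are hypothetical helpers, not part of the patch.

```cpp
#include <cctype>
#include <sstream>
#include <string>
#include <unordered_set>

// Hypothetical helper: returns `s` without leading/trailing ASCII whitespace.
static std::string
trimCopy(const std::string &s)
{
    std::string::size_type begin = 0;
    std::string::size_type end = s.size();
    while (begin < end && std::isspace(static_cast<unsigned char>(s[begin]))) ++begin;
    while (end > begin && std::isspace(static_cast<unsigned char>(s[end - 1]))) --end;
    return s.substr(begin, end - begin);
}

// Hypothetical helper: splits a semicolon-separated list of header names
// (e.g. the value of SAAS_IGNORED_UPSTREAM_HEADERS) into a set.
// Each token is trimmed and empty tokens are dropped, so "X-A; X-B;"
// yields {"X-A", "X-B"}.
std::unordered_set<std::string>
parseIgnoredHeaders(const char *env_value)
{
    std::unordered_set<std::string> headers;
    if (env_value == nullptr) return headers;

    std::istringstream stream(env_value);
    std::string token;
    while (std::getline(stream, token, ';')) {
        std::string name = trimCopy(token);
        if (!name.empty()) headers.insert(name);
    }
    return headers;
}
```

Names are compared exactly here, as with `ignored_headers.find(hdr_key)` in the parser; because HTTP field names are case-insensitive, normalizing case on both sides may be worth considering if the configured spelling can differ from what NGINX forwards.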

@@ -1034,7 +1053,11 @@ private:

case ChunkType::REQUEST_START:

return handleStartTransaction(data, opaque);

case ChunkType::REQUEST_HEADER:

return handleMultiModifiableChunks(NginxParser::parseRequestHeaders(data), "request header", true);

return handleMultiModifiableChunks(

NginxParser::parseRequestHeaders(data, ignored_headers),

"request header",

true

);

case ChunkType::REQUEST_BODY:

return handleModifiableChunk(NginxParser::parseRequestBody(data), "request body", true);

case ChunkType::REQUEST_END: {

@@ -1125,10 +1148,18 @@ private:

handleCustomWebResponse(

SharedMemoryIPC *ipc,

vector<const char *> &verdict_data,

vector<uint16_t> &verdict_data_sizes)

vector<uint16_t> &verdict_data_sizes,

string web_user_response_id)

{

ngx_http_cp_web_response_data_t web_response_data;

ScopedContext ctx;

if (web_user_response_id != "") {

dbgTrace(D_NGINX_ATTACHMENT)

<< "web user response ID registered in context: "

<< web_user_response_id;

set<string> triggers_set{web_user_response_id};

ctx.registerValue<set<GenericConfigId>>(TriggerMatcher::ctx_key, triggers_set);

}

WebTriggerConf web_trigger_conf = getConfigurationWithDefault<WebTriggerConf>(

WebTriggerConf::default_trigger_conf,

"rulebase",

@@ -1250,7 +1281,7 @@ private:

if (verdict.getVerdict() == DROP) {

nginx_attachment_event.addTrafficVerdictCounter(nginxAttachmentEvent::trafficVerdict::DROP);

verdict_to_send.modification_count = 1;

return handleCustomWebResponse(ipc, verdict_fragments, fragments_sizes);

return handleCustomWebResponse(ipc, verdict_fragments, fragments_sizes, verdict.getWebUserResponseID());

}

if (verdict.getVerdict() == ACCEPT) {

@@ -1476,11 +1507,17 @@ private:

opaque.activateContext();

FilterVerdict verdict = handleChunkedData(*chunked_data_type, inspection_data, opaque);

bool is_header =

*chunked_data_type == ChunkType::REQUEST_HEADER ||

*chunked_data_type == ChunkType::RESPONSE_HEADER ||

*chunked_data_type == ChunkType::CONTENT_LENGTH;

if (verdict.getVerdict() == LIMIT_RESPONSE_HEADERS) {

handleVerdictResponse(verdict, attachment_ipc, transaction_data->session_id, is_header);

popData(attachment_ipc);

verdict = FilterVerdict(INSPECT);

}

handleVerdictResponse(verdict, attachment_ipc, transaction_data->session_id, is_header);

bool is_final_verdict = verdict.getVerdict() == ACCEPT ||

@@ -1593,6 +1630,8 @@ private:

return "INJECT";

case INSPECT:

return "INSPECT";

case LIMIT_RESPONSE_HEADERS:

return "LIMIT_RESPONSE_HEADERS";

case IRRELEVANT:

return "IRRELEVANT";

case RECONF:

@@ -1814,6 +1853,7 @@ private:

HttpAttachmentConfig attachment_config;

I_MainLoop::RoutineID attachment_routine_id = 0;

bool traffic_indicator = false;

unordered_set<string> ignored_headers;

// Interfaces

I_Socket *i_socket = nullptr;

@@ -203,6 +203,13 @@ HttpAttachmentConfig::setFailOpenTimeout()

"NGINX wait thread timeout msec"

));

conf_data.setNumericalValue("remove_server_header", getAttachmentConf<uint>(

0,

"agent.removeServerHeader.nginxModule",

"HTTP manager",

"Response server header removal"

));

uint inspection_mode = getAttachmentConf<uint>(

static_cast<uint>(ngx_http_inspection_mode_e::NON_BLOCKING_THREAD),

"agent.inspectionMode.nginxModule",

@@ -233,6 +240,21 @@ HttpAttachmentConfig::setRetriesForVerdict()

"Max retries for verdict"

));

conf_data.setNumericalValue("hold_verdict_retries", getAttachmentConf<uint>(

3,

"agent.retriesForHoldVerdict.nginxModule",

"HTTP manager",

"Retries for hold verdict"

));

conf_data.setNumericalValue("hold_verdict_polling_time", getAttachmentConf<uint>(

1,

"agent.holdVerdictPollingInterval.nginxModule",

"HTTP manager",

"Hold verdict polling interval seconds"

));

conf_data.setNumericalValue("body_size_trigger", getAttachmentConf<uint>(

200000,

"agent.reqBodySizeTrigger.nginxModule",

@@ -19,12 +19,15 @@

#include "config.h"

#include "virtual_modifiers.h"

#include "agent_core_utilities.h"

using namespace std;

using namespace boost::uuids;

USE_DEBUG_FLAG(D_HTTP_MANAGER);

extern bool is_keep_alive_ctx;

NginxAttachmentOpaque::NginxAttachmentOpaque(HttpTransactionData _transaction_data)

:

TableOpaqueSerialize<NginxAttachmentOpaque>(this),

@@ -67,6 +70,12 @@ NginxAttachmentOpaque::NginxAttachmentOpaque(HttpTransactionData _transaction_da

ctx.registerValue(HttpTransactionData::uri_query_decoded, decoded_url.substr(question_mark_location + 1));

}

ctx.registerValue(HttpTransactionData::uri_path_decoded, decoded_url);

// Register waf_tag from transaction data if available

const std::string& waf_tag = transaction_data.getWafTag();

if (!waf_tag.empty()) {

ctx.registerValue(HttpTransactionData::waf_tag_ctx, waf_tag);

}

}

NginxAttachmentOpaque::~NginxAttachmentOpaque()

@@ -119,3 +128,47 @@ NginxAttachmentOpaque::setSavedData(const string &name, const string &data, EnvK

saved_data[name] = data;

ctx.registerValue(name, data, log_ctx);

}

bool

NginxAttachmentOpaque::setKeepAliveCtx(const string &hdr_key, const string &hdr_val)

{

if (!is_keep_alive_ctx) return false;

static pair<string, string> keep_alive_hdr;

static bool keep_alive_hdr_initialized = false;

if (keep_alive_hdr_initialized) {

if (!keep_alive_hdr.first.empty() && hdr_key == keep_alive_hdr.first && hdr_val == keep_alive_hdr.second) {

dbgTrace(D_HTTP_MANAGER) << "Registering keep alive context";

ctx.registerValue("keep_alive_request_ctx", true);

return true;

}

return false;

}

const char* saas_keep_alive_hdr_name_env = getenv("SAAS_KEEP_ALIVE_HDR_NAME");

if (saas_keep_alive_hdr_name_env) {

keep_alive_hdr.first = NGEN::Strings::trim(saas_keep_alive_hdr_name_env);

dbgInfo(D_HTTP_MANAGER) << "Using SAAS_KEEP_ALIVE_HDR_NAME environment variable: " << keep_alive_hdr.first;

}

if (!keep_alive_hdr.first.empty()) {

const char* saas_keep_alive_hdr_value_env = getenv("SAAS_KEEP_ALIVE_HDR_VALUE");

if (saas_keep_alive_hdr_value_env) {

keep_alive_hdr.second = NGEN::Strings::trim(saas_keep_alive_hdr_value_env);

dbgInfo(D_HTTP_MANAGER)

<< "Using SAAS_KEEP_ALIVE_HDR_VALUE environment variable: "

<< keep_alive_hdr.second;

}

if (!keep_alive_hdr.second.empty() && (hdr_key == keep_alive_hdr.first && hdr_val == keep_alive_hdr.second)) {

dbgTrace(D_HTTP_MANAGER) << "Registering keep alive context";

ctx.registerValue("keep_alive_request_ctx", true);

keep_alive_hdr_initialized = true;

return true;

}

}

keep_alive_hdr_initialized = true;

return false;

}
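`setKeepAliveCtx` reads `SAAS_KEEP_ALIVE_HDR_NAME` and `SAAS_KEEP_ALIVE_HDR_VALUE` on its first call and caches them in function-level statics guarded by `keep_alive_hdr_initialized`. A function-local static initialized from a lambda gives the same read-once behavior without a separate flag, and since C++11 the language guarantees that such an initialization runs once even if several threads call the function concurrently. The sketch below assumes only the header match is needed; `isKeepAliveHeader` and `envOrEmpty` are hypothetical names, and the trimming and context registration done in the diff are left out.

```cpp
#include <cstdlib>
#include <string>
#include <utility>

// Hypothetical helper: value of environment variable `name`, or "" if unset.
static std::string
envOrEmpty(const char *name)
{
    const char *value = std::getenv(name);
    return value != nullptr ? std::string(value) : std::string();
}

// Hypothetical sketch: true when (hdr_key, hdr_val) equals the keep-alive
// header configured through the two environment variables. Both variables
// are read once, on the first call, by the static's initializer.
bool
isKeepAliveHeader(const std::string &hdr_key, const std::string &hdr_val)
{
    static const std::pair<std::string, std::string> keep_alive_hdr = [] {
        return std::make_pair(
            envOrEmpty("SAAS_KEEP_ALIVE_HDR_NAME"),
            envOrEmpty("SAAS_KEEP_ALIVE_HDR_VALUE")
        );
    }();

    // Either variable missing means keep-alive matching is disabled.
    if (keep_alive_hdr.first.empty() || keep_alive_hdr.second.empty()) return false;
    return hdr_key == keep_alive_hdr.first && hdr_val == keep_alive_hdr.second;
}
```

As in the diff, the values are cached after the first call, so later changes to the environment have no effect.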

@@ -85,6 +85,7 @@ public:

EnvKeyAttr::LogSection log_ctx = EnvKeyAttr::LogSection::NONE

);

void setApplicationState(const ApplicationState &app_state) { application_state = app_state; }

bool setKeepAliveCtx(const std::string &hdr_key, const std::string &hdr_val);

private:

CompressionStream *response_compression_stream;

@@ -29,6 +29,7 @@ USE_DEBUG_FLAG(D_NGINX_ATTACHMENT_PARSER);

Buffer NginxParser::tenant_header_key = Buffer();

static const Buffer proxy_ip_header_key("X-Forwarded-For", 15, Buffer::MemoryType::STATIC);

static const Buffer source_ip("sourceip", 8, Buffer::MemoryType::STATIC);

bool is_keep_alive_ctx = getenv("SAAS_KEEP_ALIVE_HDR_NAME") != nullptr;

map<Buffer, CompressionType> NginxParser::content_encodings = {

{Buffer("identity"), CompressionType::NO_COMPRESSION},

@@ -177,37 +178,70 @@ getActivetenantAndProfile(const string &str, const string &deli = ",")

}

Maybe<vector<HttpHeader>>

NginxParser::parseRequestHeaders(const Buffer &data)

NginxParser::parseRequestHeaders(const Buffer &data, const unordered_set<string> &ignored_headers)

{

auto parsed_headers = genHeaders(data);

if (!parsed_headers.ok()) return parsed_headers.passErr();

auto maybe_parsed_headers = genHeaders(data);

if (!maybe_parsed_headers.ok()) return maybe_parsed_headers.passErr();

auto i_transaction_table = Singleton::Consume<I_TableSpecific<SessionID>>::by<NginxAttachment>();

auto parsed_headers = maybe_parsed_headers.unpack();

NginxAttachmentOpaque &opaque = i_transaction_table->getState<NginxAttachmentOpaque>();

for (const HttpHeader &header : *parsed_headers) {

if (is_keep_alive_ctx || !ignored_headers.empty()) {

bool is_last_header_removed = false;

parsed_headers.erase(

remove_if(

parsed_headers.begin(),

parsed_headers.end(),

[&opaque, &is_last_header_removed, &ignored_headers](const HttpHeader &header)

{

string hdr_key = static_cast<string>(header.getKey());

string hdr_val = static_cast<string>(header.getValue());

if (

opaque.setKeepAliveCtx(hdr_key, hdr_val)

|| ignored_headers.find(hdr_key) != ignored_headers.end()

) {

dbgTrace(D_NGINX_ATTACHMENT_PARSER) << "Header was removed from headers list: " << hdr_key;

if (header.isLastHeader()) {

dbgTrace(D_NGINX_ATTACHMENT_PARSER) << "Last header was removed from headers list";

is_last_header_removed = true;

}

return true;

}

return false;

}

),

parsed_headers.end()

);

if (is_last_header_removed) {

dbgTrace(D_NGINX_ATTACHMENT_PARSER) << "Adjusting last header flag";

if (!parsed_headers.empty()) parsed_headers.back().setIsLastHeader();

}

}

for (const HttpHeader &header : parsed_headers) {

auto source_identifiers = getConfigurationWithDefault<UsersAllIdentifiersConfig>(

UsersAllIdentifiersConfig(),

"rulebase",

"usersIdentifiers"

);

source_identifiers.parseRequestHeaders(header);

NginxAttachmentOpaque &opaque = i_transaction_table->getState<NginxAttachmentOpaque>();

opaque.addToSavedData(

HttpTransactionData::req_headers,

static_cast<string>(header.getKey()) + ": " + static_cast<string>(header.getValue()) + "\r\n"

);

if (NginxParser::tenant_header_key == header.getKey()) {

const auto &header_key = header.getKey();

if (NginxParser::tenant_header_key == header_key) {

dbgDebug(D_NGINX_ATTACHMENT_PARSER)

<< "Identified active tenant header. Key: "

<< dumpHex(header.getKey())

<< dumpHex(header_key)

<< ", Value: "

<< dumpHex(header.getValue());

auto active_tenant_and_profile = getActivetenantAndProfile(header.getValue());

opaque.setSessionTenantAndProfile(active_tenant_and_profile[0], active_tenant_and_profile[1]);

} else if (proxy_ip_header_key == header.getKey()) {

} else if (proxy_ip_header_key == header_key) {

source_identifiers.setXFFValuesToOpaqueCtx(header, UsersAllIdentifiersConfig::ExtractType::PROXYIP);

}

}

|

||||
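The new `parseRequestHeaders` overload drops keep-alive and ignored headers in place with the erase/`remove_if` idiom, then moves the "last header" flag if the original last header was among those removed. The sketch below illustrates only that bookkeeping, under stated assumptions: `Header` and `removeIgnoredHeaders` are hypothetical stand-ins (not the project's `HttpHeader` API), and the keep-alive check done through `opaque.setKeepAliveCtx` is left out.

```cpp
#include <algorithm>
#include <cassert>
#include <string>
#include <unordered_set>
#include <vector>

// Hypothetical stand-in for HttpHeader: just enough state to show the
// "last header" bookkeeping.
struct Header {
    std::string key;
    std::string value;
    bool is_last = false;
};

// Erases every header whose key is in `ignored` (erase/remove_if idiom).
// If the erased headers included the one flagged as last, the flag is moved
// to the new final element so consumers still see an end-of-headers marker.
void removeIgnoredHeaders(std::vector<Header> &headers, const std::unordered_set<std::string> &ignored)
{
    bool last_removed = false;
    headers.erase(
        std::remove_if(
            headers.begin(),
            headers.end(),
            [&](const Header &h) {
                if (ignored.count(h.key) == 0) return false;
                if (h.is_last) last_removed = true;
                return true;
            }
        ),
        headers.end()
    );
    if (last_removed && !headers.empty()) headers.back().is_last = true;
}
```

The lambda captures `last_removed` by reference, so the flag is still observed even if `std::remove_if` copies the predicate internally.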

@@ -345,12 +379,15 @@ NginxParser::parseResponseBody(const Buffer &raw_response_body, CompressionStrea
Maybe<CompressionType>
NginxParser::parseContentEncoding(const vector<HttpHeader> &headers)
{
static const Buffer content_encoding_header_key("Content-Encoding");
dbgFlow(D_NGINX_ATTACHMENT_PARSER) << "Parsing \"Content-Encoding\" header";
static const Buffer content_encoding_header_key("content-encoding");

auto it = find_if(
headers.begin(),
headers.end(),
[&] (const HttpHeader &http_header) { return http_header.getKey() == content_encoding_header_key; }
[&] (const HttpHeader &http_header) {
return http_header.getKey().isEqualLowerCase(content_encoding_header_key);
}
);
if (it == headers.end()) {
dbgTrace(D_NGINX_ATTACHMENT_PARSER)
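HTTP field names are case-insensitive, which is presumably why this hunk replaces the exact `==` comparison against "Content-Encoding" with `isEqualLowerCase` against a lowercase key. A minimal `std::string` sketch of the same lookup follows; `equalsLowerCase`, `HeaderList` and `findContentEncoding` are hypothetical, and it assumes `Buffer::isEqualLowerCase` behaves like lowercasing the header key and comparing it to an already-lowercase literal.

```cpp
#include <algorithm>
#include <cassert>
#include <cctype>
#include <string>
#include <utility>
#include <vector>

// True when `key` matches `lower_literal` ignoring ASCII case.
// `lower_literal` must already be lowercase, like "content-encoding".
bool equalsLowerCase(const std::string &key, const std::string &lower_literal)
{
    return key.size() == lower_literal.size() &&
        std::equal(key.begin(), key.end(), lower_literal.begin(), [](char a, char b) {
            return std::tolower(static_cast<unsigned char>(a)) == static_cast<unsigned char>(b);
        });
}

using HeaderList = std::vector<std::pair<std::string, std::string>>;

// Hypothetical lookup: position of the first Content-Encoding header, or -1.
int findContentEncoding(const HeaderList &headers)
{
    auto it = std::find_if(headers.begin(), headers.end(), [](const std::pair<std::string, std::string> &h) {
        return equalsLowerCase(h.first, "content-encoding");
    });
    return it == headers.end() ? -1 : static_cast<int>(it - headers.begin());
}
```

Keeping the literal lowercase means only one side is folded per comparison.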

@@ -28,7 +28,10 @@ public:
static Maybe<HttpTransactionData> parseStartTrasaction(const Buffer &data);
static Maybe<ResponseCode> parseResponseCode(const Buffer &data);
static Maybe<uint64_t> parseContentLength(const Buffer &data);
static Maybe<std::vector<HttpHeader>> parseRequestHeaders(const Buffer &data);
static Maybe<std::vector<HttpHeader>> parseRequestHeaders(
const Buffer &data,
const std::unordered_set<std::string> &ignored_headers
);
static Maybe<std::vector<HttpHeader>> parseResponseHeaders(const Buffer &data);
static Maybe<HttpBody> parseRequestBody(const Buffer &data);
static Maybe<HttpBody> parseResponseBody(const Buffer &raw_response_body, CompressionStream *compression_stream);

@@ -285,17 +285,21 @@ Maybe<string>
UsersAllIdentifiersConfig::parseXForwardedFor(const string &str, ExtractType type) const
{
vector<string> header_values = split(str);

if (header_values.empty()) return genError("No IP found in the xff header list");

vector<string> xff_values = getHeaderValuesFromConfig("x-forwarded-for");
vector<CIDRSData> cidr_values(xff_values.begin(), xff_values.end());
string last_valid_ip;

for (auto it = header_values.rbegin(); it != header_values.rend() - 1; ++it) {
if (!IPAddr::createIPAddr(*it).ok()) {
dbgWarning(D_NGINX_ATTACHMENT_PARSER) << "Invalid IP address found in the xff header IPs list: " << *it;
return genError("Invalid IP address");
if (last_valid_ip.empty()) {
return genError("Invalid IP address");
}
return last_valid_ip;
}
last_valid_ip = *it;
if (type == ExtractType::PROXYIP) continue;
if (!isIpTrusted(*it, cidr_values)) {
dbgDebug(D_NGINX_ATTACHMENT_PARSER) << "Found untrusted IP in the xff header IPs list: " << *it;

@@ -307,7 +311,10 @@ UsersAllIdentifiersConfig::parseXForwardedFor(const string &str, ExtractType typ
dbgWarning(D_NGINX_ATTACHMENT_PARSER)
<< "Invalid IP address found in the xff header IPs list: "
<< header_values[0];
return genError("Invalid IP address");
if (last_valid_ip.empty()) {
return genError("No Valid Ip address was found");
}
return last_valid_ip;
}

return header_values[0];
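The reworked `parseXForwardedFor` walks the X-Forwarded-For list from right to left, remembers the last valid address, and falls back to it when an unparsable entry appears instead of failing outright. The sketch below models that walk under simplifying assumptions: trusted proxies are an exact-match set rather than the CIDR ranges checked by `isIpTrusted`, the `PROXYIP` extract type is ignored, returning the first untrusted address is assumed (that branch is cut off in the hunk), and `isValidIp`/`clientIpFromXff` are hypothetical names that use POSIX `inet_pton` for validation.

```cpp
#include <arpa/inet.h>
#include <sys/socket.h>
#include <cassert>
#include <optional>
#include <string>
#include <unordered_set>
#include <vector>

// True if `s` parses as an IPv4 or IPv6 literal (POSIX inet_pton).
bool isValidIp(const std::string &s)
{
    unsigned char buf[16];  // large enough for an IPv6 address
    return inet_pton(AF_INET, s.c_str(), buf) == 1 || inet_pton(AF_INET6, s.c_str(), buf) == 1;
}

// Walks X-Forwarded-For entries right to left (closest proxy first).
// Trusted proxies are skipped; the first untrusted address is returned.
// An invalid entry stops the walk and yields the last valid address seen,
// or nothing if there is none. If every entry is trusted, the left-most
// entry is returned.
std::optional<std::string> clientIpFromXff(
    const std::vector<std::string> &xff,
    const std::unordered_set<std::string> &trusted)
{
    if (xff.empty()) return std::nullopt;
    std::string last_valid_ip;
    for (auto it = xff.rbegin(); it != xff.rend(); ++it) {
        if (!isValidIp(*it)) {
            if (last_valid_ip.empty()) return std::nullopt;
            return last_valid_ip;
        }
        last_valid_ip = *it;
        if (trusted.count(*it) == 0) return *it;
    }
    return xff.front();
}
```

Here `std::optional` stands in for the `Maybe<string>`/`genError` pair used by the real code.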

@@ -359,6 +366,24 @@ UsersAllIdentifiersConfig::setCustomHeaderToOpaqueCtx(const HttpHeader &header)
return;
}

void
UsersAllIdentifiersConfig::setWafTagValuesToOpaqueCtx(const HttpHeader &header) const
{
auto i_transaction_table = Singleton::Consume<I_TableSpecific<SessionID>>::by<NginxAttachment>();
if (!i_transaction_table || !i_transaction_table->hasState<NginxAttachmentOpaque>()) {
dbgDebug(D_NGINX_ATTACHMENT_PARSER) << "Can't get the transaction table";
return;
}

NginxAttachmentOpaque &opaque = i_transaction_table->getState<NginxAttachmentOpaque>();
opaque.setSavedData(HttpTransactionData::waf_tag_ctx, static_cast<string>(header.getValue()));

dbgDebug(D_NGINX_ATTACHMENT_PARSER)
<< "Added waf tag to context: "
<< static_cast<string>(header.getValue());
return;
}

Maybe<string>
UsersAllIdentifiersConfig::parseCookieElement(
const string::const_iterator &start,

@@ -142,7 +142,7 @@ private:
if (temp_params_list.size() == 1) {
Maybe<IPAddr> maybe_ip = IPAddr::createIPAddr(temp_params_list[0]);
if (!maybe_ip.ok()) return genError("Could not create IP address, " + maybe_ip.getErr());
IpAddress addr = move(ConvertToIpAddress(maybe_ip.unpackMove()));
IpAddress addr = ConvertToIpAddress(maybe_ip.unpackMove());

return move(IPRange{.start = addr, .end = addr});
}

@@ -157,11 +157,11 @@ private:
IPAddr max_addr = maybe_ip_max.unpackMove();
if (min_addr > max_addr) return genError("Could not create ip range - start greater then end");

IpAddress addr_min = move(ConvertToIpAddress(move(min_addr)));
IpAddress addr_max = move(ConvertToIpAddress(move(max_addr)));
IpAddress addr_min = ConvertToIpAddress(move(min_addr));
IpAddress addr_max = ConvertToIpAddress(move(max_addr));
if (addr_max.ip_type != addr_min.ip_type) return genError("Range IP's type does not match");

return move(IPRange{.start = move(addr_min), .end = move(addr_max)});
return IPRange{.start = move(addr_min), .end = move(addr_max)};
}

return genError("Illegal range received: " + range);
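Both IP-range hunks strip `std::move` from around temporaries (`move(ConvertToIpAddress(...))`, `return move(IPRange{...})`). Moving a prvalue does not help: since C++17, initializing or returning an object from a prvalue of the same type is guaranteed to elide the copy/move, while `std::move` turns the prvalue into an xvalue and forces a real move. The hypothetical `Tracked` type below counts constructions to make the difference visible (assumes C++17 or later).

```cpp
#include <cassert>
#include <utility>

// Counts how many times instances are copy- or move-constructed.
struct Tracked {
    static int moves;
    static int copies;
    Tracked() = default;
    Tracked(const Tracked &) { ++copies; }
    Tracked(Tracked &&) noexcept { ++moves; }
};
int Tracked::moves = 0;
int Tracked::copies = 0;

// Returning a prvalue: C++17 guarantees elision, so no copy/move runs.
Tracked makeDirect() { return Tracked{}; }

// std::move makes the temporary an xvalue, which disables elision and
// forces one move construction (Clang flags this with -Wpessimizing-move).
Tracked makeWithMove() { return std::move(Tracked{}); }
```

The same reasoning covers the `IpAddress addr = move(ConvertToIpAddress(...))` initializations: dropping `move` lets the result be constructed directly in place.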

@@ -15,19 +15,18 @@

#include <string>
#include <map>
#include <sys/stat.h>
#include <climits>
#include <unordered_map>
#include <boost/range/iterator_range.hpp>
#include <unordered_set>
#include <boost/algorithm/string.hpp>
#include <fstream>
#include <algorithm>

#include "common.h"
#include "config.h"
#include "table_opaque.h"
#include "http_manager_opaque.h"
#include "log_generator.h"
#include "http_inspection_events.h"
#include "agent_core_utilities.h"

USE_DEBUG_FLAG(D_HTTP_MANAGER);

@@ -38,6 +37,7 @@ operator<<(ostream &os, const EventVerdict &event)
{
switch (event.getVerdict()) {
case ngx_http_cp_verdict_e::TRAFFIC_VERDICT_INSPECT: return os << "Inspect";
case ngx_http_cp_verdict_e::LIMIT_RESPONSE_HEADERS: return os << "Limit Response Headers";
case ngx_http_cp_verdict_e::TRAFFIC_VERDICT_ACCEPT: return os << "Accept";
case ngx_http_cp_verdict_e::TRAFFIC_VERDICT_DROP: return os << "Drop";
case ngx_http_cp_verdict_e::TRAFFIC_VERDICT_INJECT: return os << "Inject";

@@ -94,12 +94,14 @@ public:
ctx.registerValue(app_sec_marker_key, i_transaction_table->keyToString(), EnvKeyAttr::LogSection::MARKER);

HttpManagerOpaque &state = i_transaction_table->getState<HttpManagerOpaque>();
string event_key = static_cast<string>(event.getKey());
if (event_key == getProfileAgentSettingWithDefault<string>("", "agent.customHeaderValueLogging")) {

const auto &custom_header = getProfileAgentSettingWithDefault<string>("", "agent.customHeaderValueLogging");

if (event.getKey().isEqualLowerCase(custom_header)) {
string event_value = static_cast<string>(event.getValue());
dbgTrace(D_HTTP_MANAGER)
<< "Found header key and value - ("
<< event_key
<< custom_header
<< ": "
<< event_value
<< ") that matched agent settings";

@@ -195,7 +197,6 @@ public:
if (state.getUserDefinedValue().ok()) {
ctx.registerValue("UserDefined", state.getUserDefinedValue().unpack(), EnvKeyAttr::LogSection::DATA);
}

return handleEvent(EndRequestEvent().performNamedQuery());
}

@@ -323,8 +324,9 @@ private:
<< respond.second.getVerdict();

state.setApplicationVerdict(respond.first, respond.second.getVerdict());
state.setApplicationWebResponse(respond.first, respond.second.getWebUserResponseByPractice());
}
FilterVerdict aggregated_verdict = state.getCurrVerdict();
FilterVerdict aggregated_verdict(state.getCurrVerdict(), state.getCurrWebUserResponse());
if (aggregated_verdict.getVerdict() == ngx_http_cp_verdict_e::TRAFFIC_VERDICT_DROP) {
SecurityAppsDropEvent(state.getCurrentDropVerdictCausers()).notify();
}

@@ -32,6 +32,13 @@ HttpManagerOpaque::setApplicationVerdict(const string &app_name, ngx_http_cp_ver
applications_verdicts[app_name] = verdict;
}

void
HttpManagerOpaque::setApplicationWebResponse(const string &app_name, string web_user_response_id)
{
dbgTrace(D_HTTP_MANAGER) << "Security app: " << app_name << ", has web user response: " << web_user_response_id;
applications_web_user_response[app_name] = web_user_response_id;
}

ngx_http_cp_verdict_e
HttpManagerOpaque::getApplicationsVerdict(const string &app_name) const
{

@@ -51,8 +58,12 @@ HttpManagerOpaque::getCurrVerdict() const
for (const auto &app_verdic_pair : applications_verdicts) {
switch (app_verdic_pair.second) {
case ngx_http_cp_verdict_e::TRAFFIC_VERDICT_DROP:
dbgTrace(D_HTTP_MANAGER) << "Verdict DROP for app: " << app_verdic_pair.first;
current_web_user_response = applications_web_user_response.at(app_verdic_pair.first);
dbgTrace(D_HTTP_MANAGER) << "current_web_user_response=" << current_web_user_response;
return app_verdic_pair.second;
case ngx_http_cp_verdict_e::TRAFFIC_VERDICT_INJECT:
// Sent in ResponseHeaders and ResponseBody.
verdict = ngx_http_cp_verdict_e::TRAFFIC_VERDICT_INJECT;
break;
case ngx_http_cp_verdict_e::TRAFFIC_VERDICT_ACCEPT:

@@ -60,11 +71,16 @@ HttpManagerOpaque::getCurrVerdict() const
break;
case ngx_http_cp_verdict_e::TRAFFIC_VERDICT_INSPECT:
break;
case ngx_http_cp_verdict_e::LIMIT_RESPONSE_HEADERS:
// Sent in End Request.
verdict = ngx_http_cp_verdict_e::LIMIT_RESPONSE_HEADERS;
break;
case ngx_http_cp_verdict_e::TRAFFIC_VERDICT_IRRELEVANT:
dbgTrace(D_HTTP_MANAGER) << "Verdict 'Irrelevant' is not yet supported. Returning Accept";
accepted_apps++;
break;
case ngx_http_cp_verdict_e::TRAFFIC_VERDICT_WAIT:
// Sent in Request Headers and Request Body.
verdict = ngx_http_cp_verdict_e::TRAFFIC_VERDICT_WAIT;
break;
default:
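`getCurrVerdict` now records the web-user-response id of the application whose DROP verdict ends the aggregation, and the manager passes it into `FilterVerdict`. A simplified sketch of that aggregation follows. `Verdict`, `AggregatedVerdict` and `aggregate` are hypothetical; the ACCEPT handling is assumed (that part of the function is cut off in the diff); the sketch uses `find` instead of `.at()` so a missing response id yields an empty string rather than an exception, and `std::map` so that "first DROP" is deterministic (the real members are `unordered_map`s, whose iteration order is unspecified).

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <string>

// Hypothetical subset of ngx_http_cp_verdict_e, for illustration only.
enum class Verdict { Inspect, Accept, Drop, Inject };

struct AggregatedVerdict {
    Verdict verdict;
    std::string web_user_response;  // set only when an app returned DROP
};

// Simplified aggregation: the first DROP (in key order) wins and carries
// that app's web-user-response id; otherwise INJECT overrides INSPECT, and
// ACCEPT is reported only when every app accepted (assumed behaviour).
AggregatedVerdict aggregate(
    const std::map<std::string, Verdict> &verdicts,
    const std::map<std::string, std::string> &responses)
{
    Verdict result = Verdict::Inspect;
    std::size_t accepted = 0;
    for (const auto &entry : verdicts) {
        switch (entry.second) {
            case Verdict::Drop: {
                auto it = responses.find(entry.first);
                return {Verdict::Drop, it == responses.end() ? std::string() : it->second};
            }
            case Verdict::Inject:
                result = Verdict::Inject;
                break;
            case Verdict::Accept:
                ++accepted;
                break;
            case Verdict::Inspect:
                break;
        }
    }
    if (!verdicts.empty() && accepted == verdicts.size()) result = Verdict::Accept;
    return {result, std::string()};
}
```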

@@ -28,10 +28,12 @@ public:
HttpManagerOpaque();

void setApplicationVerdict(const std::string &app_name, ngx_http_cp_verdict_e verdict);
void setApplicationWebResponse(const std::string &app_name, std::string web_user_response_id);
ngx_http_cp_verdict_e getApplicationsVerdict(const std::string &app_name) const;
void setManagerVerdict(ngx_http_cp_verdict_e verdict) { manager_verdict = verdict; }
ngx_http_cp_verdict_e getManagerVerdict() const { return manager_verdict; }
ngx_http_cp_verdict_e getCurrVerdict() const;
const std::string & getCurrWebUserResponse() const { return current_web_user_response; };
std::set<std::string> getCurrentDropVerdictCausers() const;
void saveCurrentDataToCache(const Buffer &full_data);
void setUserDefinedValue(const std::string &value) { user_defined_value = value; }

@@ -52,6 +54,8 @@ public:

private:
std::unordered_map<std::string, ngx_http_cp_verdict_e> applications_verdicts;
std::unordered_map<std::string, std::string> applications_web_user_response;
mutable std::string current_web_user_response;
ngx_http_cp_verdict_e manager_verdict = ngx_http_cp_verdict_e::TRAFFIC_VERDICT_INSPECT;
Buffer prev_data_cache;
uint aggregated_payload_size = 0;

45

components/include/central_nginx_manager.h

Executable file


@@ -0,0 +1,45 @@
// Copyright (C) 2022 Check Point Software Technologies Ltd. All rights reserved.

// Licensed under the Apache License, Version 2.0 (the "License");
// You may obtain a copy of the License at
//
// http://www.apache.org/licenses/LICENSE-2.0
//
// Unless required by applicable law or agreed to in writing, software
// distributed under the License is distributed on an "AS IS" BASIS,
// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
// See the License for the specific language governing permissions and
// limitations under the License.

#ifndef __CENTRAL_NGINX_MANAGER_H__
#define __CENTRAL_NGINX_MANAGER_H__

#include "component.h"
#include "singleton.h"
#include "i_messaging.h"
#include "i_rest_api.h"
#include "i_mainloop.h"
#include "i_agent_details.h"

class CentralNginxManager
:
public Component,
Singleton::Consume<I_RestApi>,
Singleton::Consume<I_Messaging>,
Singleton::Consume<I_MainLoop>,
Singleton::Consume<I_AgentDetails>
{
public:
CentralNginxManager();
~CentralNginxManager();

void preload() override;
void init() override;
void fini() override;

private:
class Impl;
std::unique_ptr<Impl> pimpl;
};

#endif // __CENTRAL_NGINX_MANAGER_H__
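The new `CentralNginxManager` header hides its state behind a forward-declared `Impl` held in a `std::unique_ptr`, and declares the destructor without defining it inline. That matches the usual pimpl arrangement: `unique_ptr`'s deleter needs `Impl` to be a complete type, so the destructor is presumably defined in the .cc file after `Impl` (the source file is not part of this diff). A compressed single-file sketch with a hypothetical `Manager` class:

```cpp
#include <cassert>
#include <memory>

// --- what would live in the header ---
class Manager
{
public:
    Manager();
    ~Manager();  // declared only: Impl is incomplete at this point
    void init();
    int initCount() const;

private:
    class Impl;
    std::unique_ptr<Impl> pimpl;
};

// --- what would live in the .cc file ---
class Manager::Impl
{
public:
    void init() { ++init_calls; }
    int init_calls = 0;
};

Manager::Manager() : pimpl(std::make_unique<Impl>()) {}
// Defined after Impl is complete, so unique_ptr<Impl> can delete it.
Manager::~Manager() = default;

void Manager::init() { pimpl->init(); }
int Manager::initCount() const { return pimpl->init_calls; }
```

Defaulting `~Manager()` inside the class body instead would fail to compile, because the deleter would be instantiated while `Impl` is still incomplete.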

@@ -45,6 +45,19 @@ private:
std::string host;
};

class EqualWafTag : public EnvironmentEvaluator<bool>, Singleton::Consume<I_Environment>
{
public:
EqualWafTag(const std::vector<std::string> &params);

static std::string getName() { return "EqualWafTag"; }

Maybe<bool, Context::Error> evalVariable() const override;

private:
std::string waf_tag;
};

class EqualListeningIP : public EnvironmentEvaluator<bool>, Singleton::Consume<I_Environment>
{
public:

@@ -317,12 +317,12 @@ public:
{
return url_for_cef;
}
Flags<ReportIS::StreamType> getStreams(SecurityType security_type, bool is_action_drop_or_prevent) const;
Flags<ReportIS::Enreachments> getEnrechments(SecurityType security_type) const;

private:
ReportIS::Severity getSeverity(bool is_action_drop_or_prevent) const;
ReportIS::Priority getPriority(bool is_action_drop_or_prevent) const;
Flags<ReportIS::StreamType> getStreams(SecurityType security_type, bool is_action_drop_or_prevent) const;
Flags<ReportIS::Enreachments> getEnrechments(SecurityType security_type) const;

std::string name;
std::string verbosity;

@@ -339,4 +339,32 @@ private:
bool should_format_output = false;
};

class ReportTriggerConf
{
public:
/// \brief Default constructor for ReportTriggerConf.
ReportTriggerConf() {}

/// \brief Preload function to register expected configuration.
static void
preload()
{
registerExpectedConfiguration<ReportTriggerConf>("rulebase", "report");
}

/// \brief Load function to deserialize configuration from JSONInputArchive.
/// \param archive_in The JSON input archive.
void load(cereal::JSONInputArchive &archive_in);

/// \brief Get the name.
/// \return The name.
const std::string &
getName() const
{
return name;
}
private:
std::string name;
};

#endif //__TRIGGERS_CONFIG_H__

@@ -27,9 +27,18 @@ public:
verdict(_verdict)
{}

FilterVerdict(
ngx_http_cp_verdict_e _verdict,
const std::string &_web_reponse_id)
:
verdict(_verdict),
web_user_response_id(_web_reponse_id)
{}

FilterVerdict(const EventVerdict &_verdict, ModifiedChunkIndex _event_idx = -1)
:
verdict(_verdict.getVerdict())
verdict(_verdict.getVerdict()),
web_user_response_id(_verdict.getWebUserResponseByPractice())
{
if (verdict == ngx_http_cp_verdict_e::TRAFFIC_VERDICT_INJECT) {
addModifications(_verdict.getModifications(), _event_idx);

@@ -59,10 +68,12 @@ public:
uint getModificationsAmount() const { return total_modifications; }
ngx_http_cp_verdict_e getVerdict() const { return verdict; }
const std::vector<EventModifications> & getModifications() const { return modifications; }
const std::string getWebUserResponseID() const { return web_user_response_id; }

private:
ngx_http_cp_verdict_e verdict = ngx_http_cp_verdict_e::TRAFFIC_VERDICT_INSPECT;
std::vector<EventModifications> modifications;
std::string web_user_response_id;
uint total_modifications = 0;
};

@@ -239,6 +239,7 @@ public:
const Buffer & getValue() const { return value; }

bool isLastHeader() const { return is_last_header; }
void setIsLastHeader() { is_last_header = true; }
uint8_t getHeaderIndex() const { return header_index; }

private:

@@ -375,16 +376,31 @@ public:
verdict(event_verdict)
{}

EventVerdict(
const ModificationList &mods,
ngx_http_cp_verdict_e event_verdict,
std::string response_id) :
modifications(mods),
verdict(event_verdict),
webUserResponseByPractice(response_id)
{}

// LCOV_EXCL_START - sync functions, can only be tested once the sync module exists
template <typename T> void serialize(T &ar, uint) { ar(verdict); }
// LCOV_EXCL_STOP

const ModificationList & getModifications() const { return modifications; }
ngx_http_cp_verdict_e getVerdict() const { return verdict; }
const std::string getWebUserResponseByPractice() const { return webUserResponseByPractice; }
void setWebUserResponseByPractice(const std::string id) {
dbgTrace(D_HTTP_MANAGER) << "current verdict web user response set to: " << id;
webUserResponseByPractice = id;
}

private:
ModificationList modifications;
ngx_http_cp_verdict_e verdict = ngx_http_cp_verdict_e::TRAFFIC_VERDICT_INSPECT;
std::string webUserResponseByPractice;
};

#endif // __I_HTTP_EVENT_IMPL_H__

@@ -72,7 +72,8 @@ public:
parsed_uri,
client_ip,
client_port,
response_content_encoding
response_content_encoding,
waf_tag
);
}

@@ -91,7 +92,8 @@ public:
parsed_uri,
client_ip,
client_port,
response_content_encoding
response_content_encoding,
waf_tag
);
}
// LCOV_EXCL_STOP

@@ -122,6 +124,9 @@ public:
response_content_encoding = _response_content_encoding;
}

const std::string & getWafTag() const { return waf_tag; }
void setWafTag(const std::string &_waf_tag) { waf_tag = _waf_tag; }

static const std::string http_proto_ctx;
static const std::string method_ctx;
static const std::string host_name_ctx;

@@ -137,6 +142,7 @@ public:
static const std::string source_identifier;
static const std::string proxy_ip_ctx;
static const std::string xff_vals_ctx;
static const std::string waf_tag_ctx;

static const CompressionType default_response_content_encoding;

@@ -153,6 +159,7 @@ private:
uint16_t client_port;
bool is_request;
CompressionType response_content_encoding;
std::string waf_tag;
};

#endif // __HTTP_TRANSACTION_DATA_H__

@@ -26,11 +26,12 @@ public:
virtual Maybe<std::string> getArch() = 0;
virtual std::string getAgentVersion() = 0;
virtual bool isKernelVersion3OrHigher() = 0;
virtual bool isGw() = 0;
virtual bool isGwNotVsx() = 0;
virtual bool isVersionAboveR8110() = 0;
virtual bool isReverseProxy() = 0;
virtual bool isCloudStorageEnabled() = 0;
virtual Maybe<std::tuple<std::string, std::string, std::string>> parseNginxMetadata() = 0;
virtual Maybe<std::tuple<std::string, std::string, std::string, std::string>> parseNginxMetadata() = 0;
virtual Maybe<std::tuple<std::string, std::string, std::string, std::string, std::string>> readCloudMetadata() = 0;
virtual std::map<std::string, std::string> getResolvedDetails() = 0;
#if defined(gaia) || defined(smb)

@@ -27,6 +27,7 @@ struct DecisionTelemetryData
int responseCode;
uint64_t elapsedTime;
std::set<std::string> attackTypes;
bool temperatureDetected;

DecisionTelemetryData() :
blockType(NOT_BLOCKING),

@@ -38,7 +39,8 @@ struct DecisionTelemetryData
method(POST),
responseCode(0),
elapsedTime(0),
attackTypes()
attackTypes(),
temperatureDetected(false)
{
}
};

@@ -4,6 +4,7 @@
#include "singleton.h"
#include "i_keywords_rule.h"
#include "i_table.h"
#include "i_mainloop.h"
#include "i_http_manager.h"
#include "i_environment.h"
#include "http_inspection_events.h"

@@ -16,7 +17,8 @@ class IPSComp
Singleton::Consume<I_KeywordsRule>,
Singleton::Consume<I_Table>,
Singleton::Consume<I_Environment>,
Singleton::Consume<I_GenericRulebase>
Singleton::Consume<I_GenericRulebase>,
Singleton::Consume<I_MainLoop>
{
public:
IPSComp();

@@ -62,6 +62,7 @@ public:

private:
Maybe<std::string> downloadPackage(const Package &package, bool is_clean_installation);
std::string getCurrentTimestamp();

std::string manifest_file_path;
std::string temp_ext;

28