Mirror of https://github.com/openappsec/openappsec.git (synced 2025-11-15 17:02:15 +03:00)

Compare commits: orianelou- ... main (292 commits)

| SHA1 |
|---|
| 78d1bcf7c4 |
| c90862d74c |
| b7923dfd8c |
| ed4e20b010 |
| 14159402e2 |
| b74957d9d4 |
| 0c0da6d91b |
| ef887dd1c7 |
| 6bbc89712a |
| dd19bf6158 |
| 60facef890 |
| a3ac05642c |
| 682b91684d |
| ff8c5701fe |
| 796c6cf935 |
| 31ff6f2c72 |
| eac686216b |
| 938cae1270 |
| 87cdeef42f |
| d04ea7d3e2 |
| 6d649cf5d5 |
| 5f71946590 |
| c75f1e88b7 |
| c4975497eb |
| 782dfeada6 |
| bc1eac9d39 |
| 4dacd7d009 |
| 3a34984def |
| 5aaf787cfa |
| 2c7b5818e8 |
| c8743d4d4b |
| d703f16e35 |
| 692c430e8a |
| 72c5594b10 |
| 2c6b6baa3b |
| 37d0f1c45f |
| 2678db9d2f |
| 52c93ad574 |
| bd3a53041e |
| 44f40fbd1b |
| 0691f9b9cd |
| 0891dcd251 |
| 7669f0c89c |
| 39d7884bed |
| b8783c3065 |
| 37dc9f14b4 |
| 9a1f1b5966 |
| b0bfd3077c |
| 0469f5aa1f |
| 3578797214 |
| 16a72fdf3e |
| 87d257f268 |
| 36d8006c26 |
| 8d47795d4d |
| f3656712b0 |
| b1781234fd |
| f71dca2bfa |
| bd333818ad |
| 95e776d7a4 |
| 51c2912434 |
| 0246b73bbd |
| 919921f6d3 |
| e9098e2845 |
| 97d042589b |
| df7be864e2 |
| ba8ec26344 |
| 97add465e8 |
| 38cb1f2c3b |
| 1dd9371840 |
| f23d22a723 |
| b51cf09190 |
| ceb6469a7e |
| b0ae283eed |
| 5fcb9bdc4a |
| fb5698360b |
| 147626bc7f |
| 448991ef75 |
| 2b1ee84280 |
| 77dd288eee |
| 3cb4def82e |
| a0dd7dd614 |
| 88eed946ec |
| 3e1ad8b0f7 |
| bd35c421c6 |
| 9d6e883724 |
| cd020a7ddd |
| bb35eaf657 |
| 648f9ae2b1 |
| 47e47d706a |
| b852809d1a |
| a77732f84c |
| a1a8e28019 |
| a99c2ec4a3 |
| f1303c1703 |
| bd8174ead3 |
| 4ddcd2462a |
| 81433bac25 |
| 8d03b49176 |
| 84f9624c00 |
| 3ecda7b979 |
| 8f05508e02 |
| f5b9c93fbe |
| 62b74c9a10 |
| e3163cd4fa |
| 1e98fc8c66 |
| 6fbe272378 |
| 7b3320ce10 |
| 25cc2d66e7 |
| 66e2112afb |
| ba7c9afd52 |
| 2aa0993d7e |
| 0cdfc9df90 |
| 010814d656 |
| 3779dd360d |
| 0e7dc2133d |
| c9095acbef |
| e47e29321d |
| 25a66e77df |
| 6eea40f165 |
| cee6ed511a |
| 4f145fd74f |
| 3fe5c5b36f |
| 7542a85ddb |
| fae4534e5c |
| 923a8a804b |
| b1731237d1 |
| 3d3d6e73b9 |
| 3f80127ec5 |
| abdee954bb |
| 9a516899e8 |
| 4fd2aa6c6b |
| 0db666ac4f |
| 493d9a6627 |
| 6db87fc7fe |
| d2b9bc8c9c |
| 886a5befe1 |
| 1f2502f9e4 |
| 9e4c5014ce |
| 024423cce9 |
| dc4b546bd1 |
| a86aca13b4 |
| 87b34590d4 |
| e0198a1a95 |
| d024ad5845 |
| 46d42c8fa3 |
| f6c36f3363 |
| 63541a4c3c |
| d14fa7a468 |
| ae0de5bf14 |
| d39919f348 |
| 4f215e1409 |
| f05b5f8cee |
| 949b656b13 |
| bbe293d215 |
| 35b2df729f |
| 7600b6218f |
| 20e8e65e14 |
| 414130a789 |
| 9d704455e8 |
| 602442fed4 |
| 4e9a90db01 |
| 20f92afbc2 |
| ee7adc37d0 |
| c0b3e9c0d0 |
| f1f4b13327 |
| 4354a98d37 |
| 09fa11516c |
| 446b043128 |
| 91bcadf930 |
| 0824cf4b23 |
| 108abdb35e |
| 64ebf013eb |
| 2c91793f08 |
| 72a263d25a |
| 4e14ff9a58 |
| 1fb28e14d6 |
| e38bb9525c |
| 63b8bb22c2 |
| 11c97330f5 |
| e56fb0bc1a |
| 4571d563f4 |
| 02c1db01f6 |
| c557affd9b |
| 8889c3c054 |
| f67eff87bc |
| fa6a2e4233 |
| b7e2efbf7e |
| 96ce290e5f |
| de8e2d9970 |
| 0048708af1 |
| 4fe0f44e88 |
| 5f139d13d7 |
| 919d775a73 |
| ac8e353598 |
| 0663f20691 |
| 2dda6231f6 |
| 1c1f0b7e29 |
| 6255e1f30d |
| 454aacf622 |
| c91ccba5a8 |
| b1f897191c |
| 027ddfea21 |
| d1a2906b29 |
| b1ade9bba0 |
| 36d302b77e |
| 1d7d38b0a6 |
| 1b7eafaa23 |
| c2ea2cda6d |
| b58f7781e6 |
| 7153d222c0 |
| f1ec8959b7 |
| 4a7336b276 |
| 4d0042e933 |
| 015915497a |
| 586150fe4f |
| 3fe0b42fcd |
| 84e10c7129 |
| eddd250409 |
| 294cb600f8 |
| f4bad4c4d9 |
| 6e916599d9 |
| 24d53aed53 |
| 93fb3da2f8 |
| e7378c9a5f |
| 110f0c8bd2 |
| ca31aac08a |
| 161b6dd180 |
| 84327e0b19 |
| b9723ba6ce |
| 00e183b8c6 |
| e859c167ed |
| 384b59cc87 |
| 805e958cb9 |
| 5bcd7cfcf1 |
| ae6f2faeec |
| 705a5e6061 |
| c33b74a970 |
| 2da9fbc385 |
| f58e9a6128 |
| 57ea5c72c5 |
| 962bd31d46 |
| 01770475ec |
| 78b114a274 |
| 81b1aec487 |
| be6591a670 |
| 663782009c |
| 9392bbb26c |
| 46682bcdce |
| 057bc42375 |
| 88e0ccd308 |
| 4241b9c574 |
| 4af9f18ada |
| 3b533608b1 |
| 74bb3086ec |
| 504d1415a5 |
| 18b1b63c42 |
| ded2a5ffc2 |
| 1254bb37b2 |
| cf16343caa |
| 78c4209406 |
| 3c8672c565 |
| 48d6baed3b |
| 8770257a60 |
| fd5d093b24 |
| d6debf8d8d |
| 395b754575 |
| dc000372c4 |
| 941c641174 |
| fdc148aa9b |
| 307fd8897d |
| afd2b4930b |
| 1fb9a29223 |
| 253ca70de6 |
| 938f625535 |
| 183d14fc55 |
| 1f3d4ed5e1 |
| fdbd6d3786 |
| 4504138a4a |
| 66ed4a8d81 |
| 189c9209c9 |
| 1a1580081c |
| 942b2ef8b4 |
| 7a7f65a77a |
| 98639d9cb6 |
| b3de81d9d9 |
| a77fd9a6d0 |
| 8454b2dd9b |
| 3913e1e8b3 |
| 262b2e59ff |
| a01c65994a |
| 1d13973ae2 |
| c20fa9f966 |

**.github/ISSUE_TEMPLATE/bug_report.md** (vendored, normal file, 36 lines changed)

@@ -0,0 +1,36 @@
---
name: "Bug Report"
about: "Report a bug with open-appsec"
labels: [bug]
---

**Checklist**
- Have you checked the open-appsec troubleshooting guides - https://docs.openappsec.io/troubleshooting/troubleshooting
- Yes / No
- Have you checked the existing issues and discussions in GitHub for the same issue
- Yes / No
- Have you checked the known limitations for the same issue - https://docs.openappsec.io/release-notes#limitations
- Yes / No

**Describe the bug**
A clear and concise description of what the bug is.

**To Reproduce**
Steps to reproduce the behavior:
1. Go to '...'
2. Run '...'
3. See error '...'

**Expected behavior**
A clear and concise description of what you expected to happen.

**Screenshots or Logs**
If applicable, add screenshots or logs to help explain the issue.

**Environment (please complete the following information):**
- open-appsec version:
- Deployment type (Docker, Kubernetes, etc.):
- OS:

**Additional context**
Add any other context about the problem here.

**.github/ISSUE_TEMPLATE/config.yml** (vendored, normal file, 8 lines changed)

@@ -0,0 +1,8 @@
blank_issues_enabled: false
contact_links:
- name: "Documentation & Troubleshooting"
url: "https://docs.openappsec.io/"
about: "Check the documentation before submitting an issue."
- name: "Feature Requests & Discussions"
url: "https://github.com/openappsec/openappsec/discussions"
about: "Please open a discussion for feature requests."

**.github/ISSUE_TEMPLATE/nginx_version_support.md** (vendored, normal file, 17 lines changed)

@@ -0,0 +1,17 @@
---
name: "Nginx Version Support Request"
about: "Request for a specific Nginx version to be supported"
---

**Nginx & OS Version:**
Which Nginx and OS version are you using?

**Output of nginx -V**
Share the output of nginx -V

**Expected Behavior:**
What do you expect to happen with this version?

**Checklist**
- Have you considered a Docker-based deployment? Find more information here: https://docs.openappsec.io/getting-started/start-with-docker
- Yes / No

@@ -1,7 +1,7 @@
cmake_minimum_required (VERSION 2.8.4)
project (ngen)

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -fPIC -Wall -Wno-terminate")
set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -O2 -fPIC -Wall -Wno-terminate")

execute_process(COMMAND grep -c "Alpine Linux" /etc/os-release OUTPUT_VARIABLE IS_ALPINE)
if(NOT IS_ALPINE EQUAL "0")

**README.md** (24 lines changed)

@@ -6,7 +6,7 @@
[CII Best Practices](https://bestpractices.coreinfrastructure.org/projects/6629)

# About
[open-appsec](https://www.openappsec.io) (openappsec.io) builds on machine learning to provide preemptive web app & API threat protection against OWASP-Top-10 and zero-day attacks. It can be deployed as an add-on to Kubernetes Ingress, NGINX, Envoy (soon), and API Gateways.
[open-appsec](https://www.openappsec.io) (openappsec.io) builds on machine learning to provide preemptive web app & API threat protection against OWASP-Top-10 and zero-day attacks. It can be deployed as an add-on to Linux, Docker or K8s deployments, on NGINX, Kong, APISIX, or Envoy.

The open-appsec engine learns how users normally interact with your web application. It then uses this information to automatically detect requests that fall outside of normal operations, and conducts further analysis to decide whether the request is malicious or not.
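The README describes the engine only at a high level: learn what normal traffic looks like, then route requests that fall outside it to further analysis. As a toy illustration of that general baseline-then-outlier idea (not open-appsec's actual machine-learning model, whose features and thresholds are not described here), the C sketch below learns the mean and variance of one numeric request feature during a learning phase and flags values more than `k` standard deviations away. The `Baseline` type, the choice of feature, and the threshold `k` are all hypothetical.

```c
/* Running statistics of one numeric request feature, e.g. the length
 * of a single parameter value (a hypothetical choice of feature). */
typedef struct {
    double count;
    double sum;
    double sum_sq;
} Baseline;

/* Learning phase: record a value observed in normal traffic. */
static void
baseline_learn(Baseline *b, double value)
{
    b->count += 1.0;
    b->sum += value;
    b->sum_sq += value * value;
}

/* Inspection phase: returns 1 if value lies more than k standard
 * deviations from the learned mean, else 0. The comparison is done on
 * squared quantities, so no sqrt() (and no -lm) is needed. */
static int
baseline_is_outlier(const Baseline *b, double value, double k)
{
    if (b->count < 2.0)
        return 0; /* too little data to call anything an outlier */
    double mean = b->sum / b->count;
    double variance = b->sum_sq / b->count - mean * mean;
    double dev = value - mean;
    return dev * dev > k * k * variance;
}
```

For example, after learning the lengths 10, 12, 11, 9, 10, 12, 11 and 10, `baseline_is_outlier(&b, 200, 3.0)` returns 1 while `baseline_is_outlier(&b, 11, 3.0)` returns 0. The sum-of-squares variance formula is the simplest to read; Welford's online algorithm is numerically safer when values are large.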

@@ -39,13 +39,13 @@ open-appsec can be managed using multiple methods:

|

||||

* [Using SaaS Web Management](https://docs.openappsec.io/getting-started/using-the-web-ui-saas)

|

||||

|

||||

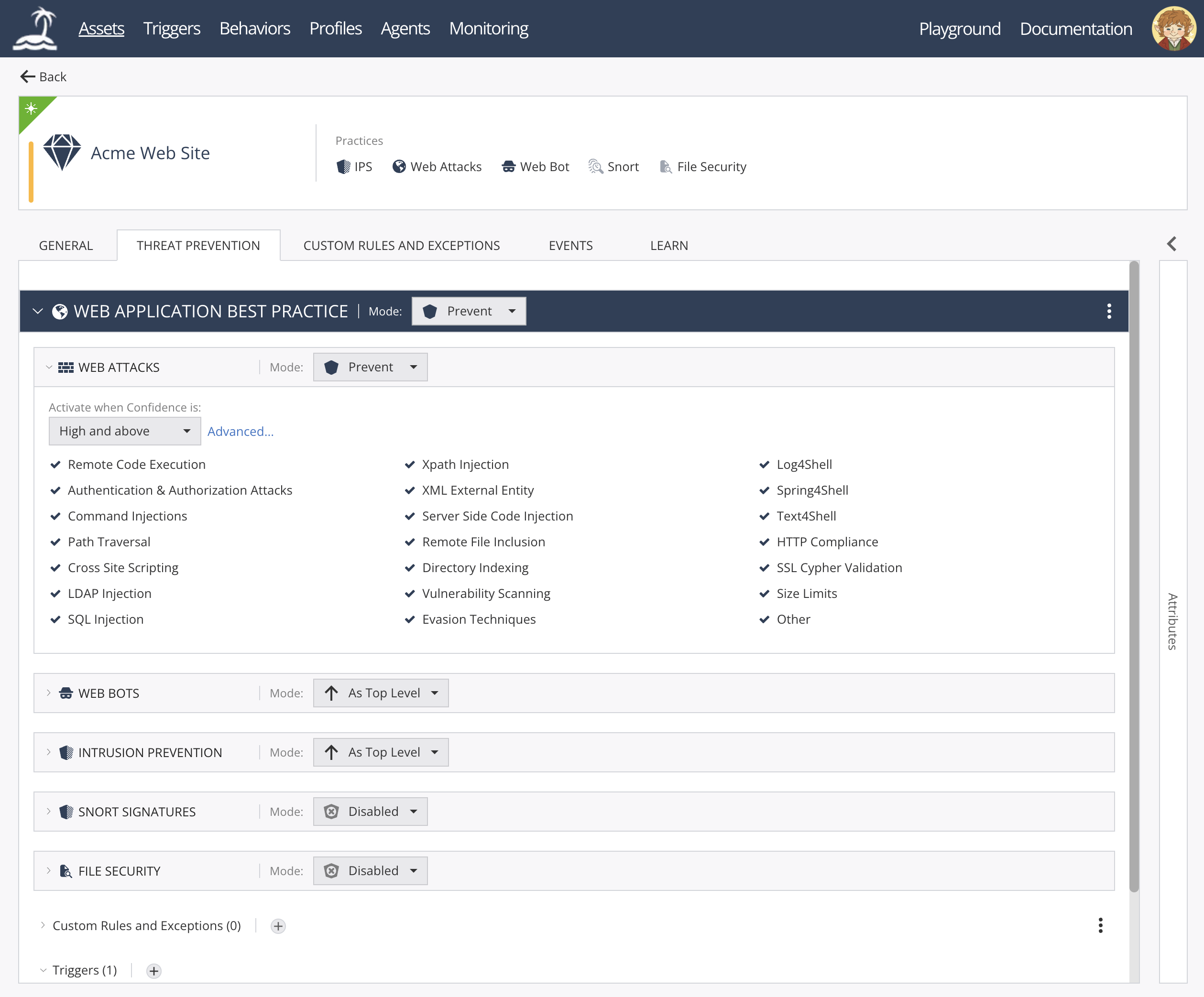

open-appsec Web UI:

|

||||

|

||||

<img width="1854" height="775" alt="image" src="https://github.com/user-attachments/assets/4c6f7b0a-14f3-4f02-9ab0-ddadc9979b8d" />

|

||||

|

||||

|

||||

|

||||

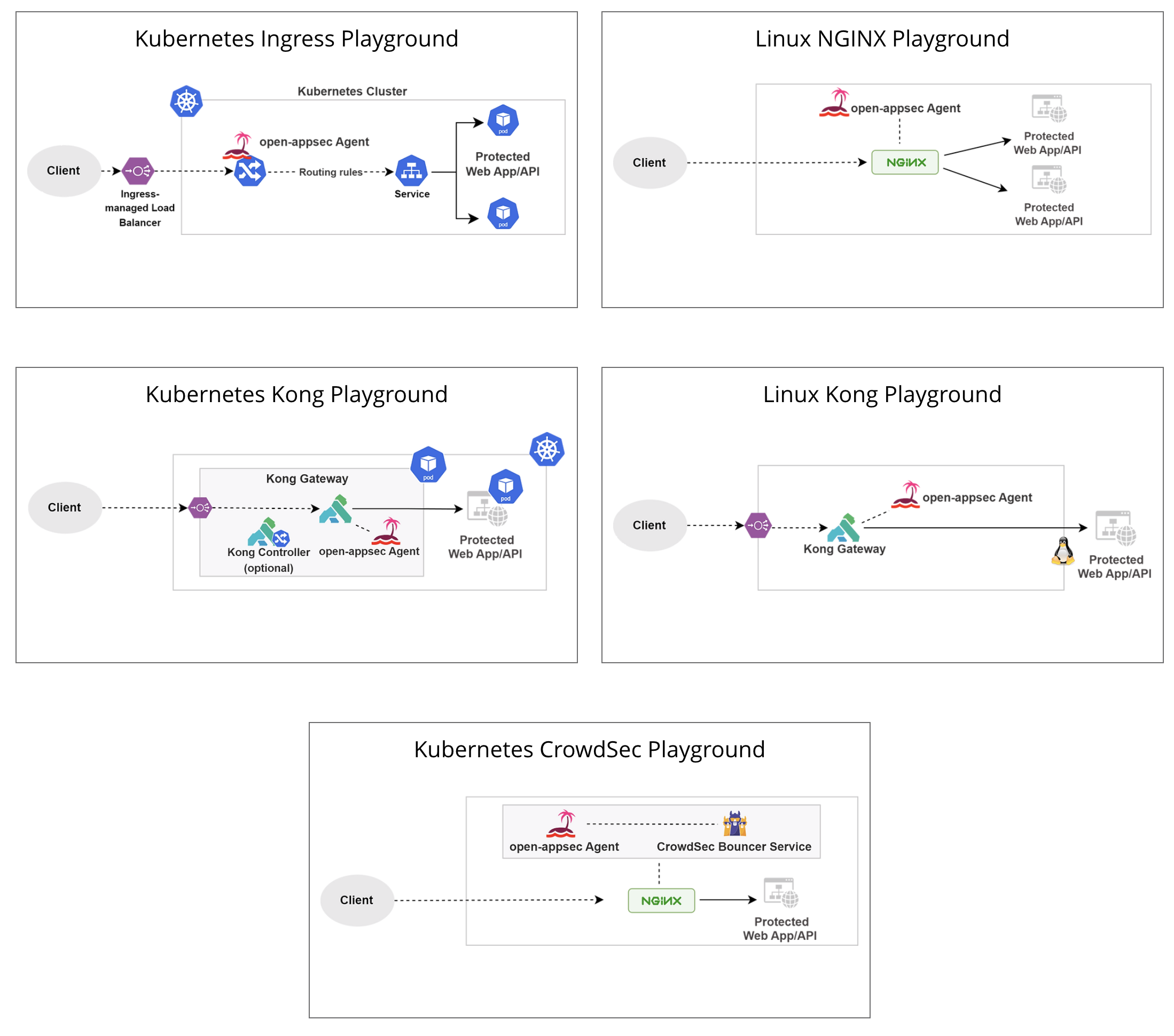

## Deployment Playgrounds (Virtual labs)

|

||||

You can experiment with open-appsec using [Playgrounds](https://www.openappsec.io/playground)

|

||||

|

||||

|

||||

<img width="781" height="878" alt="image" src="https://github.com/user-attachments/assets/0ddee216-5cdf-4288-8c41-cc28cfbf3297" />

|

||||

|

||||

# Resources

|

||||

* [Project Website](https://openappsec.io)

|

||||

@@ -54,27 +54,21 @@ You can experiment with open-appsec using [Playgrounds](https://www.openappsec.i

# Installation

For Kubernetes (NGINX Ingress) using the installer:
For Kubernetes (NGINX / Kong / APISIX / Istio) using Helm: follow [documentation](https://docs.openappsec.io/getting-started/start-with-kubernetes)

```bash
$ wget https://downloads.openappsec.io/open-appsec-k8s-install && chmod +x open-appsec-k8s-install
$ ./open-appsec-k8s-install
```

For Kubernetes (NGINX or Kong) using Helm: follow [documentation](https://docs.openappsec.io/getting-started/start-with-kubernetes/install-using-helm-ingress-nginx-and-kong) – use this method if you’ve built your own containers.

For Linux (NGINX or Kong) using the installer (list of supported/pre-compiled NGINX attachments is available [here](https://downloads.openappsec.io/packages/supported-nginx.txt)):
For Linux (NGINX / Kong / APISIX) using the installer (list of supported/pre-compiled NGINX attachments is available [here](https://downloads.openappsec.io/packages/supported-nginx.txt)):

```bash
$ wget https://downloads.openappsec.io/open-appsec-install && chmod +x open-appsec-install
$ ./open-appsec-install --auto
```
For the Kong Lua-based plugin, follow [documentation](https://docs.openappsec.io/getting-started/start-with-linux)

For Linux, if you’ve built your own package, use the following commands:

```bash
$ install-cp-nano-agent.sh --install --hybrid_mode
$ install-cp-nano-service-http-transaction-handler.sh –install
$ install-cp-nano-service-http-transaction-handler.sh --install
$ install-cp-nano-attachment-registration-manager.sh --install
```
You can add the `--token <token>` and `--email <email address>` options to the first command. To get a token, follow [documentation](https://docs.openappsec.io/getting-started/using-the-web-ui-saas/connect-deployed-agents-to-saas-management-k8s-and-linux).

@@ -177,7 +171,7 @@ open-appsec code was audited by an independent third party in September-October
See the [full report](https://github.com/openappsec/openappsec/blob/main/LEXFO-CHP20221014-Report-Code_audit-OPEN-APPSEC-v1.2.pdf).

### Reporting security vulnerabilities
If you've found a vulnerability or a potential vulnerability in open-appsec please let us know at securityalert@openappsec.io. We'll send a confirmation email to acknowledge your report within 24 hours, and we'll send an additional email when we've identified the issue positively or negatively.
If you've found a vulnerability or a potential vulnerability in open-appsec please let us know at security-alert@openappsec.io. We'll send a confirmation email to acknowledge your report within 24 hours, and we'll send an additional email when we've identified the issue positively or negatively.

# License

@@ -95,6 +95,18 @@ getFailOpenHoldTimeout()
return conf_data.getNumericalValue("fail_open_hold_timeout");
}

unsigned int
getHoldVerdictPollingTime()
{
return conf_data.getNumericalValue("hold_verdict_polling_time");
}

unsigned int
getHoldVerdictRetries()
{
return conf_data.getNumericalValue("hold_verdict_retries");
}

unsigned int
getMaxSessionsPerMinute()
{
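The new `getHoldVerdictRetries()` and `getHoldVerdictPollingTime()` getters suggest a bounded polling loop, but this diff does not show how the attachment consumes them. The following is a minimal sketch under that assumption: poll for a held verdict up to `retries` times, waiting `polling_time` (units are not stated in the source) between attempts. `wait_for_held_verdict`, `VerdictState`, `stub_poll` and `no_wait` are hypothetical names, not part of open-appsec.

```c
#include <stdbool.h>

/* Hypothetical verdict states; the attachment's real types are not shown in this diff. */
typedef enum { VERDICT_PENDING, VERDICT_READY } VerdictState;

typedef VerdictState (*PollFn)(void *ctx);
typedef void (*WaitFn)(unsigned int polling_time);

/* Polls for a held verdict at most `retries` times, calling wait_fn between
 * attempts (never after the last one). Returns true if a verdict became
 * ready within the retry budget, false if the budget ran out. */
static bool
wait_for_held_verdict(PollFn poll_fn, void *ctx, WaitFn wait_fn,
                      unsigned int retries, unsigned int polling_time)
{
    for (unsigned int attempt = 0; attempt < retries; attempt++) {
        if (poll_fn(ctx) == VERDICT_READY)
            return true;
        if (attempt + 1 < retries)
            wait_fn(polling_time);
    }
    return false;
}

/* Illustrative stub: *ctx holds how many more polls report "pending". */
static VerdictState
stub_poll(void *ctx)
{
    unsigned int *pending = ctx;
    if (*pending == 0)
        return VERDICT_READY;
    (*pending)--;
    return VERDICT_PENDING;
}

/* No-op wait so the example runs instantly; a real caller would sleep here. */
static void
no_wait(unsigned int polling_time)
{
    (void)polling_time;
}
```

With the values used in the unit test (`hold_verdict_retries` 3, `hold_verdict_polling_time` 1), a poller that becomes ready on its third call succeeds, whereas a budget of two polls gives up. Passing the wait as a callback keeps the loop testable without real sleeps.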

@@ -155,6 +167,30 @@ getWaitingForVerdictThreadTimeout()
return conf_data.getNumericalValue("waiting_for_verdict_thread_timeout_msec");
}

unsigned int
getMinRetriesForVerdict()
{
return conf_data.getNumericalValue("min_retries_for_verdict");
}

unsigned int
getMaxRetriesForVerdict()
{
return conf_data.getNumericalValue("max_retries_for_verdict");
}

unsigned int
getReqBodySizeTrigger()
{
return conf_data.getNumericalValue("body_size_trigger");
}

unsigned int
getRemoveResServerHeader()
{
return conf_data.getNumericalValue("remove_server_header");
}

int
isIPAddress(c_str ip_str)
{
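Only the signature of `isIPAddress(c_str ip_str)` appears in this hunk; its body is not shown. One common way to write such a check in C is to try POSIX `inet_pton()` for both address families. The sketch below assumes `c_str` is a `const char *` and that both IPv4 and IPv6 literals should count as IP addresses; `is_ip_address` is a hypothetical name, not the project's implementation.

```c
#include <arpa/inet.h>
#include <netinet/in.h>
#include <stddef.h>
#include <sys/socket.h>

/* Returns 1 if ip_str is a valid IPv4 or IPv6 address literal, else 0.
 * inet_pton() returns 1 only when the whole string parses for that family. */
static int
is_ip_address(const char *ip_str)
{
    struct in_addr v4;
    struct in6_addr v6;

    if (ip_str == NULL)
        return 0;
    return inet_pton(AF_INET, ip_str, &v4) == 1 ||
           inet_pton(AF_INET6, ip_str, &v6) == 1;
}
```

Unlike `inet_aton()`, `inet_pton()` with `AF_INET` accepts only full dotted-decimal notation, so shorthand forms such as `1.2.3` and out-of-range octets such as `1.2.3.256` are rejected.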

@@ -63,32 +63,44 @@ TEST_F(HttpAttachmentUtilTest, GetValidAttachmentConfiguration)
"\"waiting_for_verdict_thread_timeout_msec\": 75,\n"
"\"req_header_thread_timeout_msec\": 10,\n"
"\"ip_ranges\": " + createIPRangesString(ip_ranges) + ",\n"
"\"static_resources_path\": \"" + static_resources_path + "\""
"\"static_resources_path\": \"" + static_resources_path + "\",\n"
"\"min_retries_for_verdict\": 1,\n"
"\"max_retries_for_verdict\": 3,\n"
"\"hold_verdict_retries\": 3,\n"
"\"hold_verdict_polling_time\": 1,\n"
"\"body_size_trigger\": 777,\n"
"\"remove_server_header\": 1\n"
"}\n";
ofstream valid_configuration_file(attachment_configuration_file_name);
valid_configuration_file << valid_configuration;
valid_configuration_file.close();

EXPECT_EQ(initAttachmentConfig(attachment_configuration_file_name.c_str()), 1);
EXPECT_EQ(getDbgLevel(), 2);
EXPECT_EQ(getDbgLevel(), 2u);
EXPECT_EQ(getStaticResourcesPath(), static_resources_path);
EXPECT_EQ(isFailOpenMode(), 0);
EXPECT_EQ(getFailOpenTimeout(), 1234);
EXPECT_EQ(getFailOpenTimeout(), 1234u);
EXPECT_EQ(isFailOpenHoldMode(), 1);
EXPECT_EQ(getFailOpenHoldTimeout(), 4321);
EXPECT_EQ(getFailOpenHoldTimeout(), 4321u);
EXPECT_EQ(isFailOpenOnSessionLimit(), 1);
EXPECT_EQ(getMaxSessionsPerMinute(), 0);
EXPECT_EQ(getNumOfNginxIpcElements(), 200);
EXPECT_EQ(getKeepAliveIntervalMsec(), 10000);
EXPECT_EQ(getResProccessingTimeout(), 420);
EXPECT_EQ(getReqProccessingTimeout(), 42);
EXPECT_EQ(getRegistrationThreadTimeout(), 101);
EXPECT_EQ(getReqHeaderThreadTimeout(), 10);
EXPECT_EQ(getReqBodyThreadTimeout(), 155);
EXPECT_EQ(getResHeaderThreadTimeout(), 1);
EXPECT_EQ(getResBodyThreadTimeout(), 0);
EXPECT_EQ(getWaitingForVerdictThreadTimeout(), 75);
EXPECT_EQ(getMaxSessionsPerMinute(), 0u);
EXPECT_EQ(getNumOfNginxIpcElements(), 200u);
EXPECT_EQ(getKeepAliveIntervalMsec(), 10000u);
EXPECT_EQ(getResProccessingTimeout(), 420u);
EXPECT_EQ(getReqProccessingTimeout(), 42u);
EXPECT_EQ(getRegistrationThreadTimeout(), 101u);
EXPECT_EQ(getReqHeaderThreadTimeout(), 10u);
EXPECT_EQ(getReqBodyThreadTimeout(), 155u);
EXPECT_EQ(getResHeaderThreadTimeout(), 1u);
EXPECT_EQ(getResBodyThreadTimeout(), 0u);
EXPECT_EQ(getMinRetriesForVerdict(), 1u);
EXPECT_EQ(getMaxRetriesForVerdict(), 3u);
EXPECT_EQ(getReqBodySizeTrigger(), 777u);
EXPECT_EQ(getWaitingForVerdictThreadTimeout(), 75u);
EXPECT_EQ(getInspectionMode(), ngx_http_inspection_mode::BLOCKING_THREAD);
EXPECT_EQ(getRemoveResServerHeader(), 1u);
EXPECT_EQ(getHoldVerdictRetries(), 3u);
EXPECT_EQ(getHoldVerdictPollingTime(), 1u);

EXPECT_EQ(isDebugContext("1.2.3.4", "5.6.7.8", 80, "GET", "test", "/abc"), 1);
EXPECT_EQ(isDebugContext("1.2.3.9", "5.6.7.8", 80, "GET", "test", "/abc"), 0);
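The test writes `ip_ranges` through `createIPRangesString()`, whose input is not shown, and then expects `isDebugContext()` to match client `1.2.3.4` but not `1.2.3.9`. A minimal sketch of an inclusive IPv4 range check that would produce that outcome is shown below, assuming a hypothetical configured range of `1.2.3.0` to `1.2.3.5`. `parse_ipv4` and `ipv4_in_range` are illustrative names; the project's actual matching logic (for example IPv6 or CIDR support) may differ.

```c
#include <arpa/inet.h>
#include <netinet/in.h>
#include <stdint.h>
#include <sys/socket.h>

/* Parses a dotted-quad IPv4 string into a host-byte-order integer.
 * Returns 1 on success, 0 if the string is not a valid IPv4 literal. */
static int
parse_ipv4(const char *s, uint32_t *out)
{
    struct in_addr addr;
    if (inet_pton(AF_INET, s, &addr) != 1)
        return 0;
    *out = ntohl(addr.s_addr); /* network order -> host order for numeric comparison */
    return 1;
}

/* Returns 1 if ip lies in the inclusive range [low, high], else 0
 * (including when any of the three strings fails to parse). */
static int
ipv4_in_range(const char *ip, const char *low, const char *high)
{
    uint32_t v, lo, hi;
    if (!parse_ipv4(ip, &v) || !parse_ipv4(low, &lo) || !parse_ipv4(high, &hi))
        return 0;
    return v >= lo && v <= hi;
}
```

Converting each address to host byte order first means plain integer comparison follows address order, so a range check reduces to two comparisons.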

@@ -3,4 +3,4 @@ dependencies:
repository: https://charts.bitnami.com/bitnami
version: 12.2.8
digest: sha256:0d13b8b0c66b8e18781eac510ce58b069518ff14a6a15ad90375e7f0ffad71fe
generated: "2024-02-18T16:45:15.395307713Z"
generated: "2024-03-26T14:53:49.928153508Z"

@@ -1,7 +1,5 @@
annotations:
artifacthub.io/changes: |-
- "update web hook cert gen to latest release v20231226-1a7112e06"
- "Update Ingress-Nginx version controller-v1.9.6"
artifacthub.io/changes: '- "Update Ingress-Nginx version controller-v1.10.0"'
artifacthub.io/prerelease: "false"
apiVersion: v2
appVersion: latest

@@ -17,4 +15,4 @@ kubeVersion: '>=1.20.0-0'
name: open-appsec-k8s-nginx-ingress
sources:
- https://github.com/kubernetes/ingress-nginx
version: 4.9.1
version: 4.10.0

@@ -2,7 +2,7 @@

[ingress-nginx](https://github.com/kubernetes/ingress-nginx) Ingress controller for Kubernetes using NGINX as a reverse proxy and load balancer

To use, add `ingressClassName: nginx` spec field or the `kubernetes.io/ingress.class: nginx` annotation to your Ingress resources.

@@ -253,11 +253,11 @@ As of version `1.26.0` of this chart, by simply not providing any clusterIP valu
| controller.admissionWebhooks.namespaceSelector | object | `{}` | |
| controller.admissionWebhooks.objectSelector | object | `{}` | |
| controller.admissionWebhooks.patch.enabled | bool | `true` | |
| controller.admissionWebhooks.patch.image.digest | string | `"sha256:25d6a5f11211cc5c3f9f2bf552b585374af287b4debf693cacbe2da47daa5084"` | |
| controller.admissionWebhooks.patch.image.digest | string | `"sha256:44d1d0e9f19c63f58b380c5fddaca7cf22c7cee564adeff365225a5df5ef3334"` | |
| controller.admissionWebhooks.patch.image.image | string | `"ingress-nginx/kube-webhook-certgen"` | |
| controller.admissionWebhooks.patch.image.pullPolicy | string | `"IfNotPresent"` | |
| controller.admissionWebhooks.patch.image.registry | string | `"registry.k8s.io"` | |
| controller.admissionWebhooks.patch.image.tag | string | `"v20231226-1a7112e06"` | |
| controller.admissionWebhooks.patch.image.tag | string | `"v1.4.0"` | |
| controller.admissionWebhooks.patch.labels | object | `{}` | Labels to be added to patch job resources |
| controller.admissionWebhooks.patch.networkPolicy.enabled | bool | `false` | Enable 'networkPolicy' or not |
| controller.admissionWebhooks.patch.nodeSelector."kubernetes.io/os" | string | `"linux"` | |

@@ -317,7 +317,7 @@ As of version `1.26.0` of this chart, by simply not providing any clusterIP valu
| controller.hostname | object | `{}` | Optionally customize the pod hostname. |
| controller.image.allowPrivilegeEscalation | bool | `false` | |
| controller.image.chroot | bool | `false` | |
| controller.image.digest | string | `"sha256:1405cc613bd95b2c6edd8b2a152510ae91c7e62aea4698500d23b2145960ab9c"` | |
| controller.image.digest | string | `"sha256:42b3f0e5d0846876b1791cd3afeb5f1cbbe4259d6f35651dcc1b5c980925379c"` | |
| controller.image.digestChroot | string | `"sha256:7eb46ff733429e0e46892903c7394aff149ac6d284d92b3946f3baf7ff26a096"` | |
| controller.image.image | string | `"ingress-nginx/controller"` | |
| controller.image.pullPolicy | string | `"IfNotPresent"` | |

@@ -326,7 +326,7 @@ As of version `1.26.0` of this chart, by simply not providing any clusterIP valu
| controller.image.runAsNonRoot | bool | `true` | |
| controller.image.runAsUser | int | `101` | |
| controller.image.seccompProfile.type | string | `"RuntimeDefault"` | |
| controller.image.tag | string | `"v1.9.6"` | |
| controller.image.tag | string | `"v1.10.0"` | |
| controller.ingressClass | string | `"nginx"` | For backwards compatibility with ingress.class annotation, use ingressClass. Algorithm is as follows, first ingressClassName is considered, if not present, controller looks for ingress.class annotation |
| controller.ingressClassByName | bool | `false` | Process IngressClass per name (additionally as per spec.controller). |
| controller.ingressClassResource.controllerValue | string | `"k8s.io/ingress-nginx"` | Controller-value of the controller that is processing this ingressClass |

@@ -0,0 +1,9 @@
# Changelog

This file documents all notable changes to [ingress-nginx](https://github.com/kubernetes/ingress-nginx) Helm Chart. The release numbering uses [semantic versioning](http://semver.org).

### 4.10.0

* - "Update Ingress-Nginx version controller-v1.10.0"

**Full Changelog**: https://github.com/kubernetes/ingress-nginx/compare/helm-chart-4.9.1...helm-chart-4.10.0

@@ -29,7 +29,7 @@
- --watch-namespace={{ default "$(POD_NAMESPACE)" .Values.controller.scope.namespace }}
{{- end }}
{{- if and (not .Values.controller.scope.enabled) .Values.controller.scope.namespaceSelector }}
- --watch-namespace-selector={{ default "" .Values.controller.scope.namespaceSelector }}
- --watch-namespace-selector={{ .Values.controller.scope.namespaceSelector }}
{{- end }}
{{- if and .Values.controller.reportNodeInternalIp .Values.controller.hostNetwork }}
- --report-node-internal-ip-address={{ .Values.controller.reportNodeInternalIp }}

@@ -54,6 +54,9 @@
{{- if .Values.controller.watchIngressWithoutClass }}
- --watch-ingress-without-class=true
{{- end }}
{{- if not .Values.controller.metrics.enabled }}
- --enable-metrics={{ .Values.controller.metrics.enabled }}
{{- end }}
{{- if .Values.controller.enableTopologyAwareRouting }}
- --enable-topology-aware-routing=true
{{- end }}
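The new template block renders `--enable-metrics=false` only when `controller.metrics.enabled` is false, which is what the chart's DaemonSet and Deployment unit tests in this comparison check with `contains` and `notContains`. To make the three conditionals in this hunk explicit, here is a small C model of them. `ControllerValues`, `append_conditional_args` and `args_contain` are hypothetical names; the real rendering is done by Helm's Go templates, not by code like this.

```c
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical subset of .Values.controller used by the hunk above. */
typedef struct {
    bool watch_ingress_without_class;   /* controller.watchIngressWithoutClass */
    bool metrics_enabled;               /* controller.metrics.enabled */
    bool enable_topology_aware_routing; /* controller.enableTopologyAwareRouting */
} ControllerValues;

/* Appends the conditionally rendered flags, in template order, to args
 * (starting at index n, never exceeding cap). Returns the new count. */
static size_t
append_conditional_args(const ControllerValues *v,
                        const char **args, size_t n, size_t cap)
{
    if (v->watch_ingress_without_class && n < cap)
        args[n++] = "--watch-ingress-without-class=true";
    if (!v->metrics_enabled && n < cap)
        args[n++] = "--enable-metrics=false"; /* rendered only when metrics are off */
    if (v->enable_topology_aware_routing && n < cap)
        args[n++] = "--enable-topology-aware-routing=true";
    return n;
}

/* Mirrors the unit tests' `contains` check on the container args list. */
static bool
args_contain(const char **args, size_t n, const char *flag)
{
    for (size_t i = 0; i < n; i++)
        if (strcmp(args[i], flag) == 0)
            return true;
    return false;
}
```

Because the flag is emitted only inside `if not .Values.controller.metrics.enabled`, a chart with metrics enabled passes no `--enable-metrics` argument at all and leaves the controller's own default in effect.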

@@ -1,4 +1,4 @@
{{- if and ( .Values.controller.metrics.enabled ) ( .Values.controller.metrics.prometheusRule.enabled ) ( .Capabilities.APIVersions.Has "monitoring.coreos.com/v1" ) -}}
{{- if and .Values.controller.metrics.enabled .Values.controller.metrics.prometheusRule.enabled -}}
apiVersion: monitoring.coreos.com/v1
kind: PrometheusRule
metadata:

@@ -34,7 +34,7 @@ spec:
http-headers: false
request-body: false
log-destination:
cloud: false
cloud: true
stdout:
format: json-formatted
---

@@ -15,3 +15,37 @@ tests:
- equal:
path: metadata.name
value: RELEASE-NAME-open-appsec-k8s-nginx-ingress-controller

- it: should create a DaemonSet with argument `--enable-metrics=false` if `controller.metrics.enabled` is false
set:
controller.kind: DaemonSet
kind: Vanilla
controller.metrics.enabled: false
asserts:
- contains:
path: spec.template.spec.containers[0].args
content: --enable-metrics=false

- it: should create a DaemonSet without argument `--enable-metrics=false` if `controller.metrics.enabled` is true
set:
controller.kind: DaemonSet
kind: Vanilla
controller.metrics.enabled: true
asserts:
- notContains:
path: spec.template.spec.containers[0].args
content: --enable-metrics=false

- it: should create a DaemonSet with resource limits if `controller.resources.limits` is set
set:
controller.kind: DaemonSet
kind: Vanilla
controller.resources.limits.cpu: 500m
controller.resources.limits.memory: 512Mi
asserts:
- equal:
path: spec.template.spec.containers[0].resources.limits.cpu
value: 500m
- equal:
path: spec.template.spec.containers[0].resources.limits.memory
value: 512Mi

@@ -4,8 +4,6 @@ templates:

tests:
- it: should create a Deployment
set:
kind: Vanilla
asserts:
- hasDocuments:
count: 1

@@ -24,6 +22,22 @@ tests:
path: spec.replicas
value: 3

- it: should create a Deployment with argument `--enable-metrics=false` if `controller.metrics.enabled` is false
set:
controller.metrics.enabled: false
asserts:
- contains:
path: spec.template.spec.containers[0].args
content: --enable-metrics=false

- it: should create a Deployment without argument `--enable-metrics=false` if `controller.metrics.enabled` is true
set:
controller.metrics.enabled: true
asserts:
- notContains:
path: spec.template.spec.containers[0].args
content: --enable-metrics=false

- it: should create a Deployment with resource limits if `controller.resources.limits` is set
set:
controller.resources.limits.cpu: 500m

@@ -26,8 +26,8 @@ controller:
## for backwards compatibility consider setting the full image url via the repository value below
## use *either* current default registry/image or repository format or installing chart by providing the values.yaml will fail
## repository:
tag: "v1.9.6"
digest: sha256:1405cc613bd95b2c6edd8b2a152510ae91c7e62aea4698500d23b2145960ab9c
tag: "v1.10.0"
digest: sha256:42b3f0e5d0846876b1791cd3afeb5f1cbbe4259d6f35651dcc1b5c980925379c
digestChroot: sha256:7eb46ff733429e0e46892903c7394aff149ac6d284d92b3946f3baf7ff26a096
pullPolicy: IfNotPresent
runAsNonRoot: true

@@ -781,8 +781,8 @@ controller:
## for backwards compatibility consider setting the full image url via the repository value below
## use *either* current default registry/image or repository format or installing chart by providing the values.yaml will fail
## repository:
tag: v20231226-1a7112e06
digest: sha256:25d6a5f11211cc5c3f9f2bf552b585374af287b4debf693cacbe2da47daa5084
tag: v1.4.0
digest: sha256:44d1d0e9f19c63f58b380c5fddaca7cf22c7cee564adeff365225a5df5ef3334
pullPolicy: IfNotPresent
# -- Provide a priority class name to the webhook patching job
##

@@ -1198,7 +1198,7 @@ appsec:
image:
registry: ghcr.io/openappsec
image: smartsync-tuning
tag: 1.1.3
tag: latest
enabled: false
replicaCount: 1
securityContext:

@@ -1,5 +1,27 @@

|

||||

# Changelog

|

||||

|

||||

## 2.38.0

|

||||

|

||||

### Changes

|

||||

|

||||

* Added support for setting `SVC.tls.appProtocol` and `SVC.http.appProtocol` values to configure the appProtocol fields

|

||||

for Kubernetes Service HTTP and TLS ports. It might be useful for integration with external load balancers like GCP.

|

||||

[#1018](https://github.com/Kong/charts/pull/1018)

|

||||

|

||||

## 2.37.1

|

||||

|

||||

* Rename the controller status port. This fixes a collision with the proxy status port in the Prometheus ServiceMonitor.

|

||||

[#1008](https://github.com/Kong/charts/pull/1008)

|

||||

|

||||

## 2.37.0

|

||||

|

||||

### Changes

|

||||

|

||||

* Bumped default `kong/kubernetes-ingress-controller` image tag and updated CRDs to 3.1.

|

||||

[#1011](https://github.com/Kong/charts/pull/1011)

|

||||

* Bumped default `kong` image tag to 3.6.

|

||||

[#1011](https://github.com/Kong/charts/pull/1011)
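Release 2.37.0 moves the default images to `kong` 3.6 and `kong/kubernetes-ingress-controller` 3.1. The old snapshot output in this diff shows the previous defaults were 3.5 and 3.0. A deployment that has to stay on those versions would need to pin them explicitly, as in the sketch below. The sketch assumes the upstream Kong chart keys `image.repository`, `image.tag`, `ingressController.image.repository` and `ingressController.image.tag`. The open-appsec fork may configure its images differently, so check these key names against its `values.yaml`. This sketch also does not settle whether controller 3.0 works correctly with the 3.1 CRDs that this release installs.

```yaml
# Hypothetical values override pinning the pre-2.37.0 image defaults.
# Key names follow the upstream Kong chart layout (assumption for this fork).
image:
  repository: kong
  tag: "3.5"
ingressController:
  image:
    repository: kong/kubernetes-ingress-controller
    tag: "3.0"
```

The tags are quoted deliberately. Without quotes, YAML reads `3.0` as a number, and Helm may then render it as `3`, which is not an existing image tag.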


## 2.36.0

### Fixed

@@ -1,5 +1,5 @@
apiVersion: v2
appVersion: 1.1.6
appVersion: 1.1.8
dependencies:
- condition: postgresql.enabled
name: postgresql
@@ -14,4 +14,4 @@ maintainers:
name: open-appsec-kong
sources:
- https://github.com/Kong/charts/tree/main/charts/kong
version: 2.36.0
version: 2.38.0

@@ -666,40 +666,42 @@ nodes.
mixed TCP/UDP LoadBalancer Services). It _does not_ support the `http`, `tls`,
or `ingress` sections, as it is used only for stream listens.

| Parameter | Description | Default |
|------------------------------------|---------------------------------------------------------------------------------------|--------------------------|
| SVC.enabled | Create Service resource for SVC (admin, proxy, manager, etc.) | |
| SVC.http.enabled | Enables http on the service | |
| SVC.http.servicePort | Service port to use for http | |
| SVC.http.containerPort | Container port to use for http | |
| SVC.http.nodePort | Node port to use for http | |
| SVC.http.hostPort | Host port to use for http | |
| SVC.http.parameters | Array of additional listen parameters | `[]` |
| SVC.tls.enabled | Enables TLS on the service | |
| SVC.tls.containerPort | Container port to use for TLS | |
| SVC.tls.servicePort | Service port to use for TLS | |
| SVC.tls.nodePort | Node port to use for TLS | |
| SVC.tls.hostPort | Host port to use for TLS | |
| SVC.tls.overrideServiceTargetPort | Override service port to use for TLS without touching Kong containerPort | |
| SVC.tls.parameters | Array of additional listen parameters | `["http2"]` |
| SVC.type | k8s service type. Options: NodePort, ClusterIP, LoadBalancer | |
| SVC.clusterIP | k8s service clusterIP | |

| SVC.loadBalancerClass | loadBalancerClass to use for LoadBalancer provisioning | |
| SVC.loadBalancerSourceRanges | Limit service access to CIDRs if set and service type is `LoadBalancer` | `[]` |
| SVC.loadBalancerIP | Reuse an existing ingress static IP for the service | |
| SVC.externalIPs | IPs for which nodes in the cluster will also accept traffic for the service | `[]` |

| SVC.externalTrafficPolicy | k8s service's externalTrafficPolicy. Options: Cluster, Local | |
| SVC.ingress.enabled | Enable ingress resource creation (works with SVC.type=ClusterIP) | `false` |
| SVC.ingress.ingressClassName | Set the ingressClassName to associate this Ingress with an IngressClass | |
| SVC.ingress.hostname | Ingress hostname | `""` |
| SVC.ingress.path | Ingress path. | `/` |
| SVC.ingress.pathType | Ingress pathType. One of `ImplementationSpecific`, `Exact` or `Prefix` | `ImplementationSpecific` |
| SVC.ingress.hosts | Slice of hosts configurations, including `hostname`, `path` and `pathType` keys | `[]` |
| SVC.ingress.tls | Name of secret resource or slice of `secretName` and `hosts` keys | |
| SVC.ingress.annotations | Ingress annotations. See documentation for your ingress controller for details | `{}` |
| SVC.ingress.labels | Ingress labels. Additional custom labels to add to the ingress. | `{}` |
| SVC.annotations | Service annotations | `{}` |
| SVC.labels | Service labels | `{}` |

| Parameter | Description | Default |
|-----------------------------------|-------------------------------------------------------------------------------------------|--------------------------|
| SVC.enabled | Create Service resource for SVC (admin, proxy, manager, etc.) | |
| SVC.http.enabled | Enables http on the service | |
| SVC.http.servicePort | Service port to use for http | |
| SVC.http.containerPort | Container port to use for http | |
| SVC.http.nodePort | Node port to use for http | |
| SVC.http.hostPort | Host port to use for http | |
| SVC.http.parameters | Array of additional listen parameters | `[]` |
| SVC.http.appProtocol | `appProtocol` to be set in a Service's port. If left empty, no `appProtocol` will be set. | |
| SVC.tls.enabled | Enables TLS on the service | |
| SVC.tls.containerPort | Container port to use for TLS | |
| SVC.tls.servicePort | Service port to use for TLS | |
| SVC.tls.nodePort | Node port to use for TLS | |
| SVC.tls.hostPort | Host port to use for TLS | |
| SVC.tls.overrideServiceTargetPort | Override service port to use for TLS without touching Kong containerPort | |
| SVC.tls.parameters | Array of additional listen parameters | `["http2"]` |
| SVC.tls.appProtocol | `appProtocol` to be set in a Service's port. If left empty, no `appProtocol` will be set. | |
| SVC.type | k8s service type. Options: NodePort, ClusterIP, LoadBalancer | |
| SVC.clusterIP | k8s service clusterIP | |

| SVC.loadBalancerClass | loadBalancerClass to use for LoadBalancer provisioning | |
| SVC.loadBalancerSourceRanges | Limit service access to CIDRs if set and service type is `LoadBalancer` | `[]` |
| SVC.loadBalancerIP | Reuse an existing ingress static IP for the service | |
| SVC.externalIPs | IPs for which nodes in the cluster will also accept traffic for the service | `[]` |

| SVC.externalTrafficPolicy | k8s service's externalTrafficPolicy. Options: Cluster, Local | |
| SVC.ingress.enabled | Enable ingress resource creation (works with SVC.type=ClusterIP) | `false` |
| SVC.ingress.ingressClassName | Set the ingressClassName to associate this Ingress with an IngressClass | |
| SVC.ingress.hostname | Ingress hostname | `""` |
| SVC.ingress.path | Ingress path. | `/` |
| SVC.ingress.pathType | Ingress pathType. One of `ImplementationSpecific`, `Exact` or `Prefix` | `ImplementationSpecific` |
| SVC.ingress.hosts | Slice of hosts configurations, including `hostname`, `path` and `pathType` keys | `[]` |
| SVC.ingress.tls | Name of secret resource or slice of `secretName` and `hosts` keys | |
| SVC.ingress.annotations | Ingress annotations. See documentation for your ingress controller for details | `{}` |
| SVC.ingress.labels | Ingress labels. Additional custom labels to add to the ingress. | `{}` |
| SVC.annotations | Service annotations | `{}` |
| SVC.labels | Service labels | `{}` |
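The new `appProtocol` rows apply to any Service block that the table covers. The override below sketches how you might set them on the proxy Service. Choosing `proxy` and these particular protocol values is purely an illustrative assumption. Kubernetes accepts two kinds of values for this field: IANA service names such as `http` and `https`, and prefixed names such as `kubernetes.io/h2c`. The changelog mentions GCP as one external load balancer this can help with. Check that provider's documentation for the value it expects.

```yaml
# Hypothetical values override for the proxy Service (SVC = proxy).
proxy:
  http:
    appProtocol: http    # leave empty to omit appProtocol on the HTTP port
  tls:
    appProtocol: https   # leave empty to omit appProtocol on the TLS port
```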


#### Admin Service mTLS

@@ -9,8 +9,8 @@ SnapShot = """
app.kubernetes.io/instance: chartsnap
app.kubernetes.io/managed-by: Helm
app.kubernetes.io/name: kong
app.kubernetes.io/version: \"3.5\"
helm.sh/chart: kong-2.36.0
app.kubernetes.io/version: \"3.6\"
helm.sh/chart: kong-2.38.0
name: chartsnap-kong
namespace: default
spec:
@@ -33,9 +33,9 @@ SnapShot = """
app.kubernetes.io/instance: chartsnap
app.kubernetes.io/managed-by: Helm
app.kubernetes.io/name: kong
app.kubernetes.io/version: \"3.5\"
helm.sh/chart: kong-2.36.0
version: \"3.5\"
app.kubernetes.io/version: \"3.6\"
helm.sh/chart: kong-2.38.0
version: \"3.6\"
spec:
automountServiceAccountToken: false
containers:
@@ -90,7 +90,7 @@ SnapShot = """
value: \"off\"
- name: KONG_NGINX_DAEMON
value: \"off\"
image: kong:3.5
image: kong:3.6
imagePullPolicy: IfNotPresent
lifecycle:
preStop:
@@ -205,7 +205,7 @@ SnapShot = """
value: 0.0.0.0:8100, [::]:8100
- name: KONG_STREAM_LISTEN
value: \"off\"
image: kong:3.5
image: kong:3.6
imagePullPolicy: IfNotPresent
name: clear-stale-pid
resources: {}
@@ -274,8 +274,8 @@ SnapShot = """
app.kubernetes.io/instance: chartsnap
app.kubernetes.io/managed-by: Helm
app.kubernetes.io/name: kong
app.kubernetes.io/version: \"3.5\"
helm.sh/chart: kong-2.36.0
app.kubernetes.io/version: \"3.6\"
helm.sh/chart: kong-2.38.0
name: chartsnap-kong-custom-dbless-config
namespace: default
- object:
@@ -286,8 +286,8 @@ SnapShot = """
app.kubernetes.io/instance: chartsnap
app.kubernetes.io/managed-by: Helm
app.kubernetes.io/name: kong
app.kubernetes.io/version: \"3.5\"
helm.sh/chart: kong-2.36.0
app.kubernetes.io/version: \"3.6\"
helm.sh/chart: kong-2.38.0
name: chartsnap-kong-admin
namespace: default
spec:
@@ -309,8 +309,8 @@ SnapShot = """
app.kubernetes.io/instance: chartsnap
app.kubernetes.io/managed-by: Helm
app.kubernetes.io/name: kong
app.kubernetes.io/version: \"3.5\"
helm.sh/chart: kong-2.36.0
app.kubernetes.io/version: \"3.6\"
helm.sh/chart: kong-2.38.0
name: chartsnap-kong-manager
namespace: default
spec:
@@ -336,9 +336,9 @@ SnapShot = """
app.kubernetes.io/instance: chartsnap
app.kubernetes.io/managed-by: Helm
app.kubernetes.io/name: kong
app.kubernetes.io/version: \"3.5\"
app.kubernetes.io/version: \"3.6\"
enable-metrics: \"true\"
helm.sh/chart: kong-2.36.0
helm.sh/chart: kong-2.38.0
name: chartsnap-kong-proxy
namespace: default
spec:
@@ -364,8 +364,8 @@ SnapShot = """
app.kubernetes.io/instance: chartsnap
app.kubernetes.io/managed-by: Helm
app.kubernetes.io/name: kong
app.kubernetes.io/version: \"3.5\"
helm.sh/chart: kong-2.36.0
app.kubernetes.io/version: \"3.6\"
helm.sh/chart: kong-2.38.0
name: chartsnap-kong
namespace: default
"""


@@ -9,8 +9,8 @@ SnapShot = """
app.kubernetes.io/instance: chartsnap
app.kubernetes.io/managed-by: Helm
app.kubernetes.io/name: kong
app.kubernetes.io/version: \"3.5\"
helm.sh/chart: kong-2.36.0
app.kubernetes.io/version: \"3.6\"
helm.sh/chart: kong-2.38.0
name: chartsnap-kong-validations
namespace: default
webhooks:
@@ -84,8 +84,8 @@ SnapShot = """
app.kubernetes.io/instance: chartsnap
app.kubernetes.io/managed-by: Helm
app.kubernetes.io/name: kong
app.kubernetes.io/version: \"3.5\"
helm.sh/chart: kong-2.36.0
app.kubernetes.io/version: \"3.6\"
helm.sh/chart: kong-2.38.0
name: chartsnap-kong
namespace: default
spec:
@@ -108,9 +108,9 @@ SnapShot = """
app.kubernetes.io/instance: chartsnap
app.kubernetes.io/managed-by: Helm
app.kubernetes.io/name: kong
app.kubernetes.io/version: \"3.5\"
helm.sh/chart: kong-2.36.0
version: \"3.5\"
app.kubernetes.io/version: \"3.6\"
helm.sh/chart: kong-2.38.0
version: \"3.6\"
spec:
automountServiceAccountToken: false
containers:
@@ -138,7 +138,7 @@ SnapShot = """
value: https://localhost:8444
- name: CONTROLLER_PUBLISH_SERVICE
value: default/chartsnap-kong-proxy
image: kong/kubernetes-ingress-controller:3.0
image: kong/kubernetes-ingress-controller:3.1
imagePullPolicy: IfNotPresent
livenessProbe:
failureThreshold: 3
@@ -159,7 +159,7 @@ SnapShot = """
name: cmetrics
protocol: TCP
- containerPort: 10254
name: status
name: cstatus
protocol: TCP
readinessProbe:
failureThreshold: 3
@@ -240,7 +240,7 @@ SnapShot = """
value: \"off\"
- name: KONG_NGINX_DAEMON
value: \"off\"
image: kong:3.5
image: kong:3.6
imagePullPolicy: IfNotPresent
lifecycle:
preStop:
@@ -350,7 +350,7 @@ SnapShot = """
value: 0.0.0.0:8100, [::]:8100
- name: KONG_STREAM_LISTEN
value: \"off\"
image: kong:3.5
image: kong:3.6
imagePullPolicy: IfNotPresent
name: clear-stale-pid
resources: {}
@@ -408,8 +408,8 @@ SnapShot = """
app.kubernetes.io/instance: chartsnap
app.kubernetes.io/managed-by: Helm
app.kubernetes.io/name: kong
app.kubernetes.io/version: \"3.5\"
helm.sh/chart: kong-2.36.0
app.kubernetes.io/version: \"3.6\"
helm.sh/chart: kong-2.38.0
name: chartsnap-kong
rules:
- apiGroups:
@@ -617,6 +617,38 @@ SnapShot = """
- get
- list
- watch
- apiGroups:
- configuration.konghq.com
resources:
- konglicenses
verbs:
- get
- list
- watch
- apiGroups:
- configuration.konghq.com
resources:
- konglicenses/status
verbs:
- get
- patch
- update
- apiGroups:
- configuration.konghq.com
resources:
- kongvaults
verbs:
- get
- list
- watch
- apiGroups:
- configuration.konghq.com
resources:
- kongvaults/status
verbs:
- get
- patch
- update
- apiGroups:
- configuration.konghq.com
resources:
@@ -657,8 +689,8 @@ SnapShot = """
app.kubernetes.io/instance: chartsnap
app.kubernetes.io/managed-by: Helm
app.kubernetes.io/name: kong
app.kubernetes.io/version: \"3.5\"
helm.sh/chart: kong-2.36.0
app.kubernetes.io/version: \"3.6\"
helm.sh/chart: kong-2.38.0
name: chartsnap-kong
roleRef:
apiGroup: rbac.authorization.k8s.io
@@ -677,8 +709,8 @@ SnapShot = """
app.kubernetes.io/instance: chartsnap
app.kubernetes.io/managed-by: Helm
app.kubernetes.io/name: kong
app.kubernetes.io/version: \"3.5\"
helm.sh/chart: kong-2.36.0
app.kubernetes.io/version: \"3.6\"
helm.sh/chart: kong-2.38.0
name: chartsnap-kong
namespace: default
rules:
@@ -742,8 +774,8 @@ SnapShot = """
app.kubernetes.io/instance: chartsnap
app.kubernetes.io/managed-by: Helm
app.kubernetes.io/name: kong
app.kubernetes.io/version: \"3.5\"
helm.sh/chart: kong-2.36.0
app.kubernetes.io/version: \"3.6\"
helm.sh/chart: kong-2.38.0
name: chartsnap-kong
namespace: default
roleRef:
@@ -766,8 +798,8 @@ SnapShot = """
app.kubernetes.io/instance: chartsnap
app.kubernetes.io/managed-by: Helm
app.kubernetes.io/name: kong
app.kubernetes.io/version: \"3.5\"
helm.sh/chart: kong-2.36.0
app.kubernetes.io/version: \"3.6\"
helm.sh/chart: kong-2.38.0
name: chartsnap-kong-validation-webhook-ca-keypair
namespace: default
type: kubernetes.io/tls
@@ -783,8 +815,8 @@ SnapShot = """
app.kubernetes.io/instance: chartsnap
app.kubernetes.io/managed-by: Helm
app.kubernetes.io/name: kong
app.kubernetes.io/version: \"3.5\"
helm.sh/chart: kong-2.36.0
app.kubernetes.io/version: \"3.6\"
helm.sh/chart: kong-2.38.0
name: chartsnap-kong-validation-webhook-keypair
namespace: default
type: kubernetes.io/tls
@@ -797,8 +829,8 @@ SnapShot = """
app.kubernetes.io/instance: chartsnap
app.kubernetes.io/managed-by: Helm
app.kubernetes.io/name: kong
app.kubernetes.io/version: \"3.5\"
helm.sh/chart: kong-2.36.0
app.kubernetes.io/version: \"3.6\"
helm.sh/chart: kong-2.38.0
name: chartsnap-kong-manager
namespace: default
spec:
@@ -825,9 +857,9 @@ SnapShot = """
app.kubernetes.io/instance: chartsnap
app.kubernetes.io/managed-by: Helm
app.kubernetes.io/name: kong
app.kubernetes.io/version: \"3.5\"
app.kubernetes.io/version: \"3.6\"
enable-metrics: \"true\"
helm.sh/chart: kong-2.36.0
helm.sh/chart: kong-2.38.0
name: chartsnap-kong-proxy
namespace: default
spec:
@@ -854,8 +886,8 @@ SnapShot = """
app.kubernetes.io/instance: chartsnap
app.kubernetes.io/managed-by: Helm
app.kubernetes.io/name: kong
app.kubernetes.io/version: \"3.5\"
helm.sh/chart: kong-2.36.0
app.kubernetes.io/version: \"3.6\"
helm.sh/chart: kong-2.38.0
name: chartsnap-kong-validation-webhook
namespace: default
spec:
@@ -870,8 +902,8 @@ SnapShot = """
app.kubernetes.io/instance: chartsnap
app.kubernetes.io/managed-by: Helm
app.kubernetes.io/name: kong
app.kubernetes.io/version: \"3.5\"
helm.sh/chart: kong-2.36.0
app.kubernetes.io/version: \"3.6\"
helm.sh/chart: kong-2.38.0
- object:
apiVersion: v1
kind: ServiceAccount
@@ -881,8 +913,8 @@ SnapShot = """
app.kubernetes.io/instance: chartsnap
app.kubernetes.io/managed-by: Helm
app.kubernetes.io/name: kong
app.kubernetes.io/version: \"3.5\"
helm.sh/chart: kong-2.36.0
app.kubernetes.io/version: \"3.6\"
helm.sh/chart: kong-2.38.0
name: chartsnap-kong
namespace: default
"""


@@ -8,8 +8,8 @@ SnapShot = """
app.kubernetes.io/instance: chartsnap
app.kubernetes.io/managed-by: Helm
app.kubernetes.io/name: kong
app.kubernetes.io/version: \"3.5\"
helm.sh/chart: kong-2.36.0
app.kubernetes.io/version: \"3.6\"
helm.sh/chart: kong-2.38.0
name: chartsnap-kong-validations
namespace: default
webhooks:
@@ -82,8 +82,8 @@ SnapShot = """
app.kubernetes.io/instance: chartsnap
app.kubernetes.io/managed-by: Helm
app.kubernetes.io/name: kong
app.kubernetes.io/version: \"3.5\"
helm.sh/chart: kong-2.36.0
app.kubernetes.io/version: \"3.6\"
helm.sh/chart: kong-2.38.0
name: chartsnap-kong
namespace: default
spec:
@@ -105,9 +105,9 @@ SnapShot = """
app.kubernetes.io/instance: chartsnap
app.kubernetes.io/managed-by: Helm
app.kubernetes.io/name: kong
app.kubernetes.io/version: \"3.5\"
helm.sh/chart: kong-2.36.0
version: \"3.5\"
app.kubernetes.io/version: \"3.6\"
helm.sh/chart: kong-2.38.0
version: \"3.6\"
spec:
automountServiceAccountToken: false
containers:
@@ -137,7 +137,7 @@ SnapShot = """
value: https://localhost:8444
- name: CONTROLLER_PUBLISH_SERVICE
value: default/chartsnap-kong-proxy
image: kong/kubernetes-ingress-controller:3.0
image: kong/kubernetes-ingress-controller:3.1
imagePullPolicy: IfNotPresent
livenessProbe:
failureThreshold: 3
@@ -158,7 +158,7 @@ SnapShot = """
name: cmetrics
protocol: TCP
- containerPort: 10254
name: status
name: cstatus
protocol: TCP
readinessProbe:
failureThreshold: 3
@@ -241,7 +241,7 @@ SnapShot = """
value: \"off\"
- name: KONG_NGINX_DAEMON
value: \"off\"
image: kong:3.5
image: kong:3.6
imagePullPolicy: IfNotPresent
lifecycle:
preStop:
@@ -353,7 +353,7 @@ SnapShot = """
value: 0.0.0.0:8100, [::]:8100
- name: KONG_STREAM_LISTEN
value: \"off\"
image: kong:3.5
image: kong:3.6
imagePullPolicy: IfNotPresent
name: clear-stale-pid
resources: {}
@@ -410,8 +410,8 @@ SnapShot = """
app.kubernetes.io/instance: chartsnap
app.kubernetes.io/managed-by: Helm
app.kubernetes.io/name: kong
app.kubernetes.io/version: \"3.5\"
helm.sh/chart: kong-2.36.0
app.kubernetes.io/version: \"3.6\"
helm.sh/chart: kong-2.38.0
name: chartsnap-kong
rules:
- apiGroups:
@@ -619,6 +619,38 @@ SnapShot = """
- get
- list
- watch
- apiGroups:
- configuration.konghq.com
resources:
- konglicenses
verbs:
- get
- list
- watch
- apiGroups:
- configuration.konghq.com
resources:
- konglicenses/status
verbs:
- get
- patch
- update
- apiGroups:
- configuration.konghq.com
resources:
- kongvaults
verbs:
- get
- list
- watch
- apiGroups:
- configuration.konghq.com
resources:
- kongvaults/status
verbs:
- get
- patch
- update
- apiGroups:
- configuration.konghq.com
resources:
@@ -658,8 +690,8 @@ SnapShot = """
app.kubernetes.io/instance: chartsnap
app.kubernetes.io/managed-by: Helm
app.kubernetes.io/name: kong
app.kubernetes.io/version: \"3.5\"
helm.sh/chart: kong-2.36.0
app.kubernetes.io/version: \"3.6\"
helm.sh/chart: kong-2.38.0
name: chartsnap-kong
roleRef:
apiGroup: rbac.authorization.k8s.io
@@ -677,8 +709,8 @@ SnapShot = """
app.kubernetes.io/instance: chartsnap
app.kubernetes.io/managed-by: Helm
app.kubernetes.io/name: kong
app.kubernetes.io/version: \"3.5\"
helm.sh/chart: kong-2.36.0
app.kubernetes.io/version: \"3.6\"
helm.sh/chart: kong-2.38.0
name: chartsnap-kong
namespace: default
rules:
@@ -741,8 +773,8 @@ SnapShot = """
app.kubernetes.io/instance: chartsnap
app.kubernetes.io/managed-by: Helm
app.kubernetes.io/name: kong
app.kubernetes.io/version: \"3.5\"
helm.sh/chart: kong-2.36.0
app.kubernetes.io/version: \"3.6\"
helm.sh/chart: kong-2.38.0
name: chartsnap-kong
namespace: default
roleRef:
@@ -764,8 +796,8 @@ SnapShot = """
app.kubernetes.io/instance: chartsnap
app.kubernetes.io/managed-by: Helm
app.kubernetes.io/name: kong
app.kubernetes.io/version: \"3.5\"
helm.sh/chart: kong-2.36.0
app.kubernetes.io/version: \"3.6\"
helm.sh/chart: kong-2.38.0
name: chartsnap-kong-validation-webhook-ca-keypair
namespace: default
type: kubernetes.io/tls
@@ -780,8 +812,8 @@ SnapShot = """
app.kubernetes.io/instance: chartsnap
app.kubernetes.io/managed-by: Helm

|