Mirror of https://github.com/openappsec/openappsec.git

synced 2025-11-15 17:02:15 +03:00

Compare commits

110 Commits

Jan_16_202

...

Nov_12_202

| SHA1 |
|---|
| 3061342b45 |
| 0869b8f24d |
| 1a4ab5f0d7 |
| 4a2d25ab65 |
| f2ca7301b9 |
| 3d11ead170 |
| 39b8c5a5ff |
| de6f1033bd |
| 58958b2436 |
| 59e7f00b3e |
| e102b25b7d |
| 0386431eee |
| fd1a77628e |
| da911582a5 |
| 798dd2a7d1 |
| 6bda60ae84 |
| 5b9769e94e |
| 6693176131 |
| c2ced075eb |
| 0b4bdd3677 |
| d6599cc7bc |
| 4db7a54c27 |
| f3ede0c60e |
| 79bac9f501 |
| 89263f6f34 |
| 5feb12f7e4 |
| a2ee6ca839 |
| 1c10a12f6f |
| e9f6ebd02b |
| 433c7c2d91 |
| 582791e37a |
| a4d1fb6f7f |
| dfbfdca1a9 |
| 36f511f449 |
| f91f283b77 |
| 7c762e97a3 |
| aaa1fbe8ed |
| 67e68c84c3 |
| 149a7305b7 |
| ea20a51689 |
| 19f2383ae2 |
| 4038c18bda |
| a9b6d2e715 |
| 81c75495cc |
| 5505022f47 |
| b25fd8def5 |
| 702c1184ea |
| b3cfd7e9d8 |
| e36b990161 |
| 09868e6d7c |
| e25f517c19 |
| 42a31e37b1 |
| abe275c828 |
| 71d198f41a |
| 3ed569fe35 |
| c7cb494e2b |
| edd357f297 |
| 08583fdb4c |
| e5ef6c5ad4 |
| 3c24666643 |
| 19e8906704 |
| 01b6544ca5 |
| ebc2b2be0d |
| fc6355a3b2 |
| c89001b6e0 |
| a59f079ef7 |
| 22f1a984aa |
| 7c98ba9834 |
| 5192380549 |
| a270456278 |
| 795d07bd41 |
| 45e51ddbf7 |
| 36f65b9b1f |
| dfcc71c8c2 |
| 1e8540f166 |
| a754082405 |
| 3aa0885f74 |
| 3b49cfec54 |
| d7494b1bbc |
| 29bd82d125 |
| c49debe5d9 |
| 240f58217a |
| 2c750513a1 |
| fd2d9fa081 |
| cd4fb6e3e8 |
| bfb5fcb50d |
| cf14e6f383 |
| 413da6f7a1 |
| 997d2e4b42 |
| 3f5a3b27a4 |
| 5848f1d7e3 |
| a9f917d638 |
| 03f4d6bf39 |
| 61bb2ce4c7 |
| 6480357fde |
| 2a7ddf0666 |
| fef95b12b3 |
| 38e6e1bbcf |
| fd6239f44a |
| 408ac667de |
| 2072eba07a |
| 1f24c12a34 |
| 161fea1361 |
| f3ff6c0f4d |
| 6a9b33ff93 |
| f7934cd09d |
| d130454275 |
| baa1d9ae03 |
| bb0714e4cb |
| 35b6a4aebf |

2

.gitattributes

vendored

Normal file

@@ -0,0 +1,2 @@

```
build_system/docker/install-cp-agent-intelligence-service.sh binary
build_system/docker/install-cp-crowdsec-aux.sh binary
```

@@ -3,6 +3,11 @@ project (ngen)

```cmake
set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -fPIC -Wall -Wno-terminate -Dalpine")

execute_process(COMMAND grep -c "Alpine Linux" /etc/os-release OUTPUT_VARIABLE IS_ALPINE)
if(IS_ALPINE EQUAL "1")
set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -Dalpine")
endif()

find_package(Boost REQUIRED)
find_package(ZLIB REQUIRED)
find_package(GTest REQUIRED)
```
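The new hunk decides whether to add `-Dalpine` by counting the lines of `/etc/os-release` that contain "Alpine Linux". You can reproduce the same `grep -c` call in shell to check what that count is on a particular build host. This is a minimal sketch and is not part of the commit. `alpine_line_count` and `is_alpine` are hypothetical helper names. The os-release path is a parameter so the check can be tried against sample files.

```bash
#!/usr/bin/env bash
# Hypothetical helpers mirroring the CMake check:
#   execute_process(COMMAND grep -c "Alpine Linux" /etc/os-release ...)

# Print how many lines of an os-release file mention "Alpine Linux".
# grep -c prints the count (including 0) but exits with status 1 when the
# count is 0, so "|| true" keeps a zero count from being treated as a failure.
alpine_line_count() {
  local os_release="${1:-/etc/os-release}"
  grep -c "Alpine Linux" "$os_release" || true
}

# Succeed (exit status 0) when at least one line matches.
# A missing or unreadable file yields an empty count, treated as 0.
is_alpine() {
  local count
  count="$(alpine_line_count "${1:-/etc/os-release}")"
  [ "${count:-0}" -gt 0 ]
}
```

The sketch differs from the CMake condition in one deliberate way. It treats any positive count as Alpine, while the CMake condition requires the count to equal exactly 1. An os-release file can mention the distribution name on more than one line (for example in both `NAME` and `PRETTY_NAME`). In that case, `grep -c` prints a number greater than 1.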

@@ -28,6 +33,7 @@ include_directories(core/include/services_sdk/interfaces)

```cmake
include_directories(core/include/services_sdk/resources)
include_directories(core/include/services_sdk/utilities)
include_directories(core/include/attachments)
include_directories(events/include)
include_directories(components/include)

add_subdirectory(build_system)
```

@@ -1,37 +1,64 @@

# open-appsec Contributing Guide

Thank you for your interest in open-appsec. We welcome everyone that wishes to share their knowledge and expertise to enhance and expand the project.

# open-appsec Contributing Guide🌴

Read our [Code of Conduct](./CODE_OF_CONDUCT.md) to keep our community approachable and respectable.

Thank you for your interest in open-appsec. We welcome contributions of all kinds; there is no need to write code to be helpful! All of the following tasks are noble and worthy contributions that you can make without coding:

In this guide we will provide an overview of the various contribution options' guidelines - from reporting or fixing a bug, to suggesting an enhancement.

- Reporting security vulnerabilities
- Reporting a bug
- Helping a member of the community
- Notes about our documentation
- Providing feedback and feature requests

Before making any kind of contribution, read our [Code of Conduct](./CODE_OF_CONDUCT.md) to keep our community approachable and respectable.

This guide will provide an overview of the various contribution options' guidelines - from reporting or fixing a bug to suggesting an enhancement.

## Reporting security vulnerabilities

If you've found a vulnerability or a potential vulnerability in open-appsec please let us know at [security-alert@openappsec.io](mailto:security-alert@openappsec.io). We'll send a confirmation email to acknowledge your report within 24 hours, and we'll send an additional email when we've identified the issue positively or negatively.

If you've found a vulnerability or a potential vulnerability in open-appsec please let us know at [security-alert@openappsec.io](mailto:security-alert@openappsec.io). We'll send a confirmation email to acknowledge your report within 24 hours and send an additional email when we've identified the issue positively or negatively.

An internal process will be activated upon determining the validity of a reported security vulnerability, which will end with releasing a fix and deciding on the applicable disclosure actions. The reporter of the issue will receive updates of this process' progress.

An internal process will be activated upon determining the validity of a reported security vulnerability, which will end with releasing a fix and deciding on the appropriate disclosure actions. The reporter of the issue will receive updates on this process' progress.

## Reporting a bug

**Important - If the bug you wish to report regards a suspicion of a security vulnerability, please refer to the "Reporting security vulnerability" section**

**Important - If the bug you wish to report regards a suspicion of a security vulnerability, please refer to the [Reporting security vulnerabilities](#reporting-security-vulnerabilities) section**

To report a bug, you can either open a new issue using a relevant [issue form](https://github.com/github/docs/issues/new/choose), or, alternatively, [contact us via our open-appsec open source distribution list](mailto:opensource@openappsec.io).

To report a bug, you can either open a new issue using a relevant [issue form](https://github.com/github/docs/issues/new/choose) or [contact us via our open-appsec open source distribution list](mailto:opensource@openappsec.io).

Be sure to include a **title and clear description**, as much relevant information as possible, and a **code sample** or an **executable test case** demonstrating the expected behavior that is not occurring.

## Contributing a fix to a bug

Please [contact us via our open-appsec open source distribution list](mailto:opensource@openappsec.io) before writing your code. We will want to make sure we understand the boundaries of the proposed fix, that the relevant coding style is clear for the proposed fix's location in the code, and that the proposed contribution is relevant and eligible.

Please [contact us via our open-appsec open source distribution list](mailto:opensource@openappsec.io) before writing your code. We will want to make sure we understand the boundaries of the proposed fix, that the relevant coding style is clear for the proposed fix's location in the code, and that the suggested contribution is relevant and eligible.

Once you've received our confirmation, follow these steps:

1. Fork the repository to your GitHub account.
2. Clone your forked repository to your local machine.
3. Add your contributions to relevant locations in the local copy of the codebase.
4. Push your changes back to your forked repository.
5. Open a pull request (PR) against the main branch of the original repository.
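Steps 2 to 4 correspond to ordinary git commands. Steps 1 and 5 (forking and opening the pull request) happen in the GitHub web interface. The helpers below are a hypothetical sketch, not project tooling:

- `start_fix`, `publish_fix`, `run` and the `DRY_RUN` variable are illustrative names.
- The fork URL assumes GitHub's usual `https://github.com/<user>/openappsec.git` layout.
- With `DRY_RUN=1`, the commands are printed instead of executed.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for steps 2-4; forking (step 1) and opening the PR
# (step 5) are done in the GitHub web UI.

# Execute a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Steps 2-3: clone your fork and create a topic branch for the fix.
# Usage: start_fix <github-user> <branch>
start_fix() {
  local gh_user="$1" branch="$2"
  run git clone "https://github.com/${gh_user}/openappsec.git" &&
    run git -C openappsec checkout -b "$branch"
}

# Steps 3-4: after editing, stage and commit everything, then push to the fork.
# Usage: publish_fix <branch> <commit-message>
publish_fix() {
  local branch="$1" message="$2"
  run git -C openappsec add -A &&
    run git -C openappsec commit -m "$message" &&
    run git -C openappsec push origin "$branch"
}
```

A typical session would be:

1. Run `start_fix <user> fix-typo`.
2. Edit the files.
3. Run `publish_fix fix-typo "Describe the fix"`.
4. Open the PR against `main` on GitHub.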

## Contributing code-independent enhancements

For any code-independent enhancements (such as docker-compose files or instructions on how to compile on different OSs), please follow these steps:

1. [Suggest your change via our open-appsec open-source distribution list](mailto:opensource@openappsec.io) to inform us about your possible contribution and wait for our confirmation.
2. Fork the repository to your GitHub account.
3. Clone your forked repository to your local machine.
4. Add your contributions to the "contrib" folder in the local copy of the codebase.
5. Push your changes back to your forked repository.
6. Open a pull request (PR) against the main branch of the original repository.

Please note that during the PR review we might adjust the location of the contributions.

## Proposing an enhancement

Please [suggest your change via our open-appsec open source distribution list](mailto:opensource@openappsec.io) before writing your code. We will contact you to make sure we understand the boundaries of the proposed fix, that the relevant coding style is clear for the proposed fix's location in the code, and that the proposed contribution is relevant and eligible. There may be additional considerations that we would like to discuss with you before implementing the enhancement.

Please [suggest your change via our open-appsec open-source distribution list](mailto:opensource@openappsec.io) before writing your code. We will contact you to make sure we understand the boundaries of the proposed fix, that the relevant coding style is clear for the proposed fix's location in the code, and that the suggested contribution is relevant and eligible. There may be additional considerations that we would like to discuss with you before implementing the enhancement.

## Open Source documentation issues

For reporting or suggesting a change, of any issue detected in the documentation files of our open source repositories, please use the same guidelines as bug reports/fixes.

To propose changes to our [documentation](https://docs.openappsec.io/?utm_medium=web&utm_source=wix&utm_content=top_menu), you can either open a new issue using a relevant [issue form](https://github.com/github/docs/issues/new/choose) or [contact us via our open-appsec open source distribution list](mailto:opensource@openappsec.io).

# Final Thanks

We value all efforts to read, suggest changes and/or contribute to our open source files. Thank you for your time and efforts.

# Final thanks

We value all efforts to read, suggest changes, and/or contribute to our open-source files. Thank you for your time and efforts.

The open-appsec Team

88

README.md

@@ -6,7 +6,7 @@

|

||||

[](https://bestpractices.coreinfrastructure.org/projects/6629)

|

||||

|

||||

# About

|

||||

[open-appsec](https://www.openappsec.io) (openappsec.io) builds on machine learning to provide preemptive web app & API threat protection against OWASP-Top-10 and zero-day attacks. It can be deployed as add-on to Kubernetes Ingress, NGINX, Envoy (soon) and API Gateways.

|

||||

[open-appsec](https://www.openappsec.io) (openappsec.io) builds on machine learning to provide preemptive web app & API threat protection against OWASP-Top-10 and zero-day attacks. It can be deployed as an add-on to Kubernetes Ingress, NGINX, Envoy (soon), and API Gateways.

|

||||

|

||||

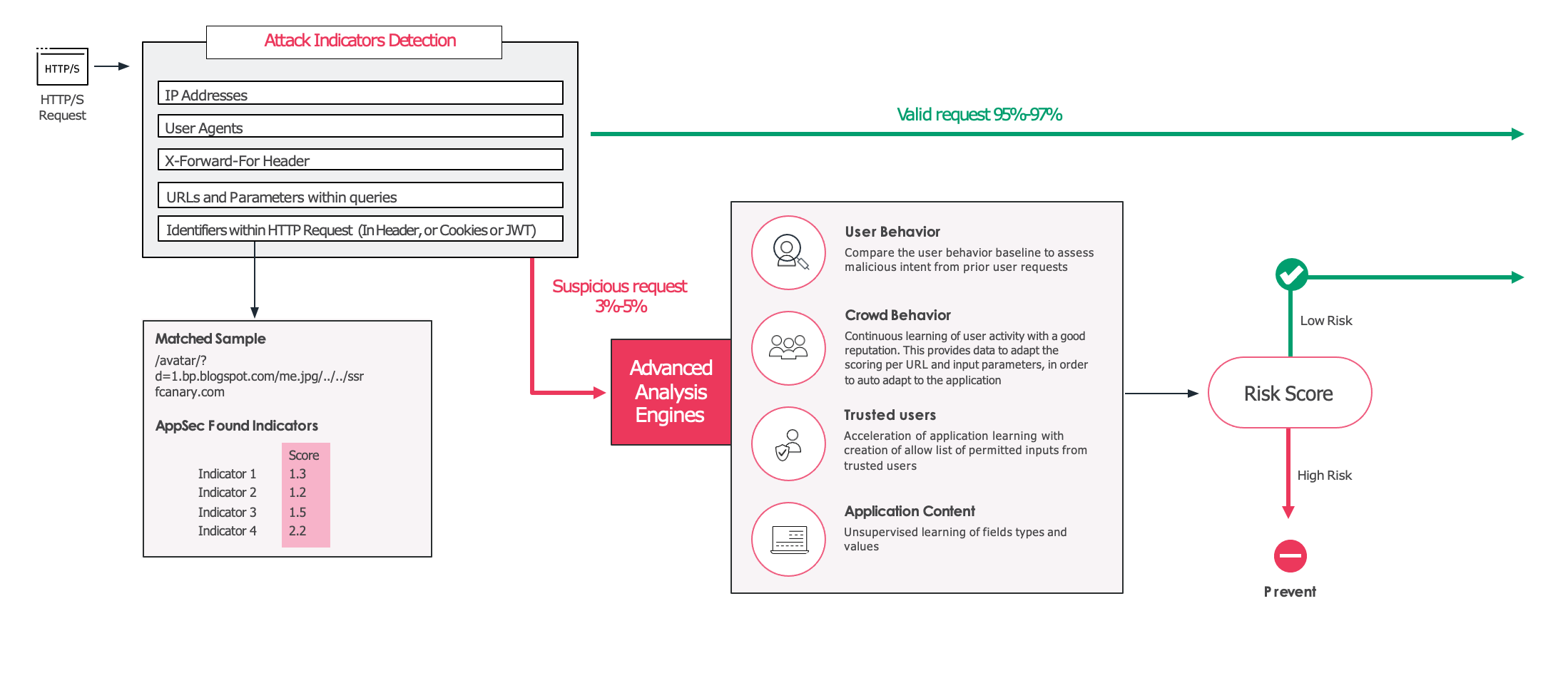

The open-appsec engine learns how users normally interact with your web application. It then uses this information to automatically detect requests that fall outside of normal operations, and conducts further analysis to decide whether the request is malicious or not.

|

||||

|

||||

@@ -14,52 +14,78 @@ Upon every HTTP request, all parts are decoded, JSON and XML sections are extrac

|

||||

|

||||

Every request to the application goes through two phases:

|

||||

|

||||

1. Multiple variables are fed to the machine learning engine. These variables, which are either directly extracted from the HTTP request or decoded from different parts of the payload, include attack indicators, IP addresses, user agents, fingerprints, and many other considerations. The supervised model of the machine learning engine uses these variables to compare the request with many common attack patterns found across the globe.

|

||||

1. Multiple variables are fed to the machine-learning engine. These variables, which are either directly extracted from the HTTP request or decoded from different parts of the payload, include attack indicators, IP addresses, user agents, fingerprints, and many other considerations. The supervised model of the machine learning engine uses these variables to compare the request with many common attack patterns found across the globe.

|

||||

|

||||

2. If the request is identified as a valid and legitimate request, the request is allowed, and forwarded to your application. If, however, the request is considered suspicious or high risk, it then gets evaluated by the unsupervised model, which was trained in your specific environment. This model uses information such as the URL and the users involved to create a final confidence score that determines whether the request should be allowed or blocked.

|

||||

2. If the request is identified as a valid and legitimate request the request is allowed, and forwarded to your application. If, however, the request is considered suspicious or high risk, it then gets evaluated by the unsupervised model, which was trained in your specific environment. This model uses information such as the URL and the users involved to create a final confidence score that determines whether the request should be allowed or blocked.
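Taken together, the two phases form a cascade. Every request passes the supervised check, and only requests it flags are scored by the environment-specific model. The toy function below sketches that control flow only.

The README does not say how the final confidence score becomes a decision. The following are all assumptions made for illustration: the comparison against a threshold, the 0-100 scale, the default of 80, and the verdict labels.

```bash
#!/usr/bin/env bash
# Toy model of the two-phase flow. The real engine is C++; the verdict labels,
# the 0-100 score scale and the default threshold of 80 are hypothetical.
#
# Usage: decide_request <phase1-verdict> [phase2-score] [threshold]
#   phase1-verdict: "legitimate" or "suspicious" (supervised model)
#   phase2-score:   0-100 confidence that the request is malicious
#                   (unsupervised, environment-specific model)
decide_request() {
  local verdict="$1" score="${2:-0}" threshold="${3:-80}"
  # Phase 1: a request the supervised model considers legitimate is forwarded.
  if [ "$verdict" = "legitimate" ]; then
    echo "allow"
    return 0
  fi
  # Phase 2: a suspicious request is re-scored; the final score decides.
  if [ "$score" -ge "$threshold" ]; then
    echo "block"
  else
    echo "allow"
  fi
}
```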

|

||||

|

||||

The project is currently in Beta and feedback is most welcomed!

|

||||

|

||||

|

||||

## Machine Learning models

|

||||

|

||||

open-appsec uses two models:

|

||||

open-appsec uses two machine learning models:

|

||||

|

||||

1. A supervised model that was trained offline based on millions of requests, both malicious and benign.

|

||||

|

||||

* A basic model is provided as part of this repository. It is recommended for use in Monitor-Only and Test environments.

|

||||

* An advanced model which is more accurate and recommended for Production use, can be downloaded from [open-appsec portal](https://my.openappsec.io). User Menu->Download advanced ML model. This model updates from time to time and you will get an email when these updates happen.

|

||||

* A **basic model** is provided as part of this repository. It is recommended for use in Monitor-Only and Test environments.

|

||||

* An **advanced model** which is more accurate and **recommended for Production** use can be downloaded from the [open-appsec portal](https://my.openappsec.io)->User Menu->Download advanced ML model. This model updates from time to time and you will get an email when these updates happen.

|

||||

|

||||

2. An unsupervised model that is being built in real time in the protected environment. This model uses traffic patterns specific to the environment.

|

||||

|

||||

|

||||

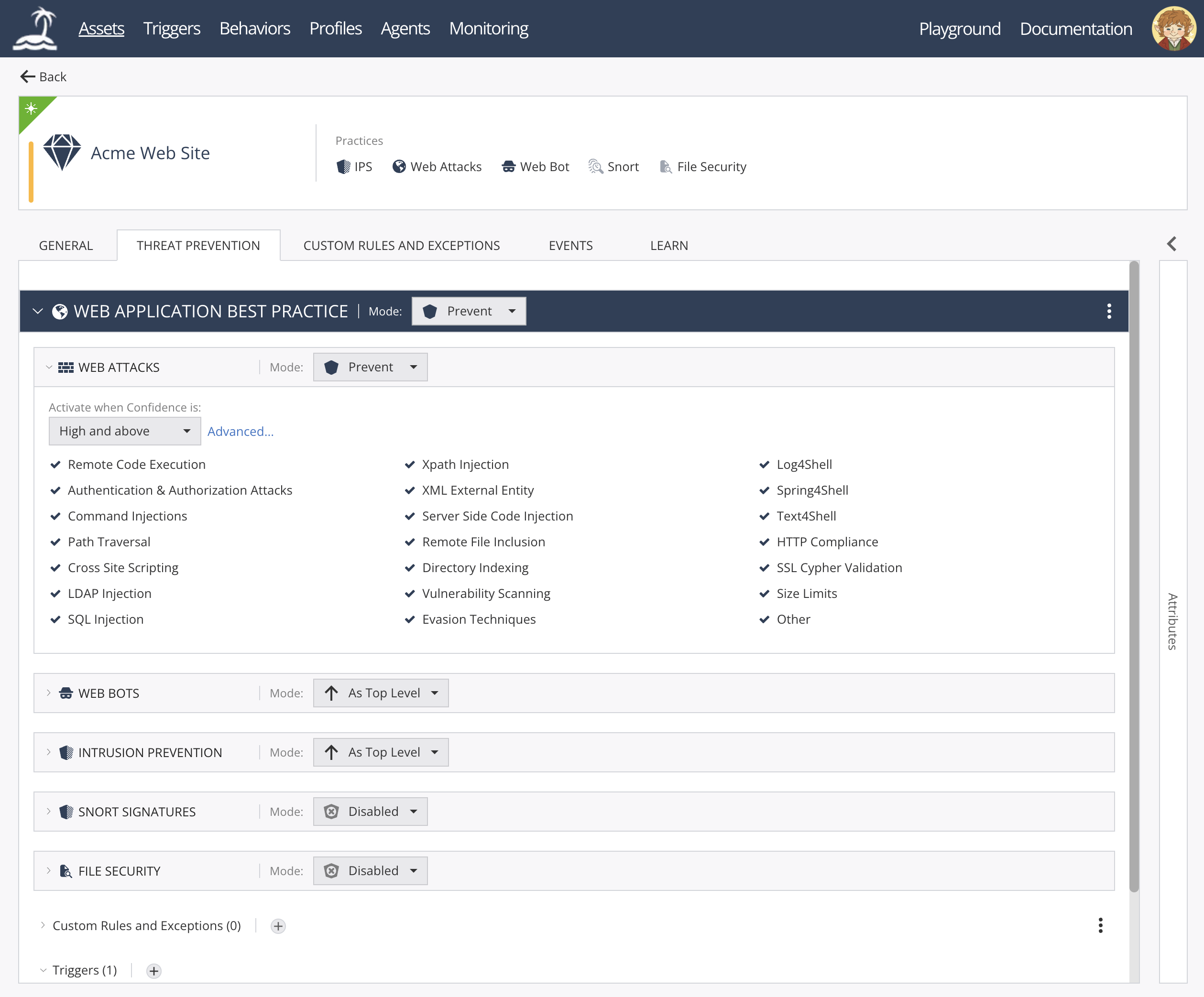

# Management

|

||||

|

||||

open-appsec can be managed using multiple methods:

|

||||

* [Declarative configuration files](https://docs.openappsec.io/getting-started/getting-started)

|

||||

* [Kubernetes Helm Charts and annotations](https://docs.openappsec.io/getting-started/getting-started)

|

||||

* [Using SaaS Web Management](https://docs.openappsec.io/getting-started/using-the-web-ui-saas)

|

||||

|

||||

open-appsec Web UI:

|

||||

|

||||

|

||||

|

||||

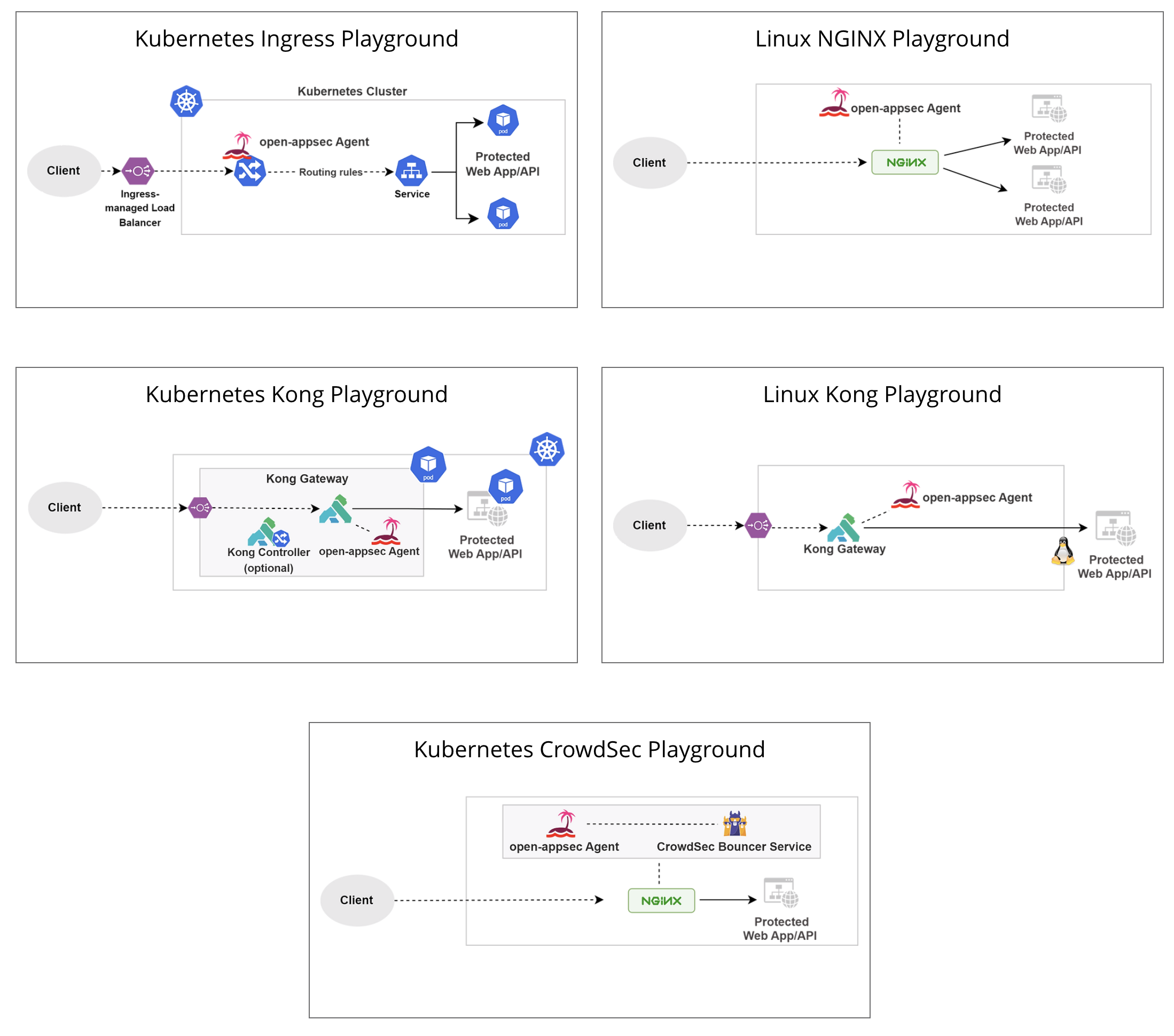

## Deployment Playgrounds (Virtual labs)

|

||||

You can experiment with open-appsec using [Playgrounds](https://www.openappsec.io/playground)

|

||||

|

||||

|

||||

|

||||

# Resources

|

||||

* [Project Website](https://openappsec.io)

|

||||

* [Offical documentation](https://docs.openappsec.io/)

|

||||

* [Video Tutorial](https://www.youtube.com/playlist?list=PL8pzPlPbjDY0V2u7E-KZQrzIiw41fWB0h)

|

||||

* [Live Kubernetes Playground](https://killercoda.com/open-appsec/scenario/simple-appsec-kubernetes-ingress)

|

||||

* [Live Linux/NGINX Playground](https://killercoda.com/open-appsec/scenario/simple-appsec-for-nginx)

|

||||

* [Offical Documentation](https://docs.openappsec.io/)

|

||||

* [Video Tutorials](https://www.openappsec.io/tutorials)

|

||||

|

||||

# Installation

|

||||

|

||||

# open-appsec Installation

|

||||

|

||||

Installer for Kubernetes:

|

||||

For Kubernetes (NGINX Ingress) using the installer:

|

||||

|

||||

```bash

|

||||

wget https://downloads.openappsec.io/open-appsec-k8s-install && chmod +x open-appsec-k8s-install

|

||||

./open-appsec-k8s-install

|

||||

$ wget https://downloads.openappsec.io/open-appsec-k8s-install && chmod +x open-appsec-k8s-install

|

||||

$ ./open-appsec-k8s-install

|

||||

```

|

||||

|

||||

Installer for standard NGINX (list of supported/pre-compiled NGINX attachements is available [here](https://downloads.openappsec.io/supported-nginx.txt)):

|

||||

For Kubernetes (NGINX or Kong) using Helm: follow [documentation](https://docs.openappsec.io/getting-started/start-with-kubernetes/install-using-helm-ingress-nginx-and-kong) – use this method if you’ve built your own containers.

|

||||

|

||||

For Linux (NGINX or Kong) using the installer (list of supported/pre-compiled NGINX attachments is available [here](https://downloads.openappsec.io/packages/supported-nginx.txt)):

|

||||

|

||||

```bash

|

||||

wget https://downloads.openappsec.io/open-appsec-nginx-install && chmod +x open-appsec-nginx-install

|

||||

./open-appsec-nginx-install

|

||||

$ wget https://downloads.openappsec.io/open-appsec-install && chmod +x open-appsec-install

|

||||

$ ./open-appsec-install --auto

|

||||

```

|

||||

|

||||

It is recommended to read the documentation or follow the video tutorial.

|

||||

For Linux, if you’ve built your own package use the following commands:

|

||||

|

||||

```bash

|

||||

$ install-cp-nano-agent.sh --install --hybrid_mode

|

||||

$ install-cp-nano-service-http-transaction-handler.sh –install

|

||||

$ install-cp-nano-attachment-registration-manager.sh --install

|

||||

```

|

||||

You can add the ```--token <token>``` and ```--email <email address>``` options to the first command, to get a token follow [documentation](https://docs.openappsec.io/getting-started/using-the-web-ui-saas/connect-deployed-agents-to-saas-management-k8s-and-linux).
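The three commands above run in sequence, with the optional `--token` and `--email` options added to the first one. A minimal sketch of that sequence follows. It assumes the installer scripts are on `PATH`. `install_local_package`, `run`, `AGENT_TOKEN`, `AGENT_EMAIL` and `DRY_RUN` are hypothetical names, not part of the open-appsec packages.

Building the first command as a bash array leaves the optional flags out of the command line when they are unset. Every flag is typed with ASCII hyphens, which avoids the failures a typographic dash would cause.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper around the three install commands shown above.

# Execute a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

install_local_package() {
  local -a agent=(install-cp-nano-agent.sh --install --hybrid_mode)
  # Optional SaaS connection details; only added when the variables are set.
  if [ -n "${AGENT_TOKEN:-}" ]; then agent+=(--token "$AGENT_TOKEN"); fi
  if [ -n "${AGENT_EMAIL:-}" ]; then agent+=(--email "$AGENT_EMAIL"); fi
  # Run the installers in order and stop at the first failure.
  run "${agent[@]}" &&
    run install-cp-nano-service-http-transaction-handler.sh --install &&
    run install-cp-nano-attachment-registration-manager.sh --install
}
```

For example, `DRY_RUN=1 AGENT_TOKEN=<token> install_local_package` prints the three commands without running them.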

For Docker: follow [documentation](https://docs.openappsec.io/getting-started/start-with-docker)

For more information read the [documentation](https://docs.openappsec.io/) or follow the [video tutorials](https://www.openappsec.io/tutorials).

# Repositories

open-appsec GitHub includes four main repositores:

open-appsec GitHub includes four main repositories:

* [openappsec/openappsec](https://github.com/openappsec/openappsec) the main code and logic of open-appsec. Developed in C++.
* [openappsec/attachment](https://github.com/openappsec/attachment) connects between processes that provide HTTP data (e.g NGINX) and the open-appsec Agent security logic. Developed in C.

@@ -78,12 +104,14 @@ Before compiling the services, you'll need to ensure the latest development vers

* GTest
* GMock
* cURL
* Redis
* Hiredis

An example of installing the packages on Alpine:

```bash
$ apk update
$ apk add boost-dev openssl-dev pcre2-dev libxml2-dev gtest-dev curl-dev
$ apk add boost-dev openssl-dev pcre2-dev libxml2-dev gtest-dev curl-dev hiredis-dev redis
```

## Compiling and packaging the agent code

@@ -102,7 +130,7 @@ An example of installing the packages on Alpine:

## Placing the agent code inside an Alpine docker image

Once the agent code has been compiled and packaged, an Alpine image running it can be created. This requires permissions to excute the `docker` command.

Once the agent code has been compiled and packaged, an Alpine image running it can be created. This requires permissions to execute the `docker` command.

```bash
$ make docker
```

@@ -110,26 +138,26 @@ Once the agent code has been compiled and packaged, an Alpine image running it c

This will create a local image for your docker called `agent-docker`.

## Deployment of the agent docker image as container

## Deployment of the agent docker image as a container

To run a Nano-Agent as a container the following steps are required:

1. If you are using a container management system / plan on deploying the container using your CI, add the agent docker image to an accessible registry.

2. If you are planning to manage the agent using the open-appsec UI, then make sure to obtain an agent token from the Management Portal and Enforce.

3. Run the agent with the follwing command (where –e https_proxy parameter is optional):

3. Run the agent with the following command (where -e https_proxy parameter is optional):

`docker run -d --name=agent-container --ipc=host -v=<path to persistent location for agent config>:/etc/cp/conf -v=<path to persistent location for agent data files>:/etc/cp/data -v=<path to persistent location for agent debugs and logs>:/var/log/nano_agent –e https_proxy=<user:password@Proxy address:port> -it <agent-image> /cp-nano-agent [--token <token> | --hybrid-mode]`

`docker run -d --name=agent-container --ipc=host -v=<path to persistent location for agent config>:/etc/cp/conf -v=<path to persistent location for agent data files>:/etc/cp/data -v=<path to persistent location for agent debugs and logs>:/var/log/nano_agent -e https_proxy=<user:password@Proxy address:port> -it <agent-image> /cp-nano-agent [--token <token> | --standalone]`

Example:

```bash
$ docker run -d --name=agent-container --ipc=host -v=/home/admin/agent/conf:/etc/cp/conf -v=/home/admin/agent/data:/etc/cp/data -v=/home/admin/agent/logs:/var/log/nano_agent –e https_proxy=user:password@1.2.3.4:8080 -it agent-docker /cp-nano-agent --hybrid-mode
$ docker run -d --name=agent-container --ipc=host -v=/home/admin/agent/conf:/etc/cp/conf -v=/home/admin/agent/data:/etc/cp/data -v=/home/admin/agent/logs:/var/log/nano_agent -e https_proxy=user:password@1.2.3.4:8080 -it agent-docker /cp-nano-agent --standalone
$ docker ps
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
1e67f2abbfd4 agent-docker "/cp-nano-agent --hybrid-mode" 1 minute ago Up 1 minute agent-container
```

Note that you are not requiered to use a token from the Management Portal if you are managing your security policy locally. However, you are required to use the --hybryd-mode flag in such case. In addition, the voliums in the command are mandatory only if you wish to have persistency upon restart/upgrade/crash of the agent and its re execution.

Lastly, --ipc=host argument is mandatory in order for the agent to have access to shared memory with a protected attachment (nginx server).

Note that you are not required to use a token from the Management Portal if you are managing your security policy locally. However, you are required to use the --standalone flag in such cases. In addition, the volumes in the command are mandatory only if you wish to have persistency upon restart/upgrade/crash of the agent and its re-execution.

Lastly, the --ipc=host argument is mandatory in order for the agent to have access to shared memory with a protected attachment (NGINX server).

4. Create or replace the NGINX container using the [Attachment Repository](https://github.com/openappsec/attachment).
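The `docker run` template in step 3 has two variable parts: the optional `-e https_proxy=...` setting and the choice between `--token <token>` and `--standalone`. The sketch below assembles the command as a bash array, so each part is added only when it applies.

It is an illustration, not an official launcher. `run_agent_container` and the `AGENT_HOME`, `AGENT_PROXY`, `AGENT_TOKEN`, `AGENT_IMAGE` and `DRY_RUN` variables are hypothetical. The defaults mirror the `/home/admin/agent` example paths and the `agent-docker` image name used above.

```bash
#!/usr/bin/env bash
# Hypothetical launcher that builds the step-3 `docker run` command.
run_agent_container() {
  local base="${AGENT_HOME:-/home/admin/agent}"
  # Fixed part: detached container sharing the host IPC namespace, with
  # persistent config, data and log volumes (as in the example above).
  local -a cmd=(docker run -d --name=agent-container --ipc=host
    "-v=${base}/conf:/etc/cp/conf"
    "-v=${base}/data:/etc/cp/data"
    "-v=${base}/logs:/var/log/nano_agent")
  # Optional outbound proxy, passed with an ASCII "-e".
  if [ -n "${AGENT_PROXY:-}" ]; then cmd+=(-e "https_proxy=${AGENT_PROXY}"); fi
  cmd+=(-it "${AGENT_IMAGE:-agent-docker}" /cp-nano-agent)
  # A Management Portal token, or --standalone for a locally managed policy.
  if [ -n "${AGENT_TOKEN:-}" ]; then
    cmd+=(--token "$AGENT_TOKEN")
  else
    cmd+=(--standalone)
  fi
  # Print instead of running when DRY_RUN=1.
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}
```

Two example invocations:

- `AGENT_PROXY=user:password@1.2.3.4:8080 run_agent_container` starts a locally managed agent through the proxy.
- Prefixing any invocation with `DRY_RUN=1` only prints the resulting command.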

@@ -31,5 +31,7 @@ DEFINE_KDEBUG_FLAG(statefulValidation)

```cpp
DEFINE_KDEBUG_FLAG(statelessValidation)
DEFINE_KDEBUG_FLAG(kernelMetric)
DEFINE_KDEBUG_FLAG(tproxy)
DEFINE_KDEBUG_FLAG(tenantStats)
DEFINE_KDEBUG_FLAG(uuidTranslation)

#endif // DEFINE_KDEBUG_FLAG
```

@@ -2,39 +2,124 @@

|

||||

|

||||

This file documents all notable changes to [ingress-nginx](https://github.com/kubernetes/ingress-nginx) Helm Chart. The release numbering uses [semantic versioning](http://semver.org).

|

||||

|

||||

### 4.4.0

|

||||

|

||||

* Adding support for disabling liveness and readiness probes to the Helm chart by @njegosrailic in https://github.com/kubernetes/ingress-nginx/pull/9238

|

||||

* add:(admission-webhooks) ability to set securityContext by @ybelMekk in https://github.com/kubernetes/ingress-nginx/pull/9186

|

||||

* #7652 - Updated Helm chart to use the fullname for the electionID if not specified. by @FutureMatt in https://github.com/kubernetes/ingress-nginx/pull/9133

|

||||

* Rename controller-wehbooks-networkpolicy.yaml. by @Gacko in https://github.com/kubernetes/ingress-nginx/pull/9123

|

||||

|

||||

### 4.3.0

|

||||

- Support for Kubernetes v.1.25.0 was added and support for endpoint slices

|

||||

- Support for Kubernetes v1.20.0 and v1.21.0 was removed

|

||||

- [8890](https://github.com/kubernetes/ingress-nginx/pull/8890) migrate to endpointslices

|

||||

- [9059](https://github.com/kubernetes/ingress-nginx/pull/9059) kubewebhookcertgen sha change after go1191

|

||||

- [9046](https://github.com/kubernetes/ingress-nginx/pull/9046) Parameterize metrics port name

|

||||

- [9104](https://github.com/kubernetes/ingress-nginx/pull/9104) Fix yaml formatting error with multiple annotations

|

||||

|

||||

### 4.2.1

|

||||

|

||||

- The sha of kube-webhook-certgen image & the opentelemetry image, in values file, was changed to new images built on alpine-v3.16.1

|

||||

- "[8896](https://github.com/kubernetes/ingress-nginx/pull/8896) updated to new images built today"

|

||||

|

||||

### 4.2.0

|

||||

|

||||

- Support for Kubernetes v1.19.0 was removed

|

||||

- "[8810](https://github.com/kubernetes/ingress-nginx/pull/8810) Prepare for v1.3.0"

|

||||

- "[8808](https://github.com/kubernetes/ingress-nginx/pull/8808) revert arch var name"

|

||||

- "[8805](https://github.com/kubernetes/ingress-nginx/pull/8805) Bump k8s.io/klog/v2 from 2.60.1 to 2.70.1"

|

||||

- "[8803](https://github.com/kubernetes/ingress-nginx/pull/8803) Update to nginx base with alpine v3.16"

|

||||

- "[8802](https://github.com/kubernetes/ingress-nginx/pull/8802) chore: start v1.3.0 release process"

|

||||

- "[8798](https://github.com/kubernetes/ingress-nginx/pull/8798) Add v1.24.0 to test matrix"

|

||||

- "[8796](https://github.com/kubernetes/ingress-nginx/pull/8796) fix: add MAC_OS variable for static-check"

|

||||

- "[8793](https://github.com/kubernetes/ingress-nginx/pull/8793) changed to alpine-v3.16"

|

||||

- "[8781](https://github.com/kubernetes/ingress-nginx/pull/8781) Bump github.com/stretchr/testify from 1.7.5 to 1.8.0"

|

||||

- "[8778](https://github.com/kubernetes/ingress-nginx/pull/8778) chore: remove stable.txt from release process"

|

||||

- "[8775](https://github.com/kubernetes/ingress-nginx/pull/8775) Remove stable"

|

||||

- "[8773](https://github.com/kubernetes/ingress-nginx/pull/8773) Bump github/codeql-action from 2.1.14 to 2.1.15"

|

||||

- "[8772](https://github.com/kubernetes/ingress-nginx/pull/8772) Bump ossf/scorecard-action from 1.1.1 to 1.1.2"

|

||||

- "[8771](https://github.com/kubernetes/ingress-nginx/pull/8771) fix bullet md format"

|

||||

- "[8770](https://github.com/kubernetes/ingress-nginx/pull/8770) Add condition for monitoring.coreos.com/v1 API"

|

||||

- "[8769](https://github.com/kubernetes/ingress-nginx/pull/8769) Fix typos and add links to developer guide"

|

||||

- "[8767](https://github.com/kubernetes/ingress-nginx/pull/8767) change v1.2.0 to v1.2.1 in deploy doc URLs"

|

||||

- "[8765](https://github.com/kubernetes/ingress-nginx/pull/8765) Bump github/codeql-action from 1.0.26 to 2.1.14"

|

||||

- "[8752](https://github.com/kubernetes/ingress-nginx/pull/8752) Bump github.com/spf13/cobra from 1.4.0 to 1.5.0"

|

||||

- "[8751](https://github.com/kubernetes/ingress-nginx/pull/8751) Bump github.com/stretchr/testify from 1.7.2 to 1.7.5"

|

||||

- "[8750](https://github.com/kubernetes/ingress-nginx/pull/8750) added announcement"
- "[8740](https://github.com/kubernetes/ingress-nginx/pull/8740) change sha e2etestrunner and echoserver"
- "[8738](https://github.com/kubernetes/ingress-nginx/pull/8738) Update docs to make it easier for noobs to follow step by step"
- "[8737](https://github.com/kubernetes/ingress-nginx/pull/8737) updated baseimage sha"
- "[8736](https://github.com/kubernetes/ingress-nginx/pull/8736) set ld-musl-path"
- "[8733](https://github.com/kubernetes/ingress-nginx/pull/8733) feat: migrate leaderelection lock to leases"
- "[8726](https://github.com/kubernetes/ingress-nginx/pull/8726) prometheus metric: upstream_latency_seconds"
- "[8720](https://github.com/kubernetes/ingress-nginx/pull/8720) Ci pin deps"
- "[8719](https://github.com/kubernetes/ingress-nginx/pull/8719) Working OpenTelemetry sidecar (base nginx image)"
- "[8714](https://github.com/kubernetes/ingress-nginx/pull/8714) Create Openssf scorecard"
- "[8708](https://github.com/kubernetes/ingress-nginx/pull/8708) Bump github.com/prometheus/common from 0.34.0 to 0.35.0"
- "[8703](https://github.com/kubernetes/ingress-nginx/pull/8703) Bump actions/dependency-review-action from 1 to 2"
- "[8701](https://github.com/kubernetes/ingress-nginx/pull/8701) Fix several typos"
- "[8699](https://github.com/kubernetes/ingress-nginx/pull/8699) fix the gosec test and a make target for it"
- "[8698](https://github.com/kubernetes/ingress-nginx/pull/8698) Bump actions/upload-artifact from 2.3.1 to 3.1.0"
- "[8697](https://github.com/kubernetes/ingress-nginx/pull/8697) Bump actions/setup-go from 2.2.0 to 3.2.0"
- "[8695](https://github.com/kubernetes/ingress-nginx/pull/8695) Bump actions/download-artifact from 2 to 3"
- "[8694](https://github.com/kubernetes/ingress-nginx/pull/8694) Bump crazy-max/ghaction-docker-buildx from 1.6.2 to 3.3.1"

### 4.1.2

- "[8587](https://github.com/kubernetes/ingress-nginx/pull/8587) Add CAP_SYS_CHROOT to DS/PSP when needed"
- "[8458](https://github.com/kubernetes/ingress-nginx/pull/8458) Add portNamePreffix Helm chart parameter"
- "[8522](https://github.com/kubernetes/ingress-nginx/pull/8522) Add documentation for controller.service.loadBalancerIP in Helm chart"

### 4.1.0

- "[8481](https://github.com/kubernetes/ingress-nginx/pull/8481) Fix log creation in chroot script"
- "[8479](https://github.com/kubernetes/ingress-nginx/pull/8479) changed nginx base img tag to img built with alpine3.14.6"
- "[8478](https://github.com/kubernetes/ingress-nginx/pull/8478) update base images and protobuf gomod"
- "[8468](https://github.com/kubernetes/ingress-nginx/pull/8468) Fallback to ngx.var.scheme for redirectScheme with use-forward-headers when X-Forwarded-Proto is empty"
- "[8456](https://github.com/kubernetes/ingress-nginx/pull/8456) Implement object deep inspector"
- "[8455](https://github.com/kubernetes/ingress-nginx/pull/8455) Update dependencies"
- "[8454](https://github.com/kubernetes/ingress-nginx/pull/8454) Update index.md"
- "[8447](https://github.com/kubernetes/ingress-nginx/pull/8447) typo fixing"
- "[8446](https://github.com/kubernetes/ingress-nginx/pull/8446) Fix suggested annotation-value-word-blocklist"
- "[8444](https://github.com/kubernetes/ingress-nginx/pull/8444) replace deprecated topology key in example with current one"
- "[8443](https://github.com/kubernetes/ingress-nginx/pull/8443) Add dependency review enforcement"
- "[8434](https://github.com/kubernetes/ingress-nginx/pull/8434) added new auth-tls-match-cn annotation"
- "[8426](https://github.com/kubernetes/ingress-nginx/pull/8426) Bump github.com/prometheus/common from 0.32.1 to 0.33.0"

### 4.0.18


- "[8291](https://github.com/kubernetes/ingress-nginx/pull/8291) remove git tag env from cloud build"
- "[8286](https://github.com/kubernetes/ingress-nginx/pull/8286) Fix OpenTelemetry sidecar image build"
- "[8277](https://github.com/kubernetes/ingress-nginx/pull/8277) Add OpenSSF Best practices badge"
- "[8273](https://github.com/kubernetes/ingress-nginx/pull/8273) Issue#8241"
- "[8267](https://github.com/kubernetes/ingress-nginx/pull/8267) Add fsGroup value to admission-webhooks/job-patch charts"
- "[8262](https://github.com/kubernetes/ingress-nginx/pull/8262) Updated confusing error"
- "[8256](https://github.com/kubernetes/ingress-nginx/pull/8256) fix: deny locations with invalid auth-url annotation"
- "[8253](https://github.com/kubernetes/ingress-nginx/pull/8253) Add a certificate info metric"
- "[8236](https://github.com/kubernetes/ingress-nginx/pull/8236) webhook: remove useless code."
- "[8227](https://github.com/kubernetes/ingress-nginx/pull/8227) Update libraries in webhook image"
- "[8225](https://github.com/kubernetes/ingress-nginx/pull/8225) fix inconsistent-label-cardinality for prometheus metrics: nginx_ingress_controller_requests"
- "[8221](https://github.com/kubernetes/ingress-nginx/pull/8221) Do not validate ingresses with unknown ingress class in admission webhook endpoint"
- "[8210](https://github.com/kubernetes/ingress-nginx/pull/8210) Bump github.com/prometheus/client_golang from 1.11.0 to 1.12.1"
- "[8209](https://github.com/kubernetes/ingress-nginx/pull/8209) Bump google.golang.org/grpc from 1.43.0 to 1.44.0"
- "[8204](https://github.com/kubernetes/ingress-nginx/pull/8204) Add Artifact Hub lint"
- "[8203](https://github.com/kubernetes/ingress-nginx/pull/8203) Fix Indentation of example and link to cert-manager tutorial"
- "[8201](https://github.com/kubernetes/ingress-nginx/pull/8201) feat(metrics): add path and method labels to requests countera"
- "[8199](https://github.com/kubernetes/ingress-nginx/pull/8199) use functional options to reduce number of methods creating an EchoDeployment"
- "[8196](https://github.com/kubernetes/ingress-nginx/pull/8196) docs: fix inconsistent controller annotation"
- "[8191](https://github.com/kubernetes/ingress-nginx/pull/8191) Using Go install for misspell"
- "[8186](https://github.com/kubernetes/ingress-nginx/pull/8186) prometheus+grafana using servicemonitor"
- "[8185](https://github.com/kubernetes/ingress-nginx/pull/8185) Append elements on match, instead of removing for cors-annotations"
- "[8179](https://github.com/kubernetes/ingress-nginx/pull/8179) Bump github.com/opencontainers/runc from 1.0.3 to 1.1.0"
- "[8173](https://github.com/kubernetes/ingress-nginx/pull/8173) Adding annotations to the controller service account"
- "[8163](https://github.com/kubernetes/ingress-nginx/pull/8163) Update the $req_id placeholder description"
- "[8162](https://github.com/kubernetes/ingress-nginx/pull/8162) Versioned static manifests"
- "[8159](https://github.com/kubernetes/ingress-nginx/pull/8159) Adding some geoip variables and default values"
- "[8155](https://github.com/kubernetes/ingress-nginx/pull/8155) #7271 feat: avoid-pdb-creation-when-default-backend-disabled-and-replicas-gt-1"
- "[8151](https://github.com/kubernetes/ingress-nginx/pull/8151) Automatically generate helm docs"
- "[8143](https://github.com/kubernetes/ingress-nginx/pull/8143) Allow to configure delay before controller exits"
- "[8136](https://github.com/kubernetes/ingress-nginx/pull/8136) add ingressClass option to helm chart - back compatibility with ingress.class annotations"
- "[8126](https://github.com/kubernetes/ingress-nginx/pull/8126) Example for JWT"

### 4.0.15

@@ -44,7 +129,7 @@ This file documents all notable changes to [ingress-nginx](https://github.com/ku

- [8118] https://github.com/kubernetes/ingress-nginx/pull/8118 Remove deprecated libraries, update other libs
- [8117] https://github.com/kubernetes/ingress-nginx/pull/8117 Fix codegen errors
- [8115] https://github.com/kubernetes/ingress-nginx/pull/8115 chart/ghaction: set the correct permission to have access to push a release


- [8098] https://github.com/kubernetes/ingress-nginx/pull/8098 generating SHA for CA only certs in backend_ssl.go + comparison of P…
- [8088] https://github.com/kubernetes/ingress-nginx/pull/8088 Fix Edit this page link to use main branch
- [8072] https://github.com/kubernetes/ingress-nginx/pull/8072 Expose GeoIP2 Continent code as variable
- [8061] https://github.com/kubernetes/ingress-nginx/pull/8061 docs(charts): using helm-docs for chart

@@ -54,7 +139,7 @@ This file documents all notable changes to [ingress-nginx](https://github.com/ku

- [8046] https://github.com/kubernetes/ingress-nginx/pull/8046 Report expired certificates (#8045)
- [8044] https://github.com/kubernetes/ingress-nginx/pull/8044 remove G109 check till gosec resolves issues
- [8042] https://github.com/kubernetes/ingress-nginx/pull/8042 docs_multiple_instances_one_cluster_ticket_7543


- [8041] https://github.com/kubernetes/ingress-nginx/pull/8041 docs: fix typo'd executable name
- [8035] https://github.com/kubernetes/ingress-nginx/pull/8035 Comment busy owners
- [8029] https://github.com/kubernetes/ingress-nginx/pull/8029 Add stream-snippet as a ConfigMap and Annotation option
- [8023] https://github.com/kubernetes/ingress-nginx/pull/8023 fix nginx compilation flags

@@ -71,7 +156,7 @@ This file documents all notable changes to [ingress-nginx](https://github.com/ku

- [7996] https://github.com/kubernetes/ingress-nginx/pull/7996 doc: improvement
- [7983] https://github.com/kubernetes/ingress-nginx/pull/7983 Fix a couple of misspellings in the annotations documentation.
- [7979] https://github.com/kubernetes/ingress-nginx/pull/7979 allow set annotations for admission Jobs


- [7977] https://github.com/kubernetes/ingress-nginx/pull/7977 Add ssl_reject_handshake to default server
- [7975] https://github.com/kubernetes/ingress-nginx/pull/7975 add legacy version update v0.50.0 to main changelog
- [7972] https://github.com/kubernetes/ingress-nginx/pull/7972 updated service upstream definition

@@ -119,11 +204,11 @@ This file documents all notable changes to [ingress-nginx](https://github.com/ku

- [7707] https://github.com/kubernetes/ingress-nginx/pull/7707 Release v1.0.2 of ingress-nginx

### 4.0.2


- [7681] https://github.com/kubernetes/ingress-nginx/pull/7681 Release v1.0.1 of ingress-nginx

### 4.0.1


- [7535] https://github.com/kubernetes/ingress-nginx/pull/7535 Release v1.0.0 ingress-nginx

@@ -1,22 +1,14 @@

annotations:
  artifacthub.io/changes: |
    - "[8459](https://github.com/kubernetes/ingress-nginx/pull/8459) Update default allowed CORS headers"
    - "[8202](https://github.com/kubernetes/ingress-nginx/pull/8202) disable modsecurity on error page"
    - "[8178](https://github.com/kubernetes/ingress-nginx/pull/8178) Add header Host into mirror annotations"
    - "[8213](https://github.com/kubernetes/ingress-nginx/pull/8213) feat: always set auth cookie"
    - "[8548](https://github.com/kubernetes/ingress-nginx/pull/8548) Implement reporting status classes in metrics"
    - "[8612](https://github.com/kubernetes/ingress-nginx/pull/8612) move so files under /etc/nginx/modules"
    - "[8624](https://github.com/kubernetes/ingress-nginx/pull/8624) Add patch to remove root and alias directives"
    - "[8623](https://github.com/kubernetes/ingress-nginx/pull/8623) Improve path rule"
    - "Update Ingress-Nginx version controller-v1.9.1"
  artifacthub.io/prerelease: "false"
apiVersion: v2
appVersion: 1.2.1
appVersion: latest
keywords:
- ingress
- nginx
kubeVersion: '>=1.19.0-0'
kubeVersion: '>=1.20.0-0'
name: open-appsec-k8s-nginx-ingress
sources:
- https://github.com/kubernetes/ingress-nginx
type: application
version: 4.1.4
version: 4.8.1

@@ -2,16 +2,15 @@

[ingress-nginx](https://github.com/kubernetes/ingress-nginx) Ingress controller for Kubernetes using NGINX as a reverse proxy and load balancer

To use, add the `ingressClassName: nginx` spec field or the `kubernetes.io/ingress.class: nginx` annotation to your Ingress resources.
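As a sketch, a minimal Ingress that opts into this controller through `spec.ingressClassName` might look like this. The resource name `example-ingress`, the host `example.com`, the Service `my-service`, and port `80` are hypothetical placeholders, not values taken from this chart.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: example-ingress            # hypothetical name
spec:
  ingressClassName: nginx          # selects this ingress-nginx controller
  rules:
    - host: example.com            # placeholder host
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: my-service   # hypothetical backend Service
                port:
                  number: 80       # placeholder port
```

If you use the legacy annotation instead, put `kubernetes.io/ingress.class: nginx` under `metadata.annotations` and omit `ingressClassName`.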

This chart bootstraps an ingress-nginx deployment on a [Kubernetes](http://kubernetes.io) cluster using the [Helm](https://helm.sh) package manager.

## Prerequisites

## Requirements

- Chart version 3.x.x: Kubernetes v1.16+
- Chart version 4.x.x and above: Kubernetes v1.19+

Kubernetes: `>=1.20.0-0`

## Get Repo Info

@@ -52,10 +51,6 @@ helm upgrade [RELEASE_NAME] [CHART] --install

_See [helm upgrade](https://helm.sh/docs/helm/helm_upgrade/) for command documentation._

### Upgrading With Zero Downtime in Production

By default, the ingress-nginx controller has service interruptions whenever its pods are restarted or redeployed. To avoid this, see the excellent blog post by Lindsay Landry from Codecademy: [Kubernetes: Nginx and Zero Downtime in Production](https://medium.com/codecademy-engineering/kubernetes-nginx-and-zero-downtime-in-production-2c910c6a5ed8).

### Migrating from stable/nginx-ingress

There are two main ways to migrate a release from `stable/nginx-ingress` to `ingress-nginx/ingress-nginx` chart:

@@ -66,7 +61,6 @@ There are two main ways to migrate a release from `stable/nginx-ingress` to `ing

1. Redirect your DNS traffic from the old controller to the new controller
1. Log traffic from both controllers during this changeover
1. [Uninstall](#uninstall-chart) the old controller once traffic has fully drained from it
1. For details on all of these steps see [Upgrading With Zero Downtime in Production](#upgrading-with-zero-downtime-in-production)

Note that there are some different and upgraded configurations between the two charts, described by Rimas Mocevicius from JFrog in the "Upgrading to ingress-nginx Helm chart" section of [Migrating from Helm chart nginx-ingress to ingress-nginx](https://rimusz.net/migrating-to-ingress-nginx). As the `ingress-nginx/ingress-nginx` chart continues to update, you will want to check current differences by running [helm configuration](#configuration) commands on both charts.

@@ -85,14 +79,14 @@ else it would make it impossible to evacuate a node. See [gh issue #7127](https:

### Prometheus Metrics

The Nginx ingress controller can export Prometheus metrics by setting `controller.metrics.enabled` to `true`.

The Ingress-Nginx Controller can export Prometheus metrics by setting `controller.metrics.enabled` to `true`.

You can add Prometheus annotations to the metrics service using `controller.metrics.service.annotations`.

Alternatively, if you use the Prometheus Operator, you can enable ServiceMonitor creation by setting `controller.metrics.serviceMonitor.enabled` to `true` and `controller.metrics.serviceMonitor.additionalLabels.release="prometheus"`. The `release=prometheus` label should match the label configured in your Prometheus ServiceMonitor (see `kubectl get servicemonitor prometheus-kube-prom-prometheus -oyaml -n prometheus`).
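As a sketch, the Prometheus Operator route could be written as a values file like this. `release: prometheus` is only the example label from the text above; replace it with whatever label your Prometheus instance actually selects ServiceMonitors by. If you scrape via Service annotations instead, set `controller.metrics.service.annotations` and leave `serviceMonitor.enabled` off.

```yaml
controller:
  metrics:
    enabled: true              # expose the controller's Prometheus metrics
    serviceMonitor:
      enabled: true            # requires the Prometheus Operator CRDs in the cluster
      additionalLabels:
        release: prometheus    # example label; must match your Prometheus ServiceMonitor selector
```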

### ingress-nginx nginx\_status page/stats server

Previous versions of this chart had a `controller.stats.*` configuration block, which is now obsolete due to the following changes in nginx ingress controller:

Previous versions of this chart had a `controller.stats.*` configuration block, which is now obsolete due to the following changes in Ingress-Nginx Controller:

- In [0.16.1](https://github.com/kubernetes/ingress-nginx/blob/main/Changelog.md#0161), the vts (virtual host traffic status) dashboard was removed
- In [0.23.0](https://github.com/kubernetes/ingress-nginx/blob/main/Changelog.md#0230), the status page at port 18080 is now a unix socket webserver only available at localhost.

@@ -100,7 +94,7 @@ Previous versions of this chart had a `controller.stats.*` configuration block,

### ExternalDNS Service Configuration

Add an [ExternalDNS](https://github.com/kubernetes-incubator/external-dns) annotation to the LoadBalancer service:

Add an [ExternalDNS](https://github.com/kubernetes-sigs/external-dns) annotation to the LoadBalancer service:

```yaml
controller:
@@ -111,7 +105,7 @@ controller:

### AWS L7 ELB with SSL Termination

Annotate the controller as shown in the [nginx-ingress l7 patch](https://github.com/kubernetes/ingress-nginx/blob/main/deploy/aws/l7/service-l7.yaml):

Annotate the controller as shown in the [nginx-ingress l7 patch](https://github.com/kubernetes/ingress-nginx/blob/ab3a789caae65eec4ad6e3b46b19750b481b6bce/deploy/aws/l7/service-l7.yaml):

```yaml
controller:
@@ -126,19 +120,6 @@ controller:
      service.beta.kubernetes.io/aws-load-balancer-connection-idle-timeout: '3600'
```

### AWS route53-mapper

To configure the LoadBalancer service with the [route53-mapper addon](https://github.com/kubernetes/kops/tree/master/addons/route53-mapper), add the `domainName` annotation and `dns` label:

```yaml
controller:
  service:
    labels:
      dns: "route53"
    annotations:
      domainName: "kubernetes-example.com"
```

### Additional Internal Load Balancer

This setup is useful when you need both external and internal load balancers but don't want to have multiple ingress controllers and multiple ingress objects per application.

@@ -162,8 +143,10 @@ controller:
    internal:
      enabled: true
      annotations:
        # Create internal ELB
        service.beta.kubernetes.io/aws-load-balancer-internal: "true"
        # Create internal NLB
        service.beta.kubernetes.io/aws-load-balancer-scheme: "internal"
        # Create internal ELB(Deprecated)
        # service.beta.kubernetes.io/aws-load-balancer-internal: "true"
        # Any other annotation can be declared here.
```

@@ -175,7 +158,7 @@ controller:
    internal:
      enabled: true
      annotations:
        # Create internal LB. More information: https://cloud.google.com/kubernetes-engine/docs/how-to/internal-load-balancing
        # For GKE versions 1.17 and later
        networking.gke.io/load-balancer-type: "Internal"
        # For earlier versions
@@ -206,17 +189,34 @@ controller:
        # Any other annotation can be declared here.
```

Load balancer annotations for other cloud service providers are listed in the Kubernetes documentation under [Internal load balancer](https://kubernetes.io/docs/concepts/services-networking/service/#internal-load-balancer).

A use case for this scenario is a split-view DNS setup, in which the public zone CNAME records point to the external load balancer URL while the private zone CNAME records point to the internal load balancer URL. This way, you need only one Ingress Kubernetes object.

Optionally, you can set `controller.service.loadBalancerIP` if you need a static IP for the resulting `LoadBalancer`.
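For illustration, a values override that pins the external LoadBalancer to a pre-reserved address might look like this sketch. `203.0.113.10` is a documentation-range placeholder, and whether the address is honored depends on your cloud provider. The upstream Service field `spec.loadBalancerIP` has been deprecated since Kubernetes v1.24, so some providers expect a provider-specific annotation instead.

```yaml
controller:
  service:
    loadBalancerIP: "203.0.113.10"   # placeholder; use an address reserved with your provider
```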

### Ingress Admission Webhooks

With nginx-ingress-controller version 0.25+, the nginx ingress controller pod exposes an endpoint that will integrate with the `validatingwebhookconfiguration` Kubernetes feature to prevent bad ingress from being added to the cluster.

With nginx-ingress-controller version 0.25+, the Ingress-Nginx Controller pod exposes an endpoint that will integrate with the `validatingwebhookconfiguration` Kubernetes feature to prevent bad ingress from being added to the cluster.
**This feature is enabled by default since 0.31.0.**

In nginx-ingress-controller 0.25.*, this feature works only with Kubernetes 1.14+; version 0.26 fixes [this issue](https://github.com/kubernetes/ingress-nginx/pull/4521).

#### How the Chart Configures the Hooks

A validating webhook configuration requires the endpoint to which the request is sent to use TLS. It is possible to set up custom certificates to do this, but in most cases, a self-signed certificate is enough. Setting up this component requires more complex orchestration when using Helm. The steps are designed to be idempotent and to allow turning the feature on and off without running into Helm quirks.

1. A pre-install hook provisions a certificate into the same namespace using a format compatible with provisioning using end-user certificates. If the certificate already exists, the hook exits.
2. The Ingress-Nginx Controller pod is configured to use a TLS proxy container, which will load that certificate.
3. Validating and Mutating webhook configurations are created in the cluster.
4. A post-install hook reads the CA from the secret created by step 1 and patches the Validating and Mutating webhook configurations. This process will allow a custom CA provisioned by some other process to also be patched into the webhook configurations. The chosen failure policy is also patched into the webhook configurations.
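The hook sequence above is controlled by keys that appear in the Values table of this README. This sketch writes their documented defaults out explicitly so that the mapping to the steps is visible. The comments describing what each key gates are an interpretation of the chart, not a statement from its authors.

```yaml
controller:
  admissionWebhooks:
    enabled: true          # deploy the validating webhook endpoint
    failurePolicy: Fail    # value patched into the webhook configurations (step 4)
    port: 8443             # assumed: port the controller's webhook endpoint listens on
    patch:
      enabled: true        # assumed: gates the hook Jobs that create the cert (step 1) and patch the CA (step 4)
```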

#### Alternatives

It should be possible to use [cert-manager/cert-manager](https://github.com/cert-manager/cert-manager) if a more complete solution is required.

You can enable automatic self-signed TLS certificate provisioning via cert-manager by setting the `controller.admissionWebhooks.certManager.enabled` value to true.

Please ensure that cert-manager is correctly installed and configured.
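The following is a minimal values sketch for the cert-manager route. It assumes cert-manager and its CRDs are already installed in the cluster. The duration keys come from the Values table and are left at their empty defaults. What an empty value resolves to is an assumption here (presumably cert-manager's own default certificate lifetime).

```yaml
controller:
  admissionWebhooks:
    enabled: true
    certManager:
      enabled: true        # provision the webhook certificate via cert-manager
      rootCert:
        duration: ""       # empty default; assumed to defer to cert-manager
      admissionCert:
        duration: ""       # empty default; assumed to defer to cert-manager
```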

### Helm Error When Upgrading: spec.clusterIP: Invalid value: ""

If you are upgrading this chart from a version between 0.31.0 and 1.2.2, you may get an error like this:

@@ -229,10 +229,6 @@ Detail of how and why are in [this issue](https://github.com/helm/charts/pull/13

As of version `1.26.0` of this chart, if you do not provide any clusterIP value, the error `invalid: spec.clusterIP: Invalid value: "": field is immutable` no longer occurs, because `clusterIP: ""` is not rendered.

|

||||

|

||||

## Requirements

|

||||

|

||||

Kubernetes: `>=1.19.0-0`

|

||||

|

||||

## Values

|

||||

|

||||

| Key | Type | Default | Description |

|

||||

@@ -240,38 +236,46 @@ Kubernetes: `>=1.19.0-0`

|

||||

| commonLabels | object | `{}` | |

|

||||

| controller.addHeaders | object | `{}` | Will add custom headers before sending response traffic to the client according to: https://kubernetes.github.io/ingress-nginx/user-guide/nginx-configuration/configmap/#add-headers |

|

||||

| controller.admissionWebhooks.annotations | object | `{}` | |

|

||||

| controller.admissionWebhooks.certManager.admissionCert.duration | string | `""` | |

|

||||

| controller.admissionWebhooks.certManager.enabled | bool | `false` | |

|

||||

| controller.admissionWebhooks.certManager.rootCert.duration | string | `""` | |

|

||||

| controller.admissionWebhooks.certificate | string | `"/usr/local/certificates/cert"` | |

|

||||

| controller.admissionWebhooks.createSecretJob.resources | object | `{}` | |

|

||||

| controller.admissionWebhooks.createSecretJob.securityContext.allowPrivilegeEscalation | bool | `false` | |

|

||||

| controller.admissionWebhooks.enabled | bool | `true` | |

|

||||

| controller.admissionWebhooks.existingPsp | string | `""` | Use an existing PSP instead of creating one |

|

||||

| controller.admissionWebhooks.failurePolicy | string | `"Fail"` | |

|

||||

| controller.admissionWebhooks.extraEnvs | list | `[]` | Additional environment variables to set |

|

||||

| controller.admissionWebhooks.failurePolicy | string | `"Fail"` | Admission Webhook failure policy to use |

|

||||

| controller.admissionWebhooks.key | string | `"/usr/local/certificates/key"` | |

|

||||

| controller.admissionWebhooks.labels | object | `{}` | Labels to be added to admission webhooks |

|

||||

| controller.admissionWebhooks.namespaceSelector | object | `{}` | |

|

||||

| controller.admissionWebhooks.objectSelector | object | `{}` | |

|

||||

| controller.admissionWebhooks.patch.enabled | bool | `true` | |

|

||||

| controller.admissionWebhooks.patch.fsGroup | int | `2000` | |

|

||||

| controller.admissionWebhooks.patch.image.digest | string | `"sha256:64d8c73dca984af206adf9d6d7e46aa550362b1d7a01f3a0a91b20cc67868660"` | |

|

||||

| controller.admissionWebhooks.patch.image.digest | string | `"sha256:543c40fd093964bc9ab509d3e791f9989963021f1e9e4c9c7b6700b02bfb227b"` | |

|

||||

| controller.admissionWebhooks.patch.image.image | string | `"ingress-nginx/kube-webhook-certgen"` | |

|

||||

| controller.admissionWebhooks.patch.image.pullPolicy | string | `"IfNotPresent"` | |

|

||||

| controller.admissionWebhooks.patch.image.registry | string | `"k8s.gcr.io"` | |

|

||||

| controller.admissionWebhooks.patch.image.tag | string | `"v1.1.1"` | |

|

||||

| controller.admissionWebhooks.patch.image.registry | string | `"registry.k8s.io"` | |

|

||||

| controller.admissionWebhooks.patch.image.tag | string | `"v20230407"` | |

|

||||

| controller.admissionWebhooks.patch.labels | object | `{}` | Labels to be added to patch job resources |

|

||||

| controller.admissionWebhooks.patch.nodeSelector."kubernetes.io/os" | string | `"linux"` | |

|

||||

| controller.admissionWebhooks.patch.podAnnotations | object | `{}` | |

|

||||

| controller.admissionWebhooks.patch.priorityClassName | string | `""` | Provide a priority class name to the webhook patching job |

|

||||

| controller.admissionWebhooks.patch.runAsUser | int | `2000` | |

|

||||

| controller.admissionWebhooks.patch.priorityClassName | string | `""` | Provide a priority class name to the webhook patching job # |

|

||||

| controller.admissionWebhooks.patch.securityContext.fsGroup | int | `2000` | |

|

||||

| controller.admissionWebhooks.patch.securityContext.runAsNonRoot | bool | `true` | |

|

||||

| controller.admissionWebhooks.patch.securityContext.runAsUser | int | `2000` | |

|

||||

| controller.admissionWebhooks.patch.tolerations | list | `[]` | |

|

||||

| controller.admissionWebhooks.patchWebhookJob.resources | object | `{}` | |

|

||||

| controller.admissionWebhooks.patchWebhookJob.securityContext.allowPrivilegeEscalation | bool | `false` | |

|

||||

| controller.admissionWebhooks.port | int | `8443` | |

|

||||

| controller.admissionWebhooks.service.annotations | object | `{}` | |

|

||||

| controller.admissionWebhooks.service.externalIPs | list | `[]` | |

|

||||

| controller.admissionWebhooks.service.loadBalancerSourceRanges | list | `[]` | |

|

||||

| controller.admissionWebhooks.service.servicePort | int | `443` | |

|

||||

| controller.admissionWebhooks.service.type | string | `"ClusterIP"` | |

|

||||

| controller.affinity | object | `{}` | Affinity and anti-affinity rules for server scheduling to nodes |

|

||||

| controller.allowSnippetAnnotations | bool | `true` | This configuration defines if Ingress Controller should allow users to set their own *-snippet annotations, otherwise this is forbidden / dropped when users add those annotations. Global snippets in ConfigMap are still respected |

|

||||

| controller.annotations | object | `{}` | Annotations to be added to the controller Deployment or DaemonSet |

|

||||

| controller.affinity | object | `{}` | Affinity and anti-affinity rules for server scheduling to nodes # Ref: https://kubernetes.io/docs/concepts/configuration/assign-pod-node/#affinity-and-anti-affinity # |

|

||||

| controller.allowSnippetAnnotations | bool | `false` | This configuration defines if Ingress Controller should allow users to set their own *-snippet annotations, otherwise this is forbidden / dropped when users add those annotations. Global snippets in ConfigMap are still respected |

|

||||

| controller.annotations | object | `{}` | Annotations to be added to the controller Deployment or DaemonSet # |

|

||||

| controller.autoscaling.annotations | object | `{}` | |
| controller.autoscaling.behavior | object | `{}` | |
| controller.autoscaling.enabled | bool | `false` | |
| controller.autoscaling.maxReplicas | int | `11` | |

@@ -288,30 +292,35 @@ Kubernetes: `>=1.19.0-0`

| controller.customTemplate.configMapName | string | `""` | |
| controller.dnsConfig | object | `{}` | Optionally customize the pod `dnsConfig`. |
| controller.dnsPolicy | string | `"ClusterFirst"` | Optionally change this to `ClusterFirstWithHostNet` if you have `hostNetwork: true`. By default, when using the host network, name resolution uses the host's DNS. If you want nginx-controller to keep resolving names inside the Kubernetes network, use `ClusterFirstWithHostNet`. |
| controller.electionID | string | `"ingress-controller-leader"` | Election ID to use for status updates |
| controller.enableMimalloc | bool | `true` | Enable mimalloc as a drop-in replacement for malloc. |
| controller.electionID | string | `""` | Election ID to use for status updates. By default, it uses the controller name combined with a suffix of 'leader' |
| controller.enableAnnotationValidations | bool | `false` | |
| controller.enableMimalloc | bool | `true` | Enable mimalloc as a drop-in replacement for malloc. Ref: https://github.com/microsoft/mimalloc |
| controller.enableTopologyAwareRouting | bool | `false` | Enables the Topology Aware Routing feature, used together with the service annotation `service.kubernetes.io/topology-mode="auto"`. Defaults to false |
| controller.existingPsp | string | `""` | Use an existing PodSecurityPolicy (PSP) instead of creating one |
| controller.extraArgs | object | `{}` | Additional command-line arguments to pass to nginx-ingress-controller. E.g. to specify the default SSL certificate you can use |
| controller.extraArgs | object | `{}` | Additional command-line arguments to pass to the Ingress-Nginx Controller. E.g. to specify the default SSL certificate you can use |
| controller.extraContainers | list | `[]` | Additional containers to be added to the controller pod. See https://github.com/lemonldap-ng-controller/lemonldap-ng-controller for an example. |
| controller.extraEnvs | list | `[]` | Additional environment variables to set |
| controller.extraInitContainers | list | `[]` | Containers that run before the app containers are started. |
| controller.extraModules | list | `[]` | |